Part II in Our Series on the Resurgency of Artificial Intelligence

In Part I of this series we pointed to the importance of large electronic knowledge bases (Big Data) in the recent advances in AI for knowledge- and text-based systems. Amongst other factors such as speedy GPUs and algorithm advances, we noted that electronic knowledge bases are perhaps the most important factor in the resurgence of artificial intelligence.

But the real question is not what the most important factor in the resurgence of AI may be — though the answer to that points to vetted, reference training sources. The real question is: How can we systematize this understanding by improving the usefulness of knowledge bases to support AI machine learning? Knowing that knowledge bases are important is not enough. If we can better understand what promotes — and what hinders — KBs for machine learning, perhaps we can design KB approaches that are even quicker and more effective for AI. In short, what should we be coding to promote knowledge-based artificial intelligence (KBAI)?

Why is There Not a Systematic Approach to Using KBs in AI?

To probe this question, let’s take the case of Wikipedia, the most important of the knowledge bases for AI machine learning purposes. According to Google Scholar, there have been more than 15,000 research articles relating Wikipedia to machine learning or artificial intelligence [1]. Growth in articles noting the use of Wikipedia for AI has been particularly strong in the past five years [1].

But two things are remarkable about this use of Wikipedia. First, virtually every one of these papers is a one-off. Each project stages and uses Wikipedia in its own way, with its own methodology and focus. Second, Wikipedia makes its contributions despite the many weaknesses and gaps within the knowledge base itself. Clearly, even with these weaknesses, the availability of such large-scale knowledge in electronic form still provides significant advantages to AI and machine learning for natural language applications and text understanding. How much better might our learners be if we fed them more complete and coherent information?

Readers of this blog will be familiar with my periodic criticisms of Wikipedia as a structured knowledge resource [2]. These criticisms are not related to the general scope and coverage of Wikipedia, which, overall, is remarkable and unprecedented in human endeavor. Rather, the criticisms relate to the use of Wikipedia as-is for knowledge representation purposes. To recap, here are some of the weaknesses of Wikipedia as a knowledge resource for AI:

- Incoherency — the category structure of Wikipedia is particularly problematic. More than 60% of existing Wikipedia categories are not true (or “natural”) categories at all, but represent groupings of convenience or compound attributes (such as Films directed by Pedro Almodóvar or Ambassadors of the United States to Mexico) [3]

- Incomplete structure — attributes, as presented in Wikipedia’s infoboxes, are incomplete within and across entities, and links also have gaps. Wikidata offers promise for bringing greater consistency, but much will need to be achieved with bots, and provenance remains an issue

- Incomplete coverage — the coverage and scope of Wikipedia are spotty, especially across language versions, and in any case the entities and concepts covered need to meet Wikipedia’s notability guidelines. For much domain analysis, Wikipedia’s domain coverage is inadequate. It would also be helpful if there were ways to extend the KB’s coverage for local or enterprise purposes

- Inaccuracies — actually, given its crowdsourced nature, popular portions of Wikipedia are fairly well vetted for accuracy. Peripheral or stub aspects of Wikipedia, however, may retain inaccuracies of coverage, tone or representation.
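The “incomplete structure” weakness is one of the easier ones to quantify. The following is a minimal sketch, assuming infobox-style attributes have already been extracted into plain Python dictionaries (the extraction step and the sample records are hypothetical). For each attribute it reports the fraction of entities of one type that carry a non-empty value, so that sparse attributes can be flagged for bot-assisted filling or excluded from feature sets.

```python
from collections import Counter


def attribute_fill_rates(entities):
    """Map each attribute to the fraction of entities with a non-empty value for it.

    Attributes that are never filled on any entity do not appear in the result.
    """
    if not entities:
        return {}
    counts = Counter()
    for attrs in entities.values():
        # Count an attribute only when its value is present and non-empty
        counts.update(name for name, value in attrs.items() if value)
    total = len(entities)
    return {name: n / total for name, n in sorted(counts.items())}


def sparse_attributes(entities, threshold=0.5):
    """List attributes filled on fewer than `threshold` of the entities."""
    rates = attribute_fill_rates(entities)
    return sorted(name for name, rate in rates.items() if rate < threshold)


# Hypothetical infobox-style records for a single entity type
films = {
    "Film A": {"director": "X", "release_year": "1988", "runtime": "90"},
    "Film B": {"director": "X", "release_year": "1999"},
    "Film C": {"director": "Y", "runtime": ""},
}

print(sparse_attributes(films))  # ['runtime']
```

The 0.5 threshold is arbitrary; in practice it would be tuned per entity type and per learning task.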

As the tremendous use of Wikipedia for research shows, none of these weaknesses is fatal, and none alone has prevented meaningful use of the knowledge base. Further, there is much active research in areas such as knowledge base population [4] that promises to help remedy some of these weaknesses. The success of knowledge bases in training AI machine learners is also now raising awareness that KB design for AI purposes is a worthwhile research topic in its own right. Much work is also under way on AI-driven bots for both Wikipedia and Wikidata. A better understanding of how to test and ensure coherency, matched with a knowledge graph for inferencing and logic, should help promote better and faster AI learners. The same techniques for testing consistency and coherence may be applied to mapping external KBs into such a reference structure.
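A coherency test of the kind described above can start very simply. The sketch below assumes a hypothetical miniature knowledge graph with single-parent subclass links and one disjointness axiom (`CreativeWork` vs. `Person`). It flags any entity whose assigned types, once expanded to their superclasses, fall under two classes declared disjoint. A full OWL reasoner would do far more; this only illustrates testing a mapping against the logic of the graph.

```python
# Hypothetical mini knowledge graph: child class -> parent class
SUBCLASS_OF = {
    "Film": "CreativeWork",
    "Novel": "CreativeWork",
    "Director": "Person",
    "Ambassador": "Person",
}
# Hypothetical disjointness axioms: no entity may fall under both classes
DISJOINT = {frozenset({"CreativeWork", "Person"})}


def ancestors(cls):
    """Return cls plus all its superclasses (assumes an acyclic, single-parent hierarchy)."""
    result = [cls]
    while cls in SUBCLASS_OF:
        cls = SUBCLASS_OF[cls]
        result.append(cls)
    return result


def incoherent_assignments(type_assignments):
    """Return (entity, class_a, class_b) for entities whose types violate a disjointness axiom."""
    problems = []
    for entity, types in type_assignments.items():
        closure = set()
        for t in types:
            closure.update(ancestors(t))
        for pair in DISJOINT:
            if pair <= closure:  # both disjoint classes are reachable
                a, b = sorted(pair)
                problems.append((entity, a, b))
    return problems


assignments = {
    "Talk to Her": {"Film"},
    "Pedro Almodóvar": {"Director"},
    "Bad Mapping": {"Film", "Ambassador"},  # deliberately incoherent example
}
print(incoherent_assignments(assignments))
```

The same check can be run against an external KB mapped into the reference graph: any reported triple points to a mapping error or to an incoherent source category.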

Thus, the real question again is: How can we systematize the usefulness of knowledge bases to support AI machine learning? Simply by asking this question we can alter our mindset to discover readily available ways to improve knowledge bases for KBAI purposes.

Working Backwards from the Needs of Machine Learners

The best perspective to take on how to optimize knowledge bases for artificial intelligence derives from the needs of the machine learners. Not all individual learners have all of these needs, but from the perspective of a “platform” or “factory” for machine learners, the knowledge base and supporting structures should consider all of these factors:

- features — are the raw input to machine learners, and may be evident, such as attributes, syntax or semantics, or may be hidden or “latent” [5]. A knowledge base such as Wikipedia can expose literally hundreds of different feature sets [5]. Of course, only a few of these feature types are useful for a given learning task, and many provide nearly duplicate “signals”. But across multiple learning tasks, many different feature types are desirable and can be made available to learners

- knowledge graph — is the schematic representation of the KB domain, and is the basis for setting the coherency and logic and inference structure for the represented knowledge. The knowledge graph, provided in the form of an ontology, is the means by which logical slices can be identified and cleaved for such areas as entity type selection or the segregation of training sets. In situations like Wikipedia, where the existing category structure is often incoherent, re-expressing the knowledge in an existing, proven schema is one viable approach

- positive and negative training sets — for supervised learning, positive training sets provide a group of labeled, desired outputs, while negative training sets are similar in most respects but do not meet the desired conditions. The training sets provide the labeled outputs against which the machine learner is trained. Provision of both negative and positive sets is helpful, and the accuracy of the learner is in part a function of how “correct” the labeled training sets are

- reference (“gold”) standards — vetted reference results, which are really validated training sets and therefore more rigorous to produce, are important for testing the precision, recall and accuracy of machine learners [6]. This verification is essential when testing the usefulness of various input features as well as model parameters. Without known standards, it is hard to get many learners to converge on effective predictions

- keeping the KBs current — the nature of knowledge is that it is constantly changing and growing. As a result, knowledge bases used in KBAI are constantly in flux, and the restructuring and feature set generation from the knowledge base must be updated periodically. Keeping KBs current means that the overall process of staging knowledge bases for KBAI purposes must be made systematic through the use of scripts, build routines and validation tests. General administrative and management capabilities are also critical, and

- repeatability — all of these steps must be repeatable, since new iterations of the knowledge base must retain coherency and consistency.
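Several of these needs interact: the knowledge graph is what lets training sets be sliced, and gold standards are what let the results be scored. The following minimal sketch, using a hypothetical type hierarchy and entity typings, draws a positive training set from entities under a target class, draws a negative set from a similar sibling slice (same parent class, but not the target), and then scores a learner's output against a gold standard with precision and recall. The names `slice_training_sets` and `precision_recall` are illustrative only, not part of any library.

```python
# Hypothetical type hierarchy (child -> parent) and entity typings
PARENT = {
    "Film": "CreativeWork", "Novel": "CreativeWork",
    "Musician": "Person", "Politician": "Person",
    "CreativeWork": "Thing", "Person": "Thing",
}
ENTITY_TYPE = {
    "e1": "Film", "e2": "Film", "e3": "Novel",
    "e4": "Musician", "e5": "Politician", "e6": "Novel",
}


def is_a(cls, target):
    """True if cls equals target or is a (transitive) subclass of it."""
    while cls is not None:
        if cls == target:
            return True
        cls = PARENT.get(cls)
    return False


def slice_training_sets(target, contrast):
    """Positives fall under `target`; negatives fall under `contrast` but not `target`."""
    pos = sorted(e for e, t in ENTITY_TYPE.items() if is_a(t, target))
    neg = sorted(e for e, t in ENTITY_TYPE.items()
                 if is_a(t, contrast) and not is_a(t, target))
    return pos, neg


def precision_recall(predicted, gold):
    """Score a set of predicted positives against a gold-standard set."""
    predicted, gold = set(predicted), set(gold)
    tp = len(predicted & gold)
    precision = tp / len(predicted) if predicted else 0.0
    recall = tp / len(gold) if gold else 0.0
    return precision, recall


positives, negatives = slice_training_sets("Film", "CreativeWork")
# positives == ["e1", "e2"]; negatives == ["e3", "e6"] (other creative works)

gold_films = {"e1", "e2"}
learner_output = {"e1", "e3"}  # hypothetical learner predictions
print(precision_recall(learner_output, gold_films))  # (0.5, 0.5)
```

Drawing negatives from a sibling class, rather than from the whole KB, keeps them "similar in most respects", which is what makes them informative for the learner.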

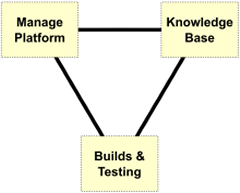

Thus, an effective knowledge base to support KBAI should have a number of desirable aspects. It should have maximum structure and exposed features. It should be organized by a coherent knowledge graph that can be effectively sliced and reasoned over. It must be testable via logic, consistency and performance tests, so that training and reference sets may be vetted and refined. And it must have repeatable and verifiable scripts for updating the underlying KB as it changes and for generating new, working feature sets. Moreover, means for generally managing and manipulating the knowledge base and knowledge graph are important. These desirable aspects constitute a triad of required functionality: a platform for managing the knowledge base and knowledge graph; repeatable build and testing scripts; and a mapping of the knowledge base into a coherent knowledge graph and a computable structure for feature and training sets.

Guidance for a Research Agenda

Achieving a purposeful knowledge base for KBAI uses is neither a stretch nor technically risky. We see the broad outlines of the requirements in the discussion above.

Two-thirds of the triad are relatively straightforward. First, creating a platform for managing the KBs is a fairly standard requirement for knowledge and semantics purposes; many existing platforms can access and manage knowledge graphs, knowledge bases, and instance data at the necessary scales. Second, the build and testing scripts do require systematic attention, but these are not difficult tasks and are quite common in many settings. Build and testing scripts can often prove brittle, so care must go into their design to keep them maintainable. Fortunately, these are mostly matters of proper focus and good practice, not conceptual difficulty.
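As an illustration of what such build and testing scripts might check, here is a minimal sketch under stated assumptions: each KB record is a dictionary with an `id` key, and the two validation rules (unique ids, every record typed) are hypothetical placeholders for a real test suite. Dropping empty values and sorting records before hashing makes the build repeatable, so two runs over the same KB content yield the same fingerprint regardless of input order, and any validation failure halts the build.

```python
import hashlib
import json


def build_snapshot(records):
    """Normalize KB records deterministically and return (records, sha256 fingerprint).

    Assumes every record has a non-empty "id"; empty or None values are dropped.
    """
    normalized = sorted(
        ({k: v for k, v in r.items() if v not in (None, "")} for r in records),
        key=lambda r: r["id"],
    )
    payload = json.dumps(normalized, sort_keys=True, ensure_ascii=False)
    return normalized, hashlib.sha256(payload.encode("utf-8")).hexdigest()


def validate(snapshot):
    """Return a list of failed checks (hypothetical example rules)."""
    failures = []
    ids = [r["id"] for r in snapshot]
    if len(ids) != len(set(ids)):
        failures.append("duplicate ids")
    for r in snapshot:
        if "type" not in r:
            failures.append(f"{r['id']}: missing type")
    return failures


def run_build(records):
    """Build a snapshot and refuse to publish it if any validation check fails."""
    snapshot, digest = build_snapshot(records)
    failures = validate(snapshot)
    if failures:
        raise ValueError("validation failed: " + "; ".join(failures))
    return snapshot, digest


records = [
    {"id": "Q2", "type": "Film", "year": "1999"},
    {"id": "Q1", "type": "Person", "note": ""},
]
snapshot, digest = run_build(records)
_, digest_again = run_build(list(reversed(records)))
print(digest == digest_again)  # True
```

Comparing fingerprints across periodic rebuilds is one cheap way to tell whether a refreshed KB actually changed before regenerating feature sets.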

The major challenges reside in the third leg of the triad, namely the twin needs to map the knowledge base into a coherent knowledge graph and into an underlying speculative grammar that is logically computable, so as to support the expression of both feature and training sets. A variety of upper ontologies and lexical frameworks such as WordNet have been tried as the guiding graph structures for knowledge bases [7]. To my knowledge, none of these options has been scrutinized with the specific requirements of KBAI support in mind. As for the other twin need, a speculative grammar, our research to date [8] points to the importance of segregating KB information into topics (concepts), relations and relation types, entities and entity types, and attributes and attribute types. Possible further distinctions, such as roles, annotations (metadata), events and expressions of mereology, still require research. The role, placement and use of rules also remain to be determined.
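To make the proposed segregation concrete, the sketch below routes (subject, predicate, object) assertions into topic, entity, relation and attribute buckets. The conventions are assumptions for illustration only, not the grammar from our research: `subClassOf` links are treated as concept (topic) structure, `type` assertions to declared entity types create entities, and relation and attribute predicates are declared up front. Anything left over lands in an `unclassified` bucket, which is where candidates such as roles, annotations or events would surface.

```python
from dataclasses import dataclass, field


@dataclass
class Partition:
    """Hypothetical buckets for segregated KB assertions."""
    topics: set = field(default_factory=set)         # concepts in the concept hierarchy
    entities: dict = field(default_factory=dict)     # entity -> entity type
    relations: list = field(default_factory=list)    # (subject, relation type, object)
    attributes: list = field(default_factory=list)   # (entity, attribute type, literal)
    unclassified: list = field(default_factory=list)


def segregate(triples, entity_types, relation_types, attribute_types):
    """Route each (subject, predicate, object) triple into one bucket."""
    p = Partition()
    for s, pred, o in triples:
        if pred == "subClassOf":
            p.topics.update((s, o))  # both ends of a concept link are topics
        elif pred == "type" and o in entity_types:
            p.entities[s] = o
        elif pred in relation_types:
            p.relations.append((s, pred, o))
        elif pred in attribute_types:
            p.attributes.append((s, pred, o))
        else:
            # Leftovers (roles, annotations, events, ...) remain open questions
            p.unclassified.append((s, pred, o))
    return p


triples = [
    ("Film", "subClassOf", "CreativeWork"),
    ("Example Film", "type", "Film"),
    ("Example Film", "directedBy", "Example Director"),
    ("Example Film", "releaseYear", "1999"),
    ("Example Film", "editorialNote", "needs citation"),
]
kb = segregate(triples, entity_types={"Film"},
               relation_types={"directedBy"}, attribute_types={"releaseYear"})
print(kb.unclassified)  # [('Example Film', 'editorialNote', 'needs citation')]
```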