Self-service Information Management for Knowledge Workers

Though I have alluded to it numerous times in my past writings [1], I think one of the most pervasive and important benefits from semantic technologies in the enterprise will come from the democratization of information. These benefits will arise mostly from a fundamental change in how we manage and consume information. A new “system” of semantic technologies is now largely available that can put the collection, assembly, organization, analysis and presentation of information directly in the hands of those who need it most — the consumers of information.

The idea of “democratizing information” has been around for a couple of decades, and has accelerated since the rise of the Internet. Most commonly, the idea is associated with developments and notions in such areas as citizen journalism, crowdsourcing, the wisdom of the crowd, social bookmarking (or collaborative tagging), and the democratic (small “d”) access to publishing via new channels such as blogs, microblogs (e.g., Twitter) and wikis. To be sure, these kinds of democratic information will benefit (and already are benefiting) from the use and application of semantics.

But the trend I’m focusing on here is much different and quite new. It is the idea that enterprise knowledge workers can now take ownership and control of their knowledge management functions. In the process, prior bottlenecks due to IT can be relieved and massive new benefits can open up to the enterprise.

Decades-long Mismatches Between KM and IT

– Tim Bray [2]

It is no secret that IT has not served the enterprise knowledge management function well for decades. Transaction systems and database systems geared to fast indexing and access to datum have not proved well suited to information or knowledge management. KM includes such applications as business intelligence, data warehousing, data integration and federation, enterprise information integration and management, competitive intelligence, knowledge representation, and so forth. Information management is a bit broader category, and adds such functions as document management, data management, enterprise content management, enterprise or controlled vocabularies, systems analysis, information standards and information assets management to the basic functions of KM. Since the purpose of this piece is not to get into the epistemological differences between information and knowledge, I use these terms more-or-less interchangeably herein.

Knowledge and information management is very big business. Given the breadth and differences in defining the KM and IM markets, let’s take as a proxy the business intelligence (BI) market, one of KM’s most important elements. Various estimates from IDC, Gartner and others place the current value of BI software sales somewhere in the range of $9 billion to $11 billion annually [3]. Further, BI ranked number five on the list of the top 10 technology priorities for chief information officers (CIOs) in 2011. And this pertains to the structured component of information alone.

Yet, at the same time, BI-related projects continue to have high failure rates, often cited as 65% or higher [4]. These failure rates are consistent with KM projects in general [5]. These failures are merely one expression of a constant litany of issues and concerns regarding the enterprise KM function:

| Conventional KM Problem Area | Comments |
| --- | --- |
| Inflexible Reports | |
| Inflexible Analysis | |
| Schema Bottlenecks | |
| ETL Bottlenecks | |
| Reliance on Intermediaries | |
| Specialized Expertise Required | |
| Slow Response Time | |
| Dependence on External Apps | |
| Unmet Needs | |
| High Opportunity Costs | |
| High Failure Rates | |

The seeming contradiction between continued growth and expenditures for information management coupled with continued high failure rates and disappointments is really an expression of the centrality of information to the modern enterprise. The funding and growth of the IT function is itself an expression of this centrality and perceived importance. These have been abiding trends in our transition to information or knowledge economies.

Bray [2] places the fault for wasted initiatives within the culture of IT. I believe there is some truth to this — variably, of course, depending on the specific enterprise. But the real culprit, I believe, has been the past need to “intermediate” a layer of software and IT expertise between knowledge workers and their source information. A progression of tasks has been necessary — conducted over decades with advances and learning — to get paper information into electronic form, get those forms to be understood and operate in some common ways, and then to develop tools, architectures and frameworks to make sense of it. Yet, as more tasks with required specialized skills have been added to this layer, the actual gulf between worker and information has increased. For example, enterprises still require the overhead and layers of IT to write SQL to get information out and then to prepare and fix reports.

On average, IT now consumes about 4% of all enterprise expenditures and employs about 6% of enterprise workers [6]. IT has become a very thick intermediary layer, indeed! Yet, because of the advances and learning that have occurred in growing and nurturing this layer, we also now have the basis to begin to “disintermediate” the IT layer. Many, if not all, of the challenges noted in the table above can be improved by doing so.

Early Attempts at Self-service and Semantics

One current buzzword in business intelligence is “self service”. By this term is meant giving knowledge workers the tools and systems for creating reports or doing analysis on their own without needing to work through (or be frustrated by) the IT layer. Self-service software was first postulated in the 1990s as a way for information consumers and authors (typically subject-matter experts) to automate some of their knowledge management tasks. Today, it is most commonly applied to self-service reporting or self-service analytics within the BI realm.

As a general proposition, self-service BI has been more myth than reality [7]. Forrester surveys, for example, indicate that IT still develops most BI applications. Of survey respondents in 2009, 70% responded that IT develops the enterprise’s reports and dashboards [8]. However, that figure is no longer 100%, as it was just a decade earlier, and some open source providers such as BIRT have had notable success in addressing a wide range of reporting needs within a typical application, ranging from operational or enterprise reporting to multi-dimensional online analytical processing (OLAP).

James Kobielus [8] is particularly bullish on the application of Web 2.0 “mashup” applications to knowledge worker purposes. Under this approach, Web-based applications are used and accessed directly by knowledge workers for charting and mapping purposes using Ajax or Flash widgets, such as Google Maps. The conventional BI and KM vendors have begun to move more aggressively into this area. Some notable new entrants, such as Tableau, Factual or Good Data, are also showing the way to more direct access, more flexible reporting and analysis widgets, and cleaner service or platform designs.

These initiatives reside at the display or reporting level. There is another group, including James Kobielus, Neil Raden and Seth Earley, who have addressed how to get disparate information to talk together using ontologies. They refer to “semanticizing” traditional practices such as master data management (MDM), “ontologizing” taxonomies, or adding Web 2.0 mashups to business intelligence. While these thoughts are moving in the right direction, and will bring incremental benefits, they still fall far short of the potentials at hand.

Self-service Information Management

So far, in the KM realm, the application of semantics has tended to be limited to information extraction (tagging) of text documents and first attempts at using ontologies. The tagging component is essential to enable the 80% of information presently in textual documents to become first-class citizens within business intelligence or knowledge management. The ontology efforts to date appear to be more like thin veneers over traditional taxonomies. Rather than hierarchical structures, we now see graph-oriented ones, but still intended to fulfill the same tasks of enterprise metadata and vocabulary lookups.

The ontology efforts especially are just nibbling around the edges of what can be done with semantic technologies. Rather than looking upon ontologies as just another dictionary (though that role is true), if we re-orient our thinking to make ontologies central to the KM function, a wealth of new opportunities and benefits arises.

A bit more than a year ago, we formulated the Seven Pillars of the Open Semantic Enterprise, which included ontologies and related pieces among the central components. In that article [9], we noted the particular applicability of semantic technologies to the information and knowledge management functions within enterprises. We asserted that embracing the open semantic enterprise gives the organization greater insights with lower risk, lower cost, faster deployment, and more agile responsiveness. Since that time we have been deploying such systems and documenting those benefits.

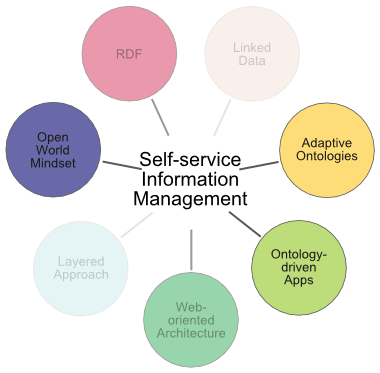

Integral to the seven pillars are those aspects that lead to the democratization of information for the knowledge worker, what combined might be called “self-service information management”. As the figure above shows, three of the seven pillars are essential building blocks to this capability, two pillars are further foundations to it, with the remaining two pillars only tangentially important.

What the combination of these pieces means is a fundamental change in how knowledge work is done. Through this approach, we can largely disintermediate IT from the knowledge function, can bring knowledge management directly into the hands of those who need it in real time, and fundamentally alter how knowledge management apps are designed and deployed. The best thing is these benefits are an incremental evolution, and retain the use and value of existing information assets.

Building Block #1: Adaptive Ontologies

Rather than peripheral lookup structures or thin veneers, ontologies play the central role in the design of self-service information management. We use the plural on purpose here: what is deployed is actually a library of complementary and modular ontologies that play a variety of roles. Combined, we call these libraries with their representative functions adaptive ontologies.

This library contains the expected and conventional domain ontologies. These represent the actual knowledge space for the domain at hand, and may be comprised of multiple different ontologies representing different domain or knowledge spaces. These standard semantic Web ontologies may range from the small and simple to the large and complex, and may perform the roles of defining relationships among concepts, integrating instance data, orienting to other knowledge and domains, or mapping to other schema.

From a best practices standpoint [10], we take special care in constructing these domain ontologies such that we provide labels and cues for user interfaces. Some of the user interface considerations that can be driven by adaptive ontologies include: attribute labels and tooltips; navigation and browsing structures and trees; menu structures; auto-completion of entered data; contextual dropdown list choices; spell checkers; online help systems; etc. We also include a variety of synonyms and aliases (the combination of which we call semsets) for referring to concepts and instances in multiple ways and for aiding information extraction and tagging functions. (In addition to organizing and helping to interoperate contributing information, these domain ontologies are also used for what is called ontology-based information extraction (OBIE) via our scones [11] system.)
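To make the semset idea concrete, here is a minimal sketch of how synonyms and aliases might drive tagging. It is not the scones implementation; the `SEMSETS` contents, the `ex:` concept identifiers and the `tag_text` function are all hypothetical names chosen for illustration.

```python
import re

# Hypothetical semsets: each concept maps to a preferred label plus aliases.
SEMSETS = {
    "ex:BusinessIntelligence": ["business intelligence", "BI"],
    "ex:KnowledgeManagement": ["knowledge management", "KM"],
}


def tag_text(text, semsets):
    """Return sorted (concept, matched_label) pairs for labels found in text."""
    tags = set()
    for concept, labels in semsets.items():
        for label in labels:
            # Whole-word, case-insensitive match on each label or alias.
            pattern = r"\b" + re.escape(label) + r"\b"
            if re.search(pattern, text, flags=re.IGNORECASE):
                tags.add((concept, label))
    return sorted(tags)


if __name__ == "__main__":
    for concept, label in tag_text("Our BI team supports knowledge management.", SEMSETS):
        print(concept, "matched via", label)
```

Because the aliases live with the concept rather than in the code, a knowledge worker could, under this assumption, extend what gets tagged simply by adding another label to a semset.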

In addition, the library of adaptive ontologies includes some administrative ontologies that guide how instance data can be imported and inter-related (via the Instance Record Object Notation, or irON); what information types drive what widgets (via the Semantic Component Ontology, or SCO); data mapping vocabularies (UMBEL Vocabulary); how to characterize datasets; and other potential specialty functionality.

A forthcoming article will describe the composition and modularity typically found in a library of these adaptive ontologies.

In combination, these adaptive ontologies are, in effect, the “brains” of the self-service system. The best aspect of these ontologies is that they can be understood, created and maintained by knowledge workers. They constitute the only specification (other than theming, if desired) necessary to create self-service knowledge management environments.

Building Block #2: Ontology-driven Apps

The pieces of the puzzle that implement the instruction sets within these adaptive ontologies are the ontology-driven apps, or ODapps. A recent article describes these structures in some detail [12].

ODapps are modular, generic software applications designed to operate in accordance with the specifications contained in the adaptive ontologies. ODapps fulfill specific generic tasks, consistent with their dedicated design to respond to adaptive ontologies. For example, current ontology-driven apps include imports and exports in various formats, dataset creation and management, data record creation and management, reporting, browsing, searching, data visualization and manipulation (through libraries of what we call semantic components), user access rights and permissions, and similar. These applications provide their specific functionality in response to the specifications in the ontologies fed to them.

ODapps are designed more similarly to widgets or API-based frameworks than to the dedicated software of the past, though the dedicated functionality (e.g., graphing, reporting, etc.) is obviously quite similar. The major change in these ontology-driven apps is to accommodate a relatively common abstraction layer that responds to the structure and conventions of the guiding ontologies. The major advantage is that single generic applications can supply shared functionality based on any properly constructed adaptive ontology.

Generic functionality in these ODapps includes things like filtering, setting value ranges, choosing the specific display view, and invoking or not various display templates (akin to the infoboxes on Wikipedia). By nature of the data and the ontologies submitted to them, the ODapp signals to the user or consumer what displays, views, filters or slices-and-dices might be available to them. Fed different data and different ontologies, the ODapp would signal the user differently.
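The signaling behavior described above can be sketched in a few lines. This is an illustration only, assuming a simple lookup from attribute types to widgets in the spirit of the SCO; `WIDGETS_BY_TYPE`, `available_widgets` and the type names are hypothetical and not the actual ODapp interfaces.

```python
# Hypothetical mapping from attribute types to the widgets that can display them.
WIDGETS_BY_TYPE = {
    "geo:latLong": ["map"],
    "xsd:decimal": ["bar chart", "line chart"],
    "xsd:date": ["timeline"],
}


def available_widgets(records, attribute_types, widgets_by_type=WIDGETS_BY_TYPE):
    """List the widgets usable for the attributes actually present in records."""
    present = {attr for record in records for attr in record}
    widgets = set()
    for attr in present:
        # Attributes without a declared type simply contribute no widgets.
        attr_type = attribute_types.get(attr)
        widgets.update(widgets_by_type.get(attr_type, []))
    return sorted(widgets)


if __name__ == "__main__":
    types = {"location": "geo:latLong", "revenue": "xsd:decimal", "founded": "xsd:date"}
    print(available_widgets([{"name": "Acme", "location": "45,-93", "revenue": "12.5"}], types))
    print(available_widgets([{"name": "Acme", "founded": "1999-01-01"}], types))
```

The function itself never changes; feeding it different records or a different type mapping changes which displays are offered, which is the sense in which the app is generic.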

Because of their generic design, driven by the ontologies, only a relatively small number of ODapps needs to be created. Once created with appropriate generic functionality, application development is essentially over. It is through the additions and changes to the adaptive ontologies — done by knowledge workers themselves — that new capability and structure gets exposed through these ontology-driven apps. This innovation shifts the locus from software and programming to data and knowledge structures.

This democratization of IT means that everything in the knowledge management realm can become self service. Users and consumers can create their own analyses; develop their own reports; and package and disseminate what they and their colleagues need, when they need it. Through ontology-driven apps and adaptive ontologies, we turn prior software engineering practice on its head.

Building Block #3: Open World Assumption

Integral to this design is the embrace of the open world assumption [13]. Though not a specific artifact, as are adaptive ontologies or ODapps, the open-world approach is the logical underpinning that allows consumers or knowledge workers to add new information to the system as it is discovered or scoped. This nuance may sound esoteric, but traditional KM systems have a very different underpinning that leads to some nasty implications.

Because the predominant share of KM systems are based on relational database systems, they embody a closed-world design. This works well for transaction systems or environments where the information domain is known and bounded, but does not apply to knowledge and changing information. Moreover, the schema that govern closed-world designs are brittle and hard to change and manage. It is this fact that has put KM squarely in the bailiwick of IT and has often led to delays and frustrations. Re-architecting or adding new schema views to an existing closed-world system can be fiendishly difficult.

This difficulty is a major reason why IT resists casual or constant changes to underlying data schema. Unfortunately, this makes these brittle schema difficult to extend and therefore generally unresponsive to changing and growing knowledge. As an environment for knowledge management, the relational data system and the closed-world approach are lousy foundations.
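The difference between the two assumptions can be shown with a deliberately simplified sketch. It is not how a real reasoner or database works; the `Answer` enum, both `ask_*` functions and the sample facts are hypothetical, and explicit negations are modeled as a plain set.

```python
from enum import Enum


class Answer(Enum):
    TRUE = "true"
    FALSE = "false"
    UNKNOWN = "unknown"


def ask_closed_world(facts, statement):
    """Closed world: anything not recorded is treated as false."""
    return Answer.TRUE if statement in facts else Answer.FALSE


def ask_open_world(facts, negated_facts, statement):
    """Open world: a statement is false only if explicitly negated."""
    if statement in facts:
        return Answer.TRUE
    if statement in negated_facts:
        return Answer.FALSE
    return Answer.UNKNOWN


if __name__ == "__main__":
    facts = {("Acme", "hasSupplier", "Bolt Co")}
    negated = {("Acme", "hasSupplier", "Nut Inc")}
    question = ("Acme", "hasSupplier", "Gear Ltd")
    print(ask_closed_world(facts, question))         # Answer.FALSE
    print(ask_open_world(facts, negated, question))  # Answer.UNKNOWN
```

Under the open-world reading, a missing statement is a gap to be filled rather than a denial, so newly discovered facts or attributes can be added without first revising a schema.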

Other Building Blocks

As the self-service information management diagram above shows, RDF and Web services are two further important foundations. RDF (Resource Description Framework) is the canonical data model upon which all input information is represented. This means that the ODapp tools and the adaptive ontologies can work off a single model of knowledge representation. The Web service and architecture component is also helpful in that it allows Web 2.0 technologies to be brought to bear and allows distributed sources and users for the KM system. This provides scalability and distributed applicability, including on smartphones.
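As a rough sketch of what a single canonical model buys, the example below reduces a structured record and a tag extracted from a document to the same subject-predicate-object form. Plain tuples stand in for RDF here, and the `record_to_triples` and `objects` helpers and all `ex:` identifiers are hypothetical.

```python
def record_to_triples(subject, record):
    """Turn one attribute-value record into (subject, predicate, object) triples."""
    return [(subject, predicate, value) for predicate, value in record.items()]


def objects(triples, subject, predicate):
    """Return every object asserted for a given subject and predicate."""
    return [o for s, p, o in triples if s == subject and p == predicate]


if __name__ == "__main__":
    # A row from a structured source and a tag from an unstructured one
    # end up in the same shape, so one query function serves both.
    triples = record_to_triples("ex:Acme", {"ex:revenue": 12.5, "ex:city": "Iowa City"})
    triples.append(("ex:Acme", "ex:mentionedIn", "ex:report-2010.txt"))
    print(objects(triples, "ex:Acme", "ex:city"))
    print(objects(triples, "ex:Acme", "ex:mentionedIn"))
```

A production system would use a real RDF store and vocabulary rather than tuples in a list, but the point carries over: tools written against the triple form need not care where the information originated.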

The other two pillars of the open semantic enterprise — the layered approach and linked data — are also helpful, but not necessarily integral to the KM and self-service perspectives presented herein.

Benefits from Self-service Information Management

The benefits and flexibilities from self-service information management extend from top to bottom; from creating data and content to publishing and deploying it. Here is a listing of available potentials for self-service, drawing comparison to the current conventional approach dependent on IT:

| Information Activity | Conventional Approach (IT) | Self-service Information Management |
| --- | --- | --- |
| Creating | | |
| Annotating | | |
| Analyzing | | |
| Reporting | | |
| Visualizing | | |
| Collaborating | | |
| Validating | | |
| Publishing | | |
| Re-purposing | | |
| New Functionality | | |
| Developing Apps | | |
| Dashboarding | | |

The fact that any source — internal or external — or format — unstructured, semi-structured and structured — can be brought together with semantic technologies is a qualitative boost over existing KM approaches. Further, all information is exposed in simple text formats, which means it can be readily manipulated and managed with easy to understand tools and applications. Reliance on open standards and languages by semantic technologies also leads to greater use and availability of open source systems.

In short, self-service information management approaches should be cheaper, faster, more responsive and more capable than current approaches.

Great Progress, with Ontology Management the Next Challenge

Given these perspectives, hearing someone tout data-driven applications or advocate ontologies merely for metadata matching sounds positively Neanderthal. The prospects we have with semantic technologies, ontology-driven apps, and self-service information management systems mean so much more. The prospect at hand is to remake the entire knowledge management function, in the process bringing all aspects from creating and distributing knowledge products into the direct hands of the user. This is truly the democratization of information!

The absolutely fantastic news is none of this is theoretical or in the future. All pieces are presently proven, working and in hand. This is a practical vision, ready today.

Granted, like any new innovation, especially one that is infrastructural and systems-oriented, there are some weak or less-developed parts. These current gaps and needs include:

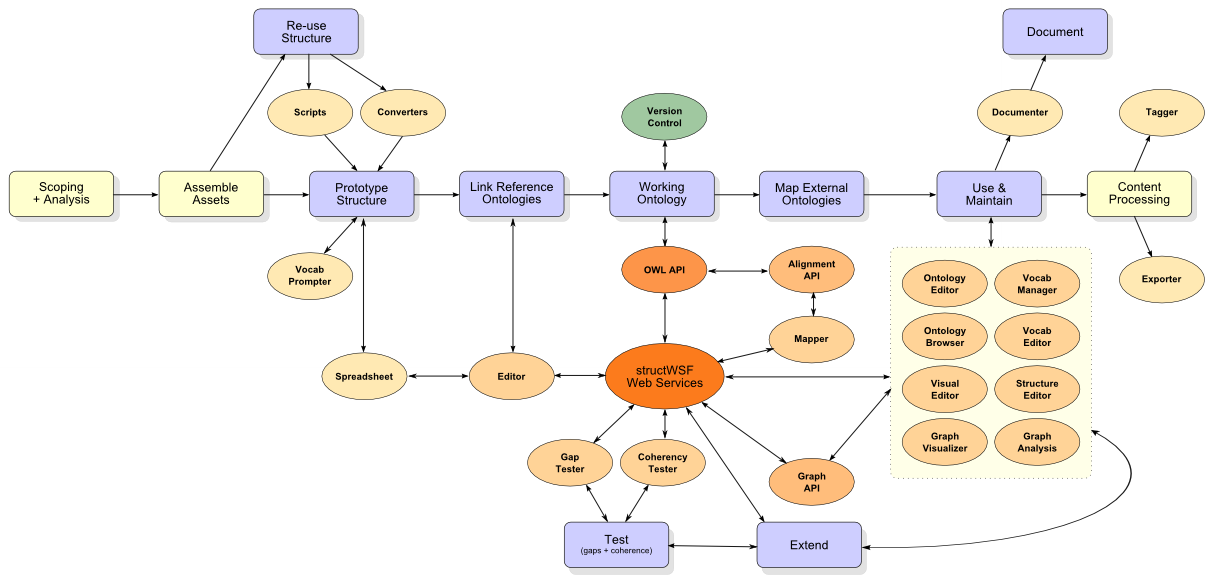

- Though tools exist, the state of tools to create, edit, manage, update, delete, map and validate ontologies could be greatly improved [14]. Because ontologies are the central drivers for ODapps, a simplification of tasks geared more to the knowledge worker, and not professional ontologists, is needed (see the diagram above for some of the needed functions). Some of these developments are underway, with more desired;

- A relatively complete starting set of about 20 ODapps widgets is presently available. However, more are needed and for different deployment environments. BI analysis remains one weak area, as is an Ajax-based library;

- The number of infobox templates is small, and better (WYSIWYG or graphical) create and manage utilities would be most useful; and

- User permission and authorization protocols exist, but are IP-based at present and could be beneficially expanded for different environments and use cases.

Yet, in the grand scheme of things, these gaps are relatively insignificant. The path and general architecture and design for moving forward are now clear.

Self-service information management via appropriately designed semantic technologies is now a reality. It promises to fulfill a vision of information access and control that has been frustrated for decades. We think these are exciting developments for the enterprise — and for the individual knowledge hound. We welcome your inquiries and invite you to join our open OSF group to contribute your ideas.

I think this is the first time I’ve been so quickly rewarded for hitting F5 a couple dozen times a day for the last 5 days…

I began a message to you recently,

I take that last statement back. I was about to sit down and begin the initial prototype of what I’ve learned to call “ontologies” from your blog. Not so long ago I was floored by reading “This Blogasbörd” and discovering in it almost blow by blow the exact same thought processes in which I had just laid out my concept of a component-based super-architecture to my software engineering partner – by hand, with pen and ink – except that your manifesto seemed to have something to do with the Internet, taxonomies and unusual idiomatic uses of words I’d not hitherto associated with computer science.

I can certainly attest to the fact that there is nothing remotely friendly enough to a prospective inexperienced end-user in the vast array of software tools that seem to have been made for IT and programmer colleagues who don’t really need to use a workflow-enhancing front end to engineer in already-familiar markup languages.

This post has convinced me, though, to redouble my efforts to acquire the skills needed to at least convey to my software developer friends how to permanently encode sufficient domain expertise into their UX such that they needn’t walk their target stakeholders through concepts so familiar within their metier that they find it impossible to un-learn their habits of thought and see it all as would someone just stepping off a boat from 1900.

Of course, my first daunting challenge is to get my coder partner to create a word processing plug-in to my specs which will allow me to begin to mark up my natural language neologisms and syllogisms with provisional URIs in a temporary, interim database so that the effort of typing up the remaining documentation I’ve hand-written in 23 spiral notebooks that haven’t yet been combined with the ludicrous swath of unstructured, non-semantically-annotated text documents flooding my file folders but instead becomes a way to coordinate normal human documentation, essays of design philosophy, use cases and architectural rationales while also creating at least the infant version of a controlled vocabulary of my own placeholder terminology which can loop back and provide hover-over pop-up referent-precis-presentations of my nonce terms’ definitions and taxonomic relationships – saving normal humans from attempting to parse technical specifications from just this sort of logorrhea, and allowing me to write in the succinct neologisms that condense entire paragraphs of abstruse and sadly standards-noncompliant ordinary human language without placing the burden of consulting a gigantic glossary at every glance.

In fact, apropos semantic metadata, the parallel perspectives available in a word processing plug-in of such features would gloss my provisional in-house neologisms with their associated reference concept plain text label as well as allowing developers and other technical stakeholders to simply peek at the underlying predicate logic – so quickly and easily embedded by me, a non-IT, non-info-science amateur with a project of unmanageably vast scope and concomitant data management needs, all for want of a single plug-in for a text editor.

I may have to skip over that particular desideratum and make a proof of concept “1st ever attempt at writing camel code XML gibberish” and, like a false flag cryptographer who pretends to not know the code they’re transmitting messages in has been cracked by the enemy, strike such pity as a SQL database expert can feel towards a fellow human and simply have me explain what my impressionist’s imitation of requirements spec document means face-to-face and then, like a magician, whip up a time-saving solution that’ll allow me to shave about 10,000 man-hours off my project duration estimate.

If you made it this far into what an overworked, neophyte ontology engineer has to say in the wee hours after another long night of abecedarian neologizing, I just want to give thanks again for your amazing blog which has either buttressed my outsider suspicions and naive “what ifs” every step of the way or granted me the exact piece of the puzzle I’d been missing, cogently providing by far the best explanations, expansive summarization and intellectual rationale for this odd new art which sadly seems to draw an inexcusably minuscule amount of attention from those looking in from the outside – i.e. those who most stand to gain by innovations in your field.

Thanks to you I stumbled onto the proper terms for all the architectural spandrels I’d been relying on to firm up the sand castles I’d been building on my isolated stretch of the territory of make-believe. If I had a jot of mental reserves left over to parse inscrutable installation instructions I’d attempt to install conStruct on my domain’s server for the fourth time.

P.S. You manage to make a topic seemingly incapable of being bandied about at a cocktail party of slide-rule sales engineers urgent and fascinating. I thank you again for your timely, much-anticipated words of wisdom.