Constant Change Makes Adopting New Practices and Learning New Tools Essential

Constant Change Makes Adopting New Practices and Learning New Tools Essential

My business partner, Fred Giasson, has an uncanny ability to sense where the puck is heading. Perhaps that is due to his French Canadian heritage and his love of hockey. I suspect rather it is due to his having his finger firmly on the pulse.

When I first met Fred ten years ago he was already a seasoned veteran of the early semantic Web, with new innovative services at the time such as PingTheSemanticWeb under his belt. More recently, as we at Structured Dynamics began broadening from semantic technologies to more general artificial intelligence, Fred did his patient, quiet research and picked Clojure as our new official development language. We had much invested in our prior code base; switching main programming languages is always one of the most serious decisions a software shop can make. The choice has been brilliant, and our productivity has risen substantially. We are also able to exploit fundamentally new potentials based on a functional programming language that runs in the Java VM and has intellectual roots in Lisp.

As our work continues to shift more to knowledge bases and their use for mapping, classification, tagging, learning, etc., our challenges have been of a still different nature. Knowledge bases used in this manner are not only inherently open world because of the changing knowledge base, but because in staging them for machine learners and training sets, test, build and maintenance scripts and steps are constantly changing. Dealing with knowledge management brings substantial technical debt, and systems and procedures must be in place to deal with that. Literate programming is one means to help.

Because of Clojure and its REPL abilities that enable code to be interpreted and run dynamically at time of input, we also have been looking seriously at the notebook paradigm that has come out of interactive science lab books, and now has such exemplar programs such as iPython Notebook (Jupyter), Org-mode, Wolfram Alpha, Zeppelin, Gorilla, and others [1]. Fred had been interested in and poking at literate programming for quite a while, but his testing and use of Org-mode to keep track of our constant tests and revisions led him to take the question more seriously. You can see this example, a more-detailed example, or still another example of literate programming from Fred’s blog.

Literate programming is even a greater change than switching programming languages. To do literate programming right, there needs to be a focused commitment. To do literate programming right, workflows need to change and new tools must be learned. Is the effort worth it?

Literate Programming

Literate programming is a style of writing code and documentation first proposed by Donald Knuth. As any aspect of a coding effort is written — including its tests, configurations, installation, deployment, maintenance or experiments — written narrative and documentation accompanies it, explaining what it is, the logic of it, and what it is doing and how to exercise it. This documentation far exceeds the best-practices of in-line code commenting.

Literate programming narratives might provide background and thinking about what is being tested or tried, design objectives, workflow steps, recipes or whatever. The style and scope of documentation are similar to what might be expected in a scientist’s or inventor’s lab notebook. Indeed, the breed of emerging electronic notebooks, combined with REPL coding approaches, now enable interactive execution of functions and visualization and manipulation of results, including supporting macros.

Systems that support literate programming, such as Org-mode, can “tangle” their documents to extract the code portions for compilation and execution. They can also “weave” their documents to extract all of the documentation formatted for human readability, including using HTML. Some electronic systems can process multiple programming languages and translate functions. Some electronic systems have built-in spreadsheets and graphing libraries, and most open-source systems can be extended (though with varying degrees of difficulty and in different languages). Some of the systems are designed to interact with or publish Web pages.

Code and programs do not reside in isolation. Their operation needs to be explained and understood by others for bug fixing, use or maintenance. If they are processing systems, there are parameters and input data that are the result of much testing and refinement; they may need to be refined further again. Systems must be installed and deployed. Libraries and languages are frequently being updated for security and performance reasons; executables and environments need to be updated as well. When systems are updated, there are tests that need to be run to check for continued expected performance and accuracy. The severity of some updates may require revision to whole portions of the underlying systems. New employees need tech transfer and training and managers need to know how to take responsibility for the systems. These are all needs that literate programming can help support.

One may argue that transaction systems in more stable environments may have a lesser requirement for literate programming. But, in any knowledge-intensive or knowledge management scenario. the inherent open world nature of knowledge makes something like literate programming an imperative. Everything is in constant flux with a positive need for ongoing updates.

The objective of programmers should not be solely to write code, but to write systems that can be used and re-used to meet desired purposes at acceptable cost. Documentation is integral to that objective. Early experiments need to be improved, codified and documented such that they can be re-implemented across time and environment. Any revision of code needs to be accompanied by a revision or update to documentation. A lines-of-code (LOC) mentality is counter-productive to effective software for knowledge purposes. Literate programming is meant to be the workflow most conducive to achieve these ends.

The Nature of Knowledge

For quite some time now I have made the repeated argument that the nature of knowledge and knowledge management functions compel an emphasis on the open world assumption (OWA) [2]. Though it is a granddaddy of knowledge bases, let’s take the English Wikipedia as an example of why literate programming makes sense for knowledge management purposes. Let’s first look at the nature of Wikipedia itself, and then look at (next section) the various ways it must be processed for KBAI (knowledge-based artificial intelligence) purposes.

The nature of knowledge is that it is constantly changing. We learn new things, understand more about existing things, see relations and connections between things, and find knowledge in other arenas that causes us to re-think what we already knew. Such changes are the definition of an “open world” and pose major challenges to keeping information and systems up to date as these changes constantly flow in the background.

We can illustrate these points by looking at the changes in Wikipedia over a 20-month period from October 2012 to June 2014 [3]. Though growth of Wikipedia has been slowing somewhat since its peak growth years, the kinds of changes seen over this period are fairly indicative of the qualitative and structural changes that constantly affect knowledge.

First, the number of articles in the English Wikipedia (the largest, but only one of the 200+ Wikipedia language versions) increased 12% to over 4.6 million articles over the 20-month period, or greater than 0.6% per month. Actual article changes were greater than this amount. Total churn over the period was about 15.3%, with 13.8% totally new articles and 1.5% deleted articles [3].

Second, even greater changes occurred in Wikipedia’s category structure. More than 25% of the categories were net additions over this period. Another 4% were deleted [3]. Fairly significant categorical changes continue because of a concerted effort by the project to address category problems in the system.

And, third, edits of individual articles continued apace. Over this same period, more than 65 million edits were made to existing English articles, or about 0.75 edits per article per month [3]. Many of these changes, such as link or category or infobox assignments, affect the attributes or characteristics of the article subject, which has a direct effect on KBAI efforts. Also, even text changes affect many of the NLP-based methods for analyzing the knowledge base.

Granted, Wikipedia is perhaps an extreme example given its size and prominence. But the kinds of qualitative and substantive changes we see — new information, deletion of old information, adding or changing specifics to existing information, or changing how information is connected and organized — are common occurrences in any knowledge base.

The implications of working with knowledge bases are clear. KBs are constantly in flux. Single-event, static processing is dated as soon as the procedures are run. The only way to manage and use KB information comes from a commitment to constant processing and updates. Further, with each processing event, more is learned about the nature of the underlying information that causes the processing scripts and methods to need tweaking and refinement. Without detailed documentation of what has been done with prior processing and why, it is impossible to know how to best tweak next steps or to avoid dead-ends or mistakes of the past. KBAI processing can not be cost-effective and responsive without a memory. Literate programming, properly done, provides just that.

The Nature of Systems to Manage Knowledge

Of course, KBAI may also involve multiple input sources, all moving at different speeds of change. There are also multiple steps involved in processing and updating the input information, the “systems”, if you will, required to stage and use the information for artificial intelligence purposes. The artifacts associated with these activities range from functional code and code scripts; to parameter, configuration and build files; to the documentation of those files and scripts; to the logic of the systems; to the process and steps followed to achieve desired results; and to the documentation of the tests and alternatives investigated at any stage in the process. The kicker is that all of these components, without a systematic approach, will need updates, and conventional (non-literate) coding approaches will not be remembered easily, causing costly re-discovery and re-work.

We have tallied up at least ten major steps associated with a processing pipeline for KBAI purposes. I briefly describe each below so to better gain a flavor of this overall flux that needs to be captured by literate programming.

1. Updating Changing Knowledge

The section above dealt with this step, which is ensuring that the input knowledge bases to the overall KBAI process are current and accurate. Depending on the nature of the KM system, there may be multiple input KBs involved, each demanding updates. Besides capturing the changes in the base information itself, many of the steps below may also be required to properly process this changing input knowledge.

2. Processing Input KBs

For KBAI purposes, input KBs must be processed so as to be machine readable. Processing is also desirable to expose features for machine learners and to do other clean up of the input sources, such as removal of administrative categories and articles, cleaning up category structures, consolidating similar or duplicative inputs into canonical forms, and the like.

3. Installing, Running and Updating the System

The KBs themselves require host databases or triple stores. Each of the processing steps may have functional code or scripts associated with it. All general management systems need to be installed, kept current, and secured. The management of system infrastructure sometimes requires a staff of its own, let alone install, deploy, monitoring and update systems.

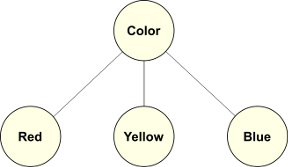

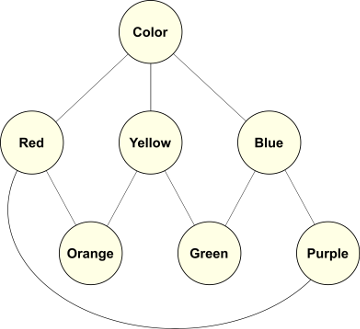

4. Testing and Vetting Placements

New entities and types added to the knowledge base need to be placed into the overall knowledge graph and tested for logical placement and connections. Though final placements should be manually verified, the sheer number of concepts in the system places a premium on semi-automatic tests and placements. Placement metrics are also important to help screen candidates.

5. Testing and Vetting Mappings

One key aspect of KBAI is its use in interoperating with other datasets and knowledge bases. As a result, new or updated concepts in the KB need to be tested and mapped with appropriate mapping predicates to external or supporting KBs. In the case of UMBEL, Structured Dynamics routinely attempts to map all concepts to Wikipedia (DBpedia), Cyc and Wikidata. Any changes to the base KB causes all of these mappings to be re-investigated and confirmed.

6. Testing and Vetting Assertions

Testing does not end with placements and mappings. Concepts are often characterized by attributes and values; they are often given internal assignments such as SuperTypes; and all of these assertions must be tested against what already exists in the KB. Though the tests may individually be fairly straightforward, there are thousands to test and cross-consistency is important. Each of these assertions is subject to unit tests.

7. Ensuring Completeness

As we have noted elsewhere, our KBAI best practices call for each new concept in the KB to be accompanied by a definition, complete characterization and connections, and synonyms or synsets to aid in NLP tasks. These requirements, too, require scripts and systems for completion.

8. Testing and Vetting Coherence

As the broader structure is built and extended, system tests are applied to ensure the overall graph remains coherent and that outliers, orphans and fragments are addressed. Some of this testing is done via component typologies, and some occurs using various network and graph analyses. Possible problems need to be flagged and presented for manual inspection. Like other manual vetting requirements, confidence scoring and ranking of problems and candidates speed up the screening process.

9. Generating Training Sets

A key objective of the KBAI approach is the creating of positive and negative training sets. Candidates need to be generated; they need to be scored and tested; and their final acceptance needs to be vetted. Once vetted, the training sets themselves may need to be expressed in different formats or structures (such as finite state transducers, one of the techniques we often use) in order for them to be performant in actual analysis or use.

10. Testing and Vetting Learners

Machine learners can then be applied to the various features and training sets produced by the system. Each learning application involves the testing of one or more learners; the varying of input feature or training sets; and the testing of various processing thresholds and parameters (including possibly white and black lists). This set of requirements is one of the most intensive on this listing, and definitely requires documentation of test results, alternatives tested, and other observations useful to cost-effective application.

Rinse and Repeat

Each of these 10 steps is not a static event. Rather, given the constant change inherent to knowledge sources, the entire workflow must be repeated on a periodic basis. In order to reduce the tension between updating effort and current accuracy, the greater automation of steps with complete documentation is essential. A lack of automation leads to outdated systems because of the effort and delays in updates. The imperative for automation, then, is a function of the change frequency in the input KBs.

KBAI, perhaps at the pinnacle of knowledge management services, requires more of these steps and perhaps more frequent updates. But any knowledge management activity will incur a portion of these management requirements.

Yes, Literate Programming is Worth It

As I stated in a prior article in this series [4], “The only sane way to tackle knowledge bases at these structural levels is to seek consistent design patterns that are easier to test, maintain and update. Open world systems must embrace repeatable and largely automated workflow processes, plus a commitment to timely updates, to deal with the constant, underlying change in knowledge.” Literate programming is, we have come to believe, one of the integral ways to keep sane.

The effort to adopt literate programming is justified. But, as Fred noted in one of his recent posts, literate programming does impose a cost on teams and requires some cultural and mindset changes. However, in the context of KBAI, these are not simply nice-to-haves, they are imperatives.

Choice of tools and systems thus becomes important in supporting a literate programming environment. As Fred has noted, he has chosen Org-mode for Structured Dynamics’ literate programming efforts. Besides Org-mode, that also (generally) requires adoption by programmers of the Emacs editor. Both of these tools are a bit problematic for non-programmers.

Though no literate programming tools yet support WOPE (write once, publish everywhere), they can make much progress toward that goal. By “weaving” we can create standalone documentation. With the converter tool Pandoc, we can make (mostly) accurate translations of documents in dozens of formats against one another. The system is open and can be extended. Pandoc works best with lightweight markup formats like Org-mode, Markdown, wikitext, Textile, and others.

We’re still working hard on the tooling infrastructure surrounding literate programming. We like the interactive notebooks approach, and also want easy and straightforward ways to deploy code snippets, demos and interactive Web pages.

Because of the factors outlined in this article, we see a renewed emphasis on literate programming. That, combined with the Web and its continued innovations, would appear to point to a future rich in responsive tooling and workflows geared to the knowledge economy.

[1] Other known open source electronic lab notebook options include

Beaker Notebook,

Flow,

nteract,

OpenWetWare,

Pineapple,

Rodeo,

RStudio,

SageMathCloud,

Session,

Shiny,

Spark Notebook, and

Spyder, among others certainly missed. Terminology for these apps includes notebook, electronic lab notebook, data notebook, and data scientist notebook.

[2] See M. K. Bergman, 2009. “

The Open World Assumption: Elephant in the Room,”

AI3:::Adaptive Information blog, December 21, 2009. The open world assumption (OWA) generally asserts that the lack of a given assertion or fact being available does not imply whether that possible assertion is true or false: it simply is not known. In other words, lack of knowledge does not imply falsity. Another way to say it is that everything is permitted until it is prohibited. OWA lends itself to incremental and incomplete approaches to various modeling problems.

OWA is a formal logic assumption that the truth-value of a statement is independent of whether or not it is known by any single observer or agent to be true. OWA is used in knowledge representation to codify the informal notion that in general no single agent or observer has complete knowledge, and therefore cannot make the closed world assumption. The OWA limits the kinds of inference and deductions an agent can make to those that follow from statements that are known to the agent to be true. OWA is useful when we represent knowledge within a system as we discover it, and where we cannot guarantee that we have discovered or will discover complete information. In the OWA, statements about knowledge that are not included in or inferred from the knowledge explicitly recorded in the system may be considered unknown, rather than wrong or false. Semantic Web languages such as OWL make the open world assumption.

Discusses the Role of Order and Complexity in Knowledge Systems

Discusses the Role of Order and Complexity in Knowledge Systems

Constant Change Makes Adopting New Practices and Learning New Tools Essential

Constant Change Makes Adopting New Practices and Learning New Tools Essential Long-lost Global Warming Paper is Still Pretty Good

Long-lost Global Warming Paper is Still Pretty Good