Builds Are a More Complicated Workflow than Extractions

In installment CWPK #37 to this Cooking with Python and KBpedia series, we looked at roundtripping information flows and their relation to versions and broad directory structure. From a strict order-of-execution basis, one of the first steps in the build process is to check and vet the input files to make sure they are clean and conformant. Let’s keep that thought in mind; it is a topic we will address in CWPK #45. For now we will skip over it so that we do not add complexity while working out the backbone of what a build needs.

I have been using the single term “build” to skirt around a number of issues (or options). We can “build” our system from scratch every time, which is conceptually the simplest option in that we go from A to Z, processing every necessary point in between. But sometimes a “build” is not a wholesale replacement, but an incremental one. It might be incremental because we are simply adding, say, a single new typology or doing other interim updates. The routines or methods we need to write should accommodate these real-world use cases.

We also have the question of vetting our builds to ensure they are syntactically and logically coherent. The actual process of completing a vetted, ‘successful’ build may require multiple iterations and successively more refined tweaks to the input specifications in order to get our knowledge graphs to a point of ‘acceptance’. These last iterating steps of successive refinements all follow the same “build” steps, but ones which involve fewer and fewer fixes until ‘acceptance’ when the knowledge graph is deemed ready for public release. In my own experience following these general build steps over nearly a decade now, there may be tens or more “builds” necessary to bring a new version to public release. These steps may not be needed in your own circumstance, but a useful generic build process should anticipate them.

Further, as we explore these issues in some depth, we will find weaknesses or problems in our earlier extraction flows. We still want to find generic patterns and routines, but adding the ones related to “builds” will also cause some reachback into the assumptions and approaches we used earlier for extractions (CWPK #29 to CWPK #35). This installment thus begins with input-output (I/O) considerations from this “build” perspective.

Basic I/O Considerations

It seems like I spend much time in this series on code and directory architecting. I’m sure one reason is that it is easier for me to write than code! But, seriously, it is also the case that thinking through use cases or trying to capture prior workflows is the best way I know to write responsive code. So, in the case of our first leg regarding extraction, we had a fairly simple task: we had information in our knowledge graphs that we needed to get out in a usable file format for external applications (and roundtripping). If our file names and directory locations were not exactly correct, no big deal: We can easily manipulate these flat-text files and move them to other places as needed.

That is not so with builds. First, we can only ingest external files in a build if they can be read and “understood” by our knowledge graph. Second, as I touched on above, sometimes these builds may be total (or ‘full’ or ‘start’) and sometimes they may be incremental (or ‘fix’). We want generic input-and-output routines to reflect these differences.

In a ‘full’ build scenario, we need to start with a core, bootstrap, skeletal ontology to which we add concepts (classes) and predicates (properties) until the structure is complete (or nearly so), and then to add annotations to all of that input. In a ‘fix’ build scenario, we need to start with an already constructed ‘full’ graph, and then make modifications (updates, deletes, additions) to it. How much cleaning or logical testing we may do may vary by these steps. We also need to invoke and write files to differing locations to embrace these options.

To make this complexity a bit simpler, like we have done before, we will make a couple of simplifying choices. First, we will use a directory where all build input files reside, which we call build_ins. This directory is the location where we first put the files extracted from a prior version to be used as the starting basis for the new version (see Figure 2 in CWPK #37). It is also the directory where we place our starting ontology files, the stubs, that bootstrap the locations for new properties and classes to be added. We also place our fixes inputs into this directory.

Second, the result of our various build steps will generally be placed into a single sub-directory, the targets directory. This directory is the source for all completed builds used for analysis and extractions for external uses and new builds. It is also the source of the knowledge graph input when we are in an incremental update or ‘fix’ mode, since we desire to modify the current build in-progress, not always start from scratch. The targets directory is also the appropriate location for logging, statistics, and working ‘scratchpad’ subdirectories while we are working on a given build.

To this structure I also add a sandbox directory for experiments, etc., that do not fall within a conventional build paradigm. The sandbox material can either be total scratch or copied manually to other locations if there is some other value.

Please see Figure 2 in CWPK #37 to see the complete enumeration of these directory structures.

Basic I/O Routines

Similar to what we did with the extraction side of the roundtrip, we will begin our structural builds (and the annotation ones two installments hence) in the interactive format of Jupyter Notebook. We will be able to progress cell-by-cell, running each cell or invoking it with the shift+enter convention. After our cleaning routines in CWPK #45, we will then be able to embed these interactive routines into build and clean modules in CWPK #47 as part of the cowpoke package.

From the get-go with the build module we need to have a more flexible load routine for cowpoke that enables us to specify different sources and targets for the specific build, the inputs-outputs, or I/O. We had already discovered in the extraction routines that we needed to bring three ontologies into our project namespace: KKO, the reference concepts of KBpedia, and SKOS. We may also need to differentiate ‘start’ versus ‘fix’ wrinkles in our builds. That leads to three different combinations of source and target (‘standard’, which is the same as ‘fixes’; ‘start’; and our optional ‘sandbox’) for our basic “build” I/O:

    from owlready2 import *
    from cowpoke.config import *
    # from cowpoke.__main__ import *
    import csv                                  # we import all modules used in subsequent steps

    world = World()

    kko = []
    kb = []
    rc = []
    core = []
    skos = []
    kb_src = build_deck.get('kb_src')           # we get the build setting from config.py

    if kb_src is None:
        kb_src = 'standard'
    if kb_src == 'sandbox':
        kbpedia = 'C:/1-PythonProjects/kbpedia/sandbox/kbpedia_reference_concepts.owl'
        kko_file = 'C:/1-PythonProjects/kbpedia/sandbox/kko.owl'
    elif kb_src == 'standard':
        kbpedia = 'C:/1-PythonProjects/kbpedia/v300/targets/ontologies/kbpedia_reference_concepts.owl'
        kko_file = 'C:/1-PythonProjects/kbpedia/v300/build_ins/stubs/kko.owl'
    elif kb_src == 'start':
        kbpedia = 'C:/1-PythonProjects/kbpedia/v300/build_ins/stubs/kbpedia_rc_stub.owl'
        kko_file = 'C:/1-PythonProjects/kbpedia/v300/build_ins/stubs/kko.owl'
    else:
        print('You have entered an inaccurate source parameter for the build.')
    skos_file = 'http://www.w3.org/2004/02/skos/core'

(NOTE: We later add an ‘extract’ option to the above to integrate this with our earlier extraction routines.)
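One variation worth considering is a lookup table in place of the if/elif chain. The sketch below is only an illustration and not part of cowpoke: the function name `build_sources` and the constants `ROOT` and `VERSION` are hypothetical, while the paths are the ones listed above. It also raises an error on an unknown switch value rather than printing a message, on the assumption that failing early is preferable to reaching a later cell with `kbpedia` undefined.

```python
# Hypothetical helper, not part of cowpoke; paths copied from the listing above.
ROOT = 'C:/1-PythonProjects/kbpedia'
VERSION = 'v300'


def build_sources(kb_src=None):
    """Return the (kbpedia, kko_file) paths for a given build switch."""
    if kb_src is None:
        kb_src = 'standard'                      # same default as the listing above
    stubs = f'{ROOT}/{VERSION}/build_ins/stubs'
    choices = {
        'sandbox': (f'{ROOT}/sandbox/kbpedia_reference_concepts.owl',
                    f'{ROOT}/sandbox/kko.owl'),
        'standard': (f'{ROOT}/{VERSION}/targets/ontologies/kbpedia_reference_concepts.owl',
                     f'{stubs}/kko.owl'),
        'start': (f'{stubs}/kbpedia_rc_stub.owl',
                  f'{stubs}/kko.owl'),
    }
    if kb_src not in choices:
        # Assumption: stop here instead of printing and continuing
        raise ValueError(f'Unknown build source: {kb_src!r}')
    return choices[kb_src]


kbpedia, kko_file = build_sources('start')
```

With this form, adding a further option (such as the ‘extract’ one mentioned in the note above) would mean adding one more dictionary entry.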

As I covered in CWPK #21, two tricky areas in this project are related to scope. The first tricky area relates to the internal Python scope of LEGB, which stands for local → enclosing → global → built-in. Python looks up a name in that order, which means that names defined in a scope to the right of an arrow are visible from the scopes to its left, but not the reverse. Care is thus needed about how information gets passed between Python program components. So, yes, a bit of that trickiness is in play with this installment, but the broader issues pertain to the second tricky area.
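As a quick refresher on the LEGB lookup order, here is a small stand-alone sketch. All of the function and variable names are made up for illustration; the last function shows why a loop variable named `id` hides Python's built-in `id()` within its own scope.

```python
label = 'global'                 # G: module-level name


def outer():
    label = 'enclosing'          # E: local to outer(), enclosing for the inner functions

    def inner_with_local():
        label = 'local'          # L: found first, hides the enclosing and global names
        return label

    def inner_without_local():
        return label             # no local name, so Python finds the enclosing one

    return inner_with_local(), inner_without_local()


def reads_global():
    return label                 # no local or enclosing name, so the global is used


def uses_builtin():
    return len('abc')            # B: 'len' is found last, in the built-in scope


def shadows_builtin():
    id = 'rc.AudioInfo'          # a local named 'id' hides the built-in id() here
    return id
```

Calling `outer()` returns both the local and the enclosing value, while the module-level `label` is never touched by either inner function.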

The second area is the interplay of imports, ontologies, and namespaces within owlready2, plus its own internal ‘world’ namespace.

I have struggled to get these distinctions right, and I’m still not sure I have all of the nuances down or correct. But, here are some things I have learned in cowpoke.

First, when loading an ontology, I give it a ‘world’ namespace assigned to the ‘World’ internal global namespace for owlready2. Since I am only doing cowpoke-related development in Python at a given time, I can afford to claim the entire space and perhaps lessen other naming problems. Maybe this is superfluous, but I have found it to be a recipe that works for me.

Second, when one imports an ontology into the working ontology (declaring the working ontology being step one), all ontologies available to the import are available to the working ontology. However, if one wants to modify or add items to these imported ontologies, each one needs to be explicitly declared, as is done for skos and kko in our current effort.

Third, it is essential to declare the namespaces for these imports under the current working ontology. Then, from that point forward, it is also essential to be cognizant that these separate namespaces need to be addressed explicitly. In the case of cowpoke and KBpedia, for example, we have classes from our governing upper ontology, KKO (also with namespace ‘kko’) and the reference concepts of the full KBpedia (namespace ‘rc’). More than one namespace in the working ontology does complicate matters quite a bit, but that is also the more realistic architecture and design approach. Part of the nature of semantic technologies is to promote interoperability among multiple knowledge graphs or ontologies, each of which will have at least one of its own namespaces. To do meaningful work across ontologies, it is important to understand these ontology ↔ namespace distinctions.

This is how these assignments needed to work out for our build routines based on these considerations:

    kb = world.get_ontology(kbpedia).load()
    rc = kb.get_namespace('http://kbpedia.org/kko/rc/')           # need to make sure we set the namespace

    skos = world.get_ontology(skos_file).load()
    kb.imported_ontologies.append(skos)
    core = world.get_namespace('http://www.w3.org/2004/02/skos/core#')

    kko = world.get_ontology(kko_file).load()
    kb.imported_ontologies.append(kko)
    kko = kb.get_namespace('http://kbpedia.org/ontologies/kko#')  # need to assign namespace to main onto ('kb')

Now that we have set up our initial build switches and defined our ontologies and related namespaces, we are ready to construct the code for our first build attempt. In this instance, we will be working with only a single class structure input file to the build, typol_AudioInfo.csv, which according to our ‘start’ build switch (see above) is found in the kbpedia/v300/build_ins/typologies/ directory under our project location.

The routine below needs to go through three different passes (at least as I have naively specified it!), and is fairly complicated. There are quite a few notes below the code listing explaining some of these steps. Also note we will be defining this code block as a function, and the import types statement will be moved to the header in our eventual build module:

    import types

    src_file = 'C:/1-PythonProjects/kbpedia/v300/build_ins/typologies/typol_AudioInfo.csv'
    kko_list = typol_dict.values()
    with open(src_file, 'r', encoding='utf8') as csv_file:                 # Note 1
        is_first_row = True
        reader = csv.DictReader(csv_file, delimiter=',', fieldnames=['id', 'subClassOf', 'parent'])
        for row in reader:                                                 ## Note 2: Pass 1: register class
            id = row['id']                                                 # Note 3
            parent = row['parent']                                         # Note 3
            id = id.replace('http://kbpedia.org/kko/rc/', 'rc.')           # Note 4
            id = id.replace('http://kbpedia.org/ontologies/kko#', 'kko.')
            id_frag = id.replace('rc.', '')
            id_frag = id_frag.replace('kko.', '')
            parent = parent.replace('http://kbpedia.org/kko/rc/', 'rc.')
            parent = parent.replace('http://kbpedia.org/ontologies/kko#', 'kko.')
            parent = parent.replace('owl:', 'owl.')
            parent_frag = parent.replace('rc.', '')
            parent_frag = parent_frag.replace('kko.', '')
            parent_frag = parent_frag.replace('owl.', '')
            if is_first_row:                                               # Note 5
                is_first_row = False
                continue
            with rc:                                                       # Note 6
                kko_id = None
                kko_frag = None
                if parent_frag == 'Thing':                                 # Note 7
                    if id in kko_list:                                     # Note 8
                        kko_id = id
                        kko_frag = id_frag
                    else:
                        id = types.new_class(id_frag, (Thing,))            # Note 6
            if kko_id is not None:                                         # Note 8
                with kko:                                                  # same form as Note 6
                    kko_id = types.new_class(kko_frag, (Thing,))
    with open(src_file, 'r', encoding='utf8') as csv_file:
        is_first_row = True
        reader = csv.DictReader(csv_file, delimiter=',', fieldnames=['id', 'subClassOf', 'parent'])
        for row in reader:                                                 ## Note 2: Pass 2: assign parent
            id = row['id']
            parent = row['parent']
            id = id.replace('http://kbpedia.org/kko/rc/', 'rc.')           # Note 4
            id = id.replace('http://kbpedia.org/ontologies/kko#', 'kko.')
            id_frag = id.replace('rc.', '')
            id_frag = id_frag.replace('kko.', '')
            parent = parent.replace('http://kbpedia.org/kko/rc/', 'rc.')
            parent = parent.replace('http://kbpedia.org/ontologies/kko#', 'kko.')
            parent = parent.replace('owl:', 'owl.')
            parent_frag = parent.replace('rc.', '')
            parent_frag = parent_frag.replace('kko.', '')
            parent_frag = parent_frag.replace('owl.', '')
            if is_first_row:
                is_first_row = False
                continue
            with rc:
                kko_id = None                                              # Note 9
                kko_frag = None
                kko_parent = None
                kko_parent_frag = None
                if parent_frag != 'Thing':                                 # Note 10
                    if parent in kko_list:
                        kko_id = id
                        kko_frag = id_frag
                        kko_parent = parent
                        kko_parent_frag = parent_frag
                    else:
                        var1 = getattr(rc, id_frag)                        # Note 11
                        var2 = getattr(rc, parent_frag)
                        if var2 is None:                                   # Note 12
                            continue
                        else:
                            var1.is_a.append(var2)                         # Note 13
            if kko_parent is not None:                                     # Note 14
                with kko:
                    if kko_id in kko_list:                                 # Note 15
                        continue
                    else:
                        var1 = getattr(rc, kko_frag)                       # Note 16
                        var2 = getattr(kko, kko_parent_frag)
                        var1.is_a.append(var2)
    thing_list = []                                                        # Note 17
    with open(src_file, 'r', encoding='utf8') as csv_file:
        is_first_row = True
        reader = csv.DictReader(csv_file, delimiter=',', fieldnames=['id', 'subClassOf', 'parent'])
        for row in reader:                                                 ## Note 2: Pass 3: remove owl.Thing
            id = row['id']
            parent = row['parent']
            id = id.replace('http://kbpedia.org/kko/rc/', 'rc.')           # Note 4
            id = id.replace('http://kbpedia.org/ontologies/kko#', 'kko.')
            id_frag = id.replace('rc.', '')
            id_frag = id_frag.replace('kko.', '')
            parent = parent.replace('http://kbpedia.org/kko/rc/', 'rc.')
            parent = parent.replace('http://kbpedia.org/ontologies/kko#', 'kko.')
            parent = parent.replace('owl:', 'owl.')
            parent_frag = parent.replace('rc.', '')
            parent_frag = parent_frag.replace('kko.', '')
            parent_frag = parent_frag.replace('owl.', '')
            if is_first_row:
                is_first_row = False
                continue
            if parent_frag == 'Thing':                                     # Note 18
                if id in thing_list:                                       # Note 17
                    continue
                else:
                    if id in kko_list:                                     # Note 19
                        var1 = getattr(kko, id_frag)
                        thing_list.append(id)
                    else:                                                  # Note 19
                        var1 = getattr(rc, id_frag)
                        var1.is_a.remove(owl.Thing)
                        thing_list.append(id)

The code block above was the most challenging to date in this CWPK series. Some of the lessons from working this out are offered in CWPK #21. Here are the notes that correspond to some of the statements made in the code above:

-

This is a fairly standard CSV processing routine. However, note the ‘fieldnames’ that are assigned, which give us a basis as the routine proceeds to pick out individual column values by row

-

Each file processed requires three passes: Pass #1 – registers each new item in the source file as a bona fide

owl:Class; Pass #2 – each new item, now properly registered to the system, is assigned its parent class; and Pass #3 – each of the new items has its direct assignment toowl:Classremoved to provide a cleaner hierarchy layout -

We are assigning each row value to a local variable for processing during the loop

-

In this, and in the lines to follow, we are reducing the class string and its parent string from potentially its full IRI string to prefix + Name. This gives us the flexibility to have different format input files. We will eventually pull this repeated code each loop out into its own function

-

This is a standard approach in CSV file processing to skip the first header row in the file

-

There are a few methods apparently possible in owlready2 for assigning a class, but this form of looping over the ontology using the ‘

rc‘ namespace is the only version I was able to get to work successfully, with the assignment statement as shown in the second part of this method. Note the assignment to ‘Thing’ is in the form of a tuple, which is why there is a trailing comma -

Via this check, we only pick up the initial class declarations in our input file, and skip over all of the others that set actual direct parents (which we deal with in Pass #2)

-

We check all of our input roles to see if the row class is already in our kko dictionary (kko_list, set above the routine) or not. If it is a

kko.Class, we assign the row information to a new variable, which we then process outside of the ‘rc’ loop so as to not get the namespaces confused -

Initializing all of this loops variables to ‘None’

-

Same processing checks as for Pass #1, except now we are checking on the parent values

-

This is an owlready2 tip, and a critical one, for getting a class type value from a string input; without this, the class assignment method (Note 13) fails

-

If var2 is not in the ‘

rc‘ namespace (in other words, it is in ‘kko‘, we skip the parent assignment in the ‘rc‘ loop -

This is another owlready2 method for assigning a class to a parent class. In this loop given the checks performed, both parent and id are in the ‘

rc‘ namespace -

As for Pass #1, we are now processing the ‘

kko‘ namespace items outside of the ‘rc‘ namespace and in its own ‘kko‘ namespace -

We earlier picked up rows with parents in the ‘

kko‘ namespace; via this call, we also exclude rows with a ‘kko‘ id as well, since our imported KKO ontology already has all kko class assignments set -

We use the same parent class assignment method as in Note #11, but now for ids in the ‘

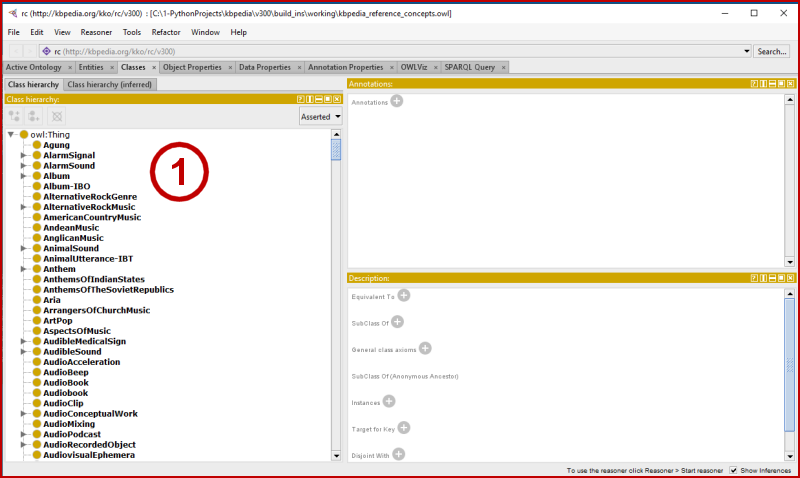

rc‘ namespace and parents in the ‘kko‘ namespace. However, the routine so far also results in a long listing of classes directly underowl:Thingroot (1) in an ontology editor such as Protégé:

-

We use a ‘thing_list’, and assign it as an empty set at the beginning of the Pass #3 routine, because we will be deleting class assignments to

owl:Thing. There may be multiple declarations in our build file, but we only may delete the assignment once from the knowledge base. The lookup to ‘thing_list’ prevents us from erroring when trying to delete for a second or more times -

We are selecting on ‘Thing’ because we want to unassign all of the temporary

owl:Thingclass assignments needed to provide placeholders in Pass #1 (Note: recall in our structure extractor routines in CWPK #28 we added an extra assignment to add anowl:Thingclass definition so that all classes in the extracted files could be recognized and loaded by external ontology editors) -

We differentiate between ‘

rc‘ and ‘kko‘ concepts because the kko are defined separated in the KKO ontology, used as one of our build stubs.
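Note 4 mentions eventually pulling the repeated string handling out into its own function. A minimal sketch of what that might look like follows. The name `shorten` and the `PREFIXES` table are hypothetical, and the replacements simply mirror the `replace()` calls in the routine above (with the ‘owl:’ substitution also applied to ids, which the routine above only applies to parents).

```python
# Hypothetical helper; the prefix table mirrors the replace() calls in the routine above.
PREFIXES = (
    ('http://kbpedia.org/kko/rc/', 'rc.'),
    ('http://kbpedia.org/ontologies/kko#', 'kko.'),
    ('owl:', 'owl.'),
)


def shorten(value):
    """Return (prefixed name, bare fragment) for a full IRI or an already prefixed name."""
    for long_form, prefix in PREFIXES:
        value = value.replace(long_form, prefix)     # full IRI -> prefix + Name
    frag = value
    for _, prefix in PREFIXES:
        frag = frag.replace(prefix, '')              # prefix + Name -> Name
    return value, frag


# Example use inside one of the passes (row comes from csv.DictReader):
row = {'id': 'http://kbpedia.org/kko/rc/AudioInfo', 'parent': 'owl:Thing'}
id_, id_frag = shorten(row['id'])
parent, parent_frag = shorten(row['parent'])
```

Each of the three passes could then replace its twelve lines of string handling with the two calls shown at the end. The variable is named `id_` here only to avoid hiding Python's built-in `id()`.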

As you run this routine in real time from Jupyter Notebook, you can inspect what has been removed with:

    list(thing_list)

We can now inspect this loading of an individual typology into our stub. We need to preface our ‘save’ statement with the ‘kb’ ontology identifier. I also have chosen to use the ‘working’ directory for saving these temporary results:

    kb.save(file=r'C:/1-PythonProjects/kbpedia/v300/build_ins/working/kbpedia_reference_concepts.owl', format="rdfxml")

So, phew! After much time and trial, I was able to get this code running successfully! Here is the output of the full routine:

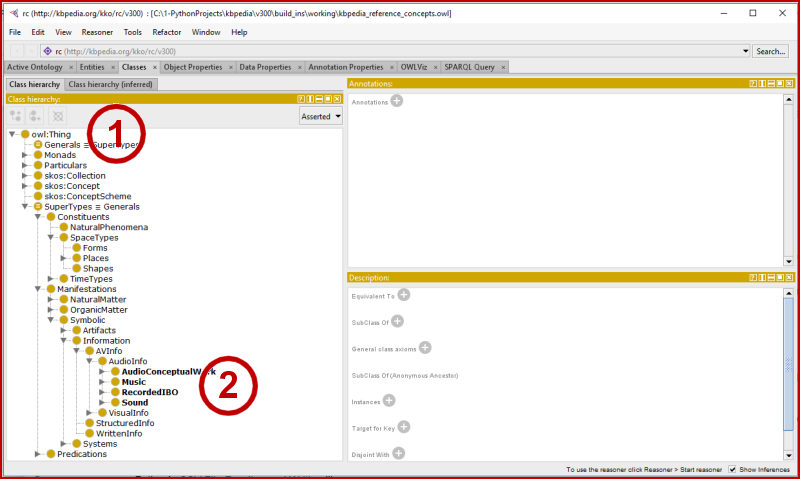

We can see that our flat listing under the root is now gone (1) and all concepts are properly organized according to the proper structure in the KKO hierarchy (2).
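For readers who want to see the three-pass logic without owlready2 in the picture, here is a toy version that runs on the standard library alone. It is only a sketch under stated assumptions: a plain dictionary of parent lists stands in for the knowledge graph, the sample rows are invented rather than taken from typol_AudioInfo.csv, the function names are hypothetical, and the ‘kko’ namespace handling is left out entirely. The third pass also keeps the placeholder when it is the only parent and checks that it is present before removing it.

```python
import csv
import io

# Invented sample rows in the same three-column layout as the build files
SAMPLE = """id,subClassOf,parent
rc.AudioInfo,rdfs:subClassOf,owl:Thing
rc.Music,rdfs:subClassOf,owl:Thing
rc.Music,rdfs:subClassOf,rc.AudioInfo
rc.Song,rdfs:subClassOf,owl:Thing
rc.Song,rdfs:subClassOf,rc.Music
"""


def read_rows(text):
    """Read rows with explicit fieldnames, skipping the header line."""
    reader = csv.DictReader(io.StringIO(text),
                            fieldnames=['id', 'subClassOf', 'parent'])
    next(reader)          # with explicit fieldnames the header arrives as a data row
    return list(reader)


def build_hierarchy(text):
    """Return {class: [parents]} after the three passes."""
    rows = read_rows(text)
    is_a = {}
    for row in rows:                                  # Pass 1: register each class
        if row['parent'] == 'owl:Thing':
            is_a.setdefault(row['id'], ['owl:Thing'])
    for row in rows:                                  # Pass 2: assign real parents
        if row['parent'] != 'owl:Thing':
            parents = is_a[row['id']]
            if row['parent'] not in parents:
                parents.append(row['parent'])
    for row in rows:                                  # Pass 3: drop the placeholder
        if row['parent'] == 'owl:Thing':
            parents = is_a[row['id']]
            if 'owl:Thing' in parents and len(parents) > 1:
                parents.remove('owl:Thing')
    return is_a


hierarchy = build_hierarchy(SAMPLE)
```

The two-step registration matters because a class can only be given a parent once both it and the parent exist, which is why every class is first parked under owl:Thing and then cleaned up at the end.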

We now have a template for looping over multiple typologies to contribute to a build as well as to bring in KBpedia’s property structure. These are the topics of our next installment.

Additional Documentation

There were dozens of small issues and problems that arose in working out the routine above. Here are some resources that were especially helpful in informing that effort:

- The owlready2 mailing list is an increasingly helpful resource for specific questions. With regard to the above, searches on ‘namespace’ and ‘import’ were helpful

- CSV file Import with DictReader

- Traps for the Unwary in Python’s Import System

- DataCamp’s Scope of Variables in Python

- Python’s CSV File Reading and Writing library.


In the large code block above, the routine would not work for me as written, so I had to change the second-to-last line in the code to if owl.Thing in var1.is_a: var1.is_a.remove(owl.Thing)

Just thought you would like to know.