These are Two Recommended Utilities

This installment in our Cooking with Python and KBpedia series covers two useful (essential?) utilities for any substantial project: stats and logging. stats refers to internal program or knowledge graph metrics, not a generalized statistical analysis package. logging is a longstanding Python module that provides persistence and superior control over using simple print statements for program tracing and debugging.

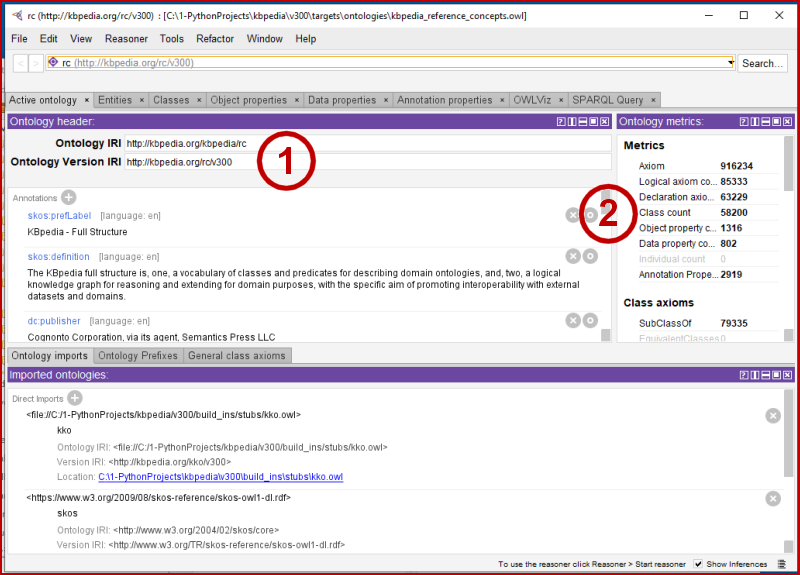

On the stats side, we will emphasize capturing metrics not already available when using Protégé, which provides its own set of useful baseline statistics. (See Figure 1.) These metrics are mostly simple counts, with some sums and averages. The results of these metrics are some of the numerical data points that we will use in the next installment on charting.

On the logging front, we will edit all of our existing routines to log to file, as well as print to screen. We can embed these routines in existing functions so that we may better track our efforts.

An Internal Stats Module

In our earlier extract-and-build routines we have already put in place the basic file and set processing steps necessary to capture additional metrics. We will add to these here, in the process creating an internal stats module in our cowpoke package.

First, there is no need to duplicate the information that already comes to us when using Protégé. Here are the standard stats provided on the main start-up screen:

We are loading up here (1) our KBpedia v 300 in-progress. We can see that Protégé gives us counts (2) of classes (58200), object properties (1316), data properties (802), annotation properties (2919), and a few other metrics.

We will take these values as givens, and will enter them as part of the initialization for our own internal procedures (for checking totals and calculating percentages).
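As a minimal sketch of how these givens might be entered and used for percentages, the snippet below stores the Protégé counts quoted above in a dictionary. The names `protege_counts` and `percent_of` are hypothetical and not part of cowpoke.

```python
# Baseline counts reported by Protégé for the in-progress KBpedia build
# (values taken from the text above). The dictionary name is hypothetical.
protege_counts = {
    'classes': 58200,
    'object_properties': 1316,
    'data_properties': 802,
    'annotation_properties': 2919,
}

def percent_of(part, whole):
    """Return part as a percentage of whole, rounded to two decimals."""
    return round(100.0 * part / whole, 2)

# Total of the three property types, used as the denominator below
total_properties = (protege_counts['object_properties']
                    + protege_counts['data_properties']
                    + protege_counts['annotation_properties'])

# Share of each property type among all properties
for name in ('object_properties', 'data_properties', 'annotation_properties'):
    print(name, percent_of(protege_counts[name], total_properties))
```

Keeping the baseline values in one place means later routines can check their own totals against them without re-opening Protégé.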

Pyflakes is a simple Python code checker that you may want to consider. If you want to add in stylistic checks, you want flake8, which combines Pyflakes with style checks against PEP 8 or pycodestyle. Pylint is another static code style checker.

    from cowpoke.__main__ import *
    from cowpoke.config import *

    ### KEY CONFIG SETTINGS (see build_deck in config.py) ###
    # 'kb_src'   : 'standard'
    # count      : 14                                                # Note 1
    # out_file   : 'C:/1-PythonProjects/kbpedia/v300/targets/stats/kko_typol_stats.csv'

    from itertools import combinations                               # Note 2

    def typol_stats(**build_deck):
        kko_list = typol_dict.values()
        count = build_deck.get('count')
        out_file = build_deck.get('out_file')
        with open(out_file, 'w', encoding='utf8') as output:
            print('count,size_1,kko_1,size_2,kko_2,intersect RCs', file=output)
            # Compare every unordered pair of typologies
            for i in combinations(kko_list,2):
                kko_1 = i[0]
                kko_2 = i[1]
                # Resolve the 'kko.Name' strings to their class objects
                kko_1_frag = kko_1.replace('kko.', '')
                kko_1 = getattr(kko, kko_1_frag)
                print(kko_1_frag)
                kko_2_frag = kko_2.replace('kko.', '')
                kko_2 = getattr(kko, kko_2_frag)
                # Gather the descendants of each typology as sets
                descent_1 = kko_1.descendants(include_self = False)
                descent_1 = set(descent_1)
                size_1 = len(descent_1)
                descent_2 = kko_2.descendants(include_self = False)
                descent_2 = set(descent_2)
                size_2 = len(descent_2)
                # Reference concepts shared by both typologies
                intersect = descent_1.intersection(descent_2)
                num = len(intersect)
                # List the shared members only when the overlap is small
                if num <= count:
                    print(num, size_1, kko_1, size_2, kko_2, intersect, sep=',', file=output)
                else:
                    print(num, size_1, kko_1, size_2, kko_2, sep=',', file=output)
        print('KKO typology intersection analysis is done.')

    typol_stats(**build_deck)

The procedure above takes a few minutes to run. You can inspect what the routine produces at C:/1-PythonProjects/kbpedia/v300/targets/stats/kko_typol_stats.csv.
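To make the pairwise technique easier to see in isolation, here is a small sketch that applies `combinations` and set intersection to toy data. The `typologies` dictionary and the `pairwise_overlaps` function are hypothetical stand-ins, not KBpedia content or cowpoke code.

```python
from itertools import combinations

# Hypothetical stand-ins for typologies and their descendant sets
typologies = {
    'Animals': {'Dog', 'Cat', 'Horse'},
    'Pets': {'Dog', 'Cat', 'Goldfish'},
    'Plants': {'Oak', 'Fern'},
}

def pairwise_overlaps(sets_by_name):
    """Return (name_1, name_2, shared members) for every unordered pair."""
    results = []
    for name_1, name_2 in combinations(sets_by_name, 2):
        shared = sets_by_name[name_1] & sets_by_name[name_2]
        results.append((name_1, name_2, shared))
    return results

# Report the size and members of each overlap
for name_1, name_2, shared in pairwise_overlaps(typologies):
    print(name_1, name_2, len(shared), sorted(shared))
```

Because `combinations` yields each unordered pair once, n typologies produce n(n-1)/2 comparisons, which is part of why a run over the full knowledge graph takes a while.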

We can also get summary statistics from the knowledge graph using the rdflib package. Here is a modification of one of the library’s routines to obtain some VoID statistics:

    import collections

    from rdflib import URIRef, Graph, Literal
    from rdflib.namespace import VOID, RDF

    graph = world.as_rdflib_graph()
    g = graph

    def generate2VoID(g, dataset=None, res=None, distinctForPartitions=True):
        """
        Returns a VoID description of the passed dataset

        For more info on Vocabulary of Interlinked Datasets (VoID), see:
        http://vocab.deri.ie/void

        This only makes two passes through the triples (once to detect the types
        of things)

        The tradeoff is that lots of temporary structures are built up in memory
        meaning lots of memory may be consumed :)

        distinctSubjects/objects are tracked for each class/propertyPartition
        this requires more memory again
        """

        # First pass: map each entity to its types and each class to its members
        typeMap = collections.defaultdict(set)
        classes = collections.defaultdict(set)
        for e, c in g.subject_objects(RDF.type):
            classes[c].add(e)
            typeMap[e].add(c)

        triples = 0
        subjects = set()
        objects = set()
        properties = set()
        classCount = collections.defaultdict(int)
        propCount = collections.defaultdict(int)

        classProps = collections.defaultdict(set)
        classObjects = collections.defaultdict(set)
        propSubjects = collections.defaultdict(set)
        propObjects = collections.defaultdict(set)

        # Running totals added for our own summary printout
        num_classObjects = 0
        num_propSubjects = 0
        num_propObjects = 0

        # Second pass: count triples and collect the partition members
        for s, p, o in g:
            triples += 1
            subjects.add(s)
            properties.add(p)
            objects.add(o)

            # class partitions
            if s in typeMap:
                for c in typeMap[s]:
                    classCount[c] += 1
                    if distinctForPartitions:
                        classObjects[c].add(o)
                        classProps[c].add(p)

            # property partitions
            propCount[p] += 1
            if distinctForPartitions:
                propObjects[p].add(o)
                propSubjects[p].add(s)

        if not dataset:
            dataset = URIRef('http://kbpedia.org/kko/rc/')

        if not res:
            res = Graph()

        res.add((dataset, RDF.type, VOID.Dataset))

        # basic stats
        res.add((dataset, VOID.triples, Literal(triples)))
        res.add((dataset, VOID.classes, Literal(len(classes))))
        res.add((dataset, VOID.distinctObjects, Literal(len(objects))))
        res.add((dataset, VOID.distinctSubjects, Literal(len(subjects))))
        res.add((dataset, VOID.properties, Literal(len(properties))))

        for i, c in enumerate(classes):
            part = URIRef(dataset + "_class%d" % i)
            res.add((dataset, VOID.classPartition, part))
            res.add((part, RDF.type, VOID.Dataset))
            res.add((part, VOID.triples, Literal(classCount[c])))
            res.add((part, VOID.classes, Literal(1)))
            res.add((part, VOID["class"], c))
            res.add((part, VOID.entities, Literal(len(classes[c]))))
            res.add((part, VOID.distinctSubjects, Literal(len(classes[c]))))

            if distinctForPartitions:
                res.add(
                    (part, VOID.properties, Literal(len(classProps[c]))))
                res.add((part, VOID.distinctObjects,
                         Literal(len(classObjects[c]))))
                num_classObjects = num_classObjects + len(classObjects[c])

        for i, p in enumerate(properties):
            part = URIRef(dataset + "_property%d" % i)
            res.add((dataset, VOID.propertyPartition, part))
            res.add((part, RDF.type, VOID.Dataset))
            res.add((part, VOID.triples, Literal(propCount[p])))
            res.add((part, VOID.properties, Literal(1)))
            res.add((part, VOID.property, p))

            if distinctForPartitions:
                entities = 0
                propClasses = set()
                for s in propSubjects[p]:
                    # Only typed subjects count as entities and contribute classes
                    if s in typeMap:
                        entities += 1
                        for c in typeMap[s]:
                            propClasses.add(c)

                res.add((part, VOID.entities, Literal(entities)))
                res.add((part, VOID.classes, Literal(len(propClasses))))
                res.add((part, VOID.distinctSubjects,
                         Literal(len(propSubjects[p]))))
                res.add((part, VOID.distinctObjects,
                         Literal(len(propObjects[p]))))
                num_propSubjects = num_propSubjects + len(propSubjects[p])
                num_propObjects = num_propObjects + len(propObjects[p])

        print('triples:', triples)
        print('subjects:', len(subjects))
        print('objects:', len(objects))
        print('classObjects:', num_classObjects)
        print('propObjects:', num_propObjects)
        print('propSubjects:', num_propSubjects)

        return res, dataset

    generate2VoID(g, dataset=None, res=None, distinctForPartitions=True)

    triples: 1662129
    subjects: 213395
    objects: 698372
    classObjects: 850446
    propObjects: 858445
    propSubjects: 1268005
    (<Graph identifier=Na47c69e2f7b84d9b911c46e2cdf0fe11 (<class 'rdflib.graph.Graph'>)>,
     rdflib.term.URIRef('http://kbpedia.org/kko/rc/'))

These metrics can go into the pot with the summary statistics we also gain from Protégé. We’ll see some graphic reports on these numbers in the next installment.
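The counting logic in the routine can be hard to follow at full size, so here is a stripped-down sketch of the same single-pass idea using plain tuples rather than an rdflib graph. The triples and the `partition_counts` function are hypothetical illustrations. The sketch also shows how a sum of per-property distinct objects can exceed the overall count of distinct objects, which is presumably why the propObjects total reported above is larger than the objects total.

```python
from collections import defaultdict

# Hypothetical triples as plain tuples of strings: (subject, predicate, object)
triples = [
    ('ex:Dog', 'rdf:type', 'owl:Class'),
    ('ex:Cat', 'rdf:type', 'owl:Class'),
    ('ex:Dog', 'rdfs:subClassOf', 'ex:Mammal'),
    ('ex:Cat', 'rdfs:subClassOf', 'ex:Mammal'),
    ('ex:Dog', 'ex:relatedTo', 'ex:Mammal'),
    ('ex:Dog', 'rdfs:label', 'dog'),
]

def partition_counts(triples):
    """Collect overall and per-property counts in one pass over the triples."""
    subjects, objects = set(), set()
    prop_count = defaultdict(int)      # triples per property
    prop_objects = defaultdict(set)    # distinct objects per property
    for s, p, o in triples:
        subjects.add(s)
        objects.add(o)
        prop_count[p] += 1
        prop_objects[p].add(o)
    return {
        'triples': len(triples),
        'subjects': len(subjects),
        'objects': len(objects),
        'prop_count': dict(prop_count),
        # An object used by two properties is counted once for each of them
        'sum_prop_objects': sum(len(v) for v in prop_objects.values()),
    }

stats = partition_counts(triples)
print(stats)
```

In this toy data `ex:Mammal` is an object of two different properties, so it is counted twice in the per-property sum but only once among the distinct objects.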

Logging

I think an honest appraisal may straddle the fence about whether logging makes sense for the cowpoke package. On the one hand, we have begun to assemble a fair amount of code within the package, which would normally make logging advisable. On the other hand, we run the various scripts only sporadically, and in pieces when we do. There is not a continuous production function under what we have done, so far.

If we were to introduce this code into a production setting or get multiple developers involved, I would definitely argue for the need for logging. Consider what we have in the current cowpoke code base as the transition condition for looking at this question. However, since logging is good practice, and we are close, let’s go ahead and invoke the capability nonetheless.

One chooses logging over the initial print statements because we gain these benefits:

- The ability to time stamp our logging messages

- The ability to keep our logging messages persistent

- We can generate messages constantly in the background for later inspection, and

- We can better organize our logging messages.

The logging module that comes with Python is quite mature and has further advantages:

- We can control the warning level of the messages and what warning levels trigger logging

- We can format the messages as we wish, and

- We can send our messages to file, screen, or socket.

By default, the Python logging module has five pre-set severity levels, listed here from lowest to highest:

- debug – detailed information, typically of interest only when diagnosing problems

- info – confirmation that things are working as expected

- warning – an indication that something unexpected happened, or indicative of some problem in the near future (e.g. ‘disk space low’). The software is still working as expected

- error – due to a more serious problem, the software has not been able to perform some function, or

- critical – a serious error, indicating that the program itself may be unable to continue running.
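The levels above are ordered, and a threshold lets through that level and everything more severe. A brief sketch of that filtering, using the standard level constants from the logging module (the `emitted_levels` helper is hypothetical):

```python
import logging

# The five standard levels, from least to most severe
LEVELS = [logging.DEBUG, logging.INFO, logging.WARNING,
          logging.ERROR, logging.CRITICAL]

def emitted_levels(threshold):
    """Names of the standard levels that pass a given threshold."""
    return [logging.getLevelName(level) for level in LEVELS if level >= threshold]

# With a WARNING threshold, debug and info messages are dropped
print(emitted_levels(logging.WARNING))
```

Each level is simply an integer under the hood, so comparing a message's level with the threshold is all the filtering amounts to.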

We’ll see in the following steps how we can configure the logger and set it up for working with our existing functions.

Configuration

Logging is organized as a tree of loggers, with the root logger at the system level. For a single package, it is best to set up a separate main logging branch under the root so that warning messages and logging levels can be treated consistently throughout the package. This design, for example, allows logging levels to be set with a single call across the entire package (sub-branches may still set their own conditions). This is what is called adding a ‘custom’ logger to your system.
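A short sketch of the tree idea follows. Dotted logger names define the branches, and a child with no level of its own takes the effective level of its nearest configured ancestor. The names `cowpoke_demo` and `cowpoke_demo.stats` are hypothetical and chosen only for illustration.

```python
import logging

# Dotted names define the hierarchy: 'cowpoke_demo.stats' sits under 'cowpoke_demo'
package_logger = logging.getLogger('cowpoke_demo')
module_logger = logging.getLogger('cowpoke_demo.stats')

# One call on the package logger sets the threshold for the whole branch
package_logger.setLevel(logging.INFO)

# The child has no level of its own, so it inherits from its parent
inherited = module_logger.getEffectiveLevel()
print(module_logger.parent is package_logger)
print(logging.getLevelName(inherited))

# A sub-branch can still override the package-wide setting
module_logger.setLevel(logging.DEBUG)
print(logging.getLevelName(module_logger.getEffectiveLevel()))
```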

Configurations may be set in Python code (the method we will use, because it is the simplest) or via a separate .ini file. Configuration settings include most of the specified items below.

Handlers

You can set up logging messages to go to console (screen) or file. In our examples below, we will do both.

Formatters

You can set up how your messages are formatted. We can also format console and file messages differently, as our examples below show.

Default Messages

Whenever we insert a logging call, besides setting the severity level, we also supply a message specific to that part of the code. Python's logging calls require that message text; what our configuration supplies by default is the surrounding layout (such as the time stamp, logger name, and severity level) defined by the formatter.

Example Code

Since our setup is straightforward, we will put our configuration settings into our existing config.py file and write our logging messages to the log subdirectory. Here is how our setup looks (with some in-line commentary):

    import logging

    # Create a custom logger
    logger = logging.getLogger(__name__)                             # Will invoke name of current module
    logger.setLevel(logging.DEBUG)                                   # Otherwise the root's default WARNING level filters out debug and info

    # Create handlers
    log_file = 'C:/1-PythonProjects/kbpedia/v300/targets/logs/kbpedia_logging.log'
    #logging.basicConfig(filename=log_file,level=logging.DEBUG)
    c_handler = logging.StreamHandler()                              # Separate c_ and f_ handlers
    f_handler = logging.FileHandler(log_file)
    c_handler.setLevel(logging.WARNING)
    f_handler.setLevel(logging.DEBUG)

    # Create formatters and add them to handlers                    # File logs include time stamp, console does not
    c_format = logging.Formatter('%(name)s - %(levelname)s - %(message)s')
    f_format = logging.Formatter('%(asctime)s - %(name)s - %(levelname)s - %(message)s')
    c_handler.setFormatter(c_format)
    f_handler.setFormatter(f_format)

    # Add handlers to the logger
    logger.addHandler(c_handler)
    logger.addHandler(f_handler)

    # Note: these module-level calls go to the root logger, not to the custom
    # 'logger' above, which is why the output shows 'root' in the default layout
    logging.debug('This is a debug message.')
    logging.info('This is an informational message.')
    logging.warning('Warning! Something does not look right.')
    logging.error('You have encountered an error.')
    logging.critical('You have experienced a critical problem.')

    WARNING:root:Warning! Something does not look right.
    ERROR:root:You have encountered an error.
    CRITICAL:root:You have experienced a critical problem.

Make sure you have this statement at the top of all of your cowpoke files:

import logging

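Then, as you write or update your routines, use the logger.severity() statement on the custom logger (for example, logger.info() or logger.warning()) where you previously were using print. Calls made on the module itself, such as logging.warning(), go to the root logger instead and bypass the handlers configured above. Going through the custom logger will cause you to get messages to both console and file, at the severity threshold level set for each handler. It is that easy!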
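As a hedged sketch of what a converted routine might look like, the example below routes messages through a named logger with separate console and file handlers. The `build_logger` and `extract_step` functions, the `cowpoke_demo.extract` name, and the temporary log path are all hypothetical; in cowpoke the path would come from the configuration instead.

```python
import logging
import os
import tempfile

def build_logger(name, log_path):
    """Return a named logger that writes to console and to a log file."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.DEBUG)       # let the handlers do the filtering
    logger.propagate = False             # avoid duplicate output via the root logger
    if not logger.handlers:              # do not stack handlers on repeated calls
        c_handler = logging.StreamHandler()
        c_handler.setLevel(logging.WARNING)
        c_handler.setFormatter(
            logging.Formatter('%(name)s - %(levelname)s - %(message)s'))
        f_handler = logging.FileHandler(log_path, encoding='utf8')
        f_handler.setLevel(logging.DEBUG)
        f_handler.setFormatter(
            logging.Formatter('%(asctime)s - %(name)s - %(levelname)s - %(message)s'))
        logger.addHandler(c_handler)
        logger.addHandler(f_handler)
    return logger

# Hypothetical log location in a temporary directory
log_path = os.path.join(tempfile.mkdtemp(), 'demo_logging.log')
logger = build_logger('cowpoke_demo.extract', log_path)

def extract_step(items):
    """A stand-in routine that logs where it once would have printed."""
    logger.info('Starting extract of %d items.', len(items))
    if not items:
        logger.warning('Nothing to extract.')
    return len(items)

extract_step(['a', 'b'])
extract_step([])

# Read the file back to confirm what was persisted
for handler in logger.handlers:
    handler.flush()
with open(log_path, encoding='utf8') as f:
    log_text = f.read()
print(log_text)
```

With these thresholds the informational messages reach only the file, while the warning goes to both the file and the console.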

Additional Documentation

Here is some supporting documentation for today’s installment:

- VoID documentation from rdflib

- A nice step-by-step logging guide

- ‘How to’ manual for the Python logging facility module

- RealPython’s intro guide to the logger

- Configuration guide for the logger from The Hitchhiker’s Guide to Python.
