It’s Taken Too Many Years to Re-visit the ‘Deep Web’ Analysis

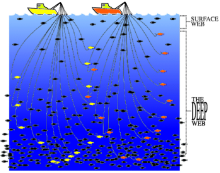

It’s been seven years since Thane Paulsen and I first coined the term ‘deep Web’, perhaps a couple of full generational cycles for the Internet. What we knew then, and what “Web surfers” did then, has changed markedly. And, of course, our coining of the term and BrightPlanet’s publishing of the first quantitative study on the deep Web did nothing to create the phenomenon of dynamic content itself; we merely gave it a name and helped promote a bit of public understanding of some powerful subterranean forces driving the nature and tectonics of the emerging Web.

The first public release of The Deep Web: Surfacing Hidden Value (courtesy of the Internet Archive’s Wayback Machine), in July 2000, opened with a bold claim:

BrightPlanet has uncovered the “deep” Web — a vast reservoir of Internet content that is 500 times larger than the known “surface” World Wide Web. What makes the discovery of the deep Web so significant is the quality of content found within. There are literally hundreds of billions of highly valuable documents hidden in searchable databases that cannot be retrieved by conventional search engines.

On the day the study was released, we needed to increase our server capacity nine-fold to meet news demand after CNN, and then some 300 major news outlets, picked up the story. By 2001, when the University of Michigan’s Journal of Electronic Publishing and its wonderful editor, Judith A. Turner, decided to give the topic renewed thrust, we were able to clean up the presentation and language quite a bit, but did little to actually update many of the statistics. (That version, in fact, is the one mostly cited today.)

Over the years there have been some books published and other estimates put forward, more often citing lower amounts in the deep Web than my original estimates, but, with one exception (see below), none of these were backed by new analysis. I was asked numerous times to update the study, and had even begun collating new analysis at a couple of points, but completing the work required substantial effort and it always took a back seat to other duties, so it was never finished.

Recent Updates and Criticisms

It was thus with some surprise and pleasure that I first found reference yesterday to Dirk Lewandowski’s and Phillip Mayr’s 2006 paper, “Exploring the Academic Invisible Web” [Library Hi Tech 24(4), 529-539], that takes direct aim at the analysis in my original paper. (Actually, they worked from the 2001 JEP version, but, as noted, the analysis is virtually identical to the original 2000 version.) The authors pretty soundly criticize some of the methodology in my original paper and, for the most part, I agree with them.

My original analysis combined a manual evaluation of the “top 60” then-extant Web databases with an estimate of the total number of searchable databases (estimated at about 200,000, which they incorrectly cite as 100,000) and assessments of the mean size of each database based on a random sampling of those databases. Lewandowski and Mayr note conceptual flaws in the analysis at these levels:

- First, using mean rather than median database size overestimates the total,

- Second, databases of questionable relevance to their interest in academic content (such as weather records from NOAA or Earth survey data by satellite) skewed my estimates upward, and

- Third, my estimates were based on database size estimates (in GBs) and not internal record counts.
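The first criticism is easy to see with synthetic numbers. The sketch below is a hypothetical illustration, not the original study's data or procedure: it draws record counts for a sample of databases from a heavy-tailed Pareto distribution (an assumed stand-in for the power-law pattern of Web content), then extrapolates to the roughly 200,000 searchable databases of the original estimate using both the sample mean and the sample median. The shape, scale, and sample size are all illustrative assumptions.

```python
import random
import statistics

# Hypothetical sketch: synthetic, heavy-tailed database sizes (records per database).
# ALPHA, SCALE and SAMPLE_SIZE are illustrative assumptions, not figures from the study.
N_DATABASES = 200_000   # total searchable databases, per the original estimate
SAMPLE_SIZE = 100       # assumed size of the random sample
ALPHA = 1.2             # assumed Pareto shape (smaller = heavier tail)
SCALE = 1_000           # assumed minimum database size, in records


def sample_sizes(n, alpha=ALPHA, scale=SCALE, seed=42):
    """Draw n synthetic database sizes from a Pareto distribution (each >= scale)."""
    rng = random.Random(seed)
    return [scale * rng.paretovariate(alpha) for _ in range(n)]


def extrapolate(sizes, n_total):
    """Project a total record count from a sample, once by mean and once by median."""
    mean = statistics.mean(sizes)
    median = statistics.median(sizes)
    return {
        "mean": mean,
        "median": median,
        "total_by_mean": mean * n_total,
        "total_by_median": median * n_total,
    }


if __name__ == "__main__":
    est = extrapolate(sample_sizes(SAMPLE_SIZE), N_DATABASES)
    for key, value in est.items():
        print(f"{key:>16}: {value:,.0f}")
```

With a heavy-tailed sample, the mean-based projection lands well above the median-based one, because a handful of very large databases pull the mean up. Neither figure is "the" true total; the sketch only shows how sensitive the extrapolation is to that one choice.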

On the other hand, the authors also criticized my definition of deep content as too narrow, saying it overlooked certain content types such as PDFs, now routinely indexed and retrieved on the surface Web. There has also been uncertain but tangible growth in standard search engine content, with about 20 billion documents being the last figure cited since Google and Yahoo! ceased their war of index numbers.

Though not really offering an alternative, full-blown analysis, the authors use the Gale Directory of Databases to derive an alternative estimate of perhaps 20 billion to 100 billion documents on the deep Web of interest for academic purposes, which they later seem to imply also needs to be discounted by further percentages to get at “word-oriented” and “full-text or bibliographic” records that they deem appropriate.

My Assessment of the Criticisms

As noted, I generally agree with these criticisms. For example, since the time of original publication, we have seen the power-law distribution of most things on the Internet, including popularity and traffic. Such highly skewed distributions will always produce overestimates when calculations are based on means rather than medians. I also think that meaningful content types were both overused (more database-like records) and underused (PDF content that is now routinely indexed) in my original analysis.

However, the authors’ third criticism is patently wrong, since three different methods were used to estimate internal database record counts and the average size of the records they contained. I would also have preferred a more careful reading of my actual paper by the authors, since there are numerous other citation errors and mischaracterizations.

On an epistemological level, I disagree with the authors’ use of the term “invisible Web”, a label that we tried hard in the paper to overturn and that is fading as a current term of art. Internet Tutorials (initially, SUNY at Albany Library) addresses this topic head-on, preferring “deep Web” on a number of compelling grounds, including that “there is no such thing as recorded information that is invisible. Some information may be more of a challenge to find than others, but this is not the same as invisibility.”

Finally, I am not compelled by the authors’ simplistic, alternate partial estimate based solely on the Gale directory, but they readily acknowledge not doing a full-blown analysis and having different objectives in mind. I agree with the authors in calling for a full, alternative analysis. I think we all agree that this is a non-trivial undertaking and could itself be subject to newer methodological pitfalls.

So, What is the Quantitative Update?

Within a couple of years after the initial publication of my paper, I suspected that the “500 times” claim for the size of the deep Web, compared with what is discoverable by search engines, may have been too high. Indeed, in later corporate literature and PowerPoint presentations, I backed off the initial 2000-2001 claims and began speaking in ranges from a “few times” to as high as “100 times” greater for the size of the deep Web.

In the last seven years, the only other quantitative study of its kind of which I am aware is documented in the paper “Structured Databases on the Web: Observations and Implications,” conducted by Chang et al. in April 2004 and published by ACM SIGMOD, which estimated 330,000 deep Web sources with over 1.2 million query forms, reflecting a rapid 3- to 7-fold increase in the 4 years since my original paper. Unlike the Lewandowski and Mayr partial analysis, this effort and others by that group suggest an even larger deep Web than my initial estimates!
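To put the 3-7 times increase over 4 years cited above into per-year terms, one can compute the compound annual growth rate, factor^(1/years) - 1. This is simple arithmetic on the quoted range, offered as an illustration; it is not a figure reported by Chang et al., and the helper name is hypothetical.

```python
def annual_growth_rate(factor: float, years: float) -> float:
    """Compound annual growth rate implied by a total growth factor over `years`."""
    if factor <= 0 or years <= 0:
        raise ValueError("factor and years must be positive")
    return factor ** (1.0 / years) - 1.0


if __name__ == "__main__":
    # The range quoted in the text: a 3- to 7-fold increase over 4 years.
    for factor in (3, 7):
        rate = annual_growth_rate(factor, 4)
        print(f"{factor}x over 4 years is about {rate:.1%} per year")
```

Run as-is, this prints roughly 31.6% and 62.7% per year for the low and high ends of the range.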

The truth is, we didn’t know then, and we don’t know now, what the actual size of the dynamic Web truly is. (And, aside from a sound bite, does it really matter? It is huge by any measure.) Heroic efforts such as these quantitative analyses, or the still-more ambitious How Much Information? study from UC Berkeley’s School of Information Management and Systems (SIMS), still have a role in helping to bound our understanding of information overload. As long as such studies gain news traction, they will be pursued. So, what might today’s story look like?

First, the methodological problems in my original analysis remain and (I believe today) resulted in overestimates. Another factor today that could lead to overestimating the deep Web relative to the surface Web is that much “deep” content is increasingly exposed to standard search engines, be it through Google Scholar, Yahoo!’s library relationships, individual site indexing and sharing such as through search appliances, or other “gray” factors we noted in our 2000-2001 studies. These factors, and certainly others, act to narrow the difference between exposed search engine content (“surface Web”) and what we have termed the “deep Web.”

However, countering these facts are two newer trends. First, foreign language content is growing at much higher rates and is often under-sampled. Second, blogs and other democratized sources of content are exploding. What these trends may be doing to content balances is, frankly, anyone’s guess.

So, while awareness of the qualitative nature of Web content has grown tremendously in the past near-decade, our quantitative understanding remains weak. Yet improvements in technology and harvesting may now make it possible to overcome earlier limits.

Perhaps there is another Ph.D. candidate or three out there who may want to tackle this question in a better (and more definitive) way. As Chang and Cho put it in their paper, “Accessing the Web: From Search to Integration,” presented at the 2006 ACM SIGMOD International Conference on Management of Data in Chicago:

On the other hand, for the deep Web, while the proliferation of structured sources has promised unlimited possibilities for more precise and aggregated access, it has also presented new challenges for realizing large scale and dynamic information integration. These issues are in essence related to data management, in a large scale, and thus present novel problems and interesting opportunities for our research community.

Who knows? For the right researcher with the right methodology, there may be a Science or Nature paper in prospect!

Nice post!

You mentioned that Dirk Lewandowski and Phillip Mayr use the “Gale Directory of Databases” in their work. I had a look at the directory’s description (at http://library.dialog.com/bluesheets/html/bl0230.html) and, shame on me, could not figure out what it is or how one can access it. Can you somehow search for something in this “Gale Directory of Databases”? Or is there perhaps a DVD containing it? According to the description, it looks like they (Thomson) put all of the directory’s content on paper, so in fact it is just two printed volumes, but in that case it is kind of useless …

Hi,

The Gale Directory of Databases provides detailed information on publicly available databases and database products accessible either online or through various media such as DVD, CD-ROM, magnetic tape, etc. Access to the directory requires a subscription; many universities and corporations have them, but to my knowledge there is no free, online access for general users or the non-subscribing public.

Thus, if you’re an academic, you can do the sorts of analysis that Lewandowski and Mayr did, but individuals without subscription access cannot do so independently.

Hope this is helpful! If you’re really interested, see if a large local library can give you access.

Mike

A heroic effort to quantify a very complex phenomenon.

A separate but related issue is ranking: how a reference to content on a particular site gets to the top of the list. Even if something interesting appears toward the top, how likely is it that someone has the patience to get through the 900 or so references listed?

Which raises the issue of Google faking us out about what we are seeing in the results … I’ve never been able to get past reference 900, nothing seems to exist beyond the first 900 references despite the 9,373,622 hits claimed by the engine.

For example, using the very general search term “financial investments” the Google engine reported 59,600,000 results. In this case, dividing the 900 visible results by the 59,600,000 total results, what I’m seeing on the Google results page is only about .0015% of everything purported to be in the index.
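The percentage above is just visible results divided by reported hits. A tiny sketch of that arithmetic with the commenter's figures follows; the roughly 900-result ceiling is his observation, not a documented Google limit, and the function name is hypothetical.

```python
def visible_share(visible: int, reported: int) -> float:
    """Percentage of a reported hit count that can actually be paged through."""
    if reported <= 0:
        raise ValueError("reported hit count must be positive")
    return 100.0 * visible / reported


if __name__ == "__main__":
    # Commenter's figures: ~900 viewable results vs. 59,600,000 reported hits.
    print(f"{visible_share(900, 59_600_000):.4f}%")  # prints 0.0015%
```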

One should acknowledge the implicit assumption that each result is unique, which of course is rarely true. If .15% of the unique results are displayed by Google, what one is actually seeing is still only about .0015% of everything out there. By whatever method one computes it, the real ratio of references in the surface Web to the deep Web is something like one in many thousands …

So, search engines have become a serious “knowledge bottleneck”, to use an old-fashioned AI term. In fact, Google seems to be very aware of it and is eager to be part of whatever solution emerges. One presumes this would be a large-scale collaborative effort of some sort, akin to a Wiki.

Actually, your list of “500 Semantic Web Tools” is probably as close as anything I could name to what the Semantic Web would do to break up the search engine logjam. In one version of a solution, many individual lists like your “500 SemWeb Tools” would be linkable by pre-defined semantic classifiers and tags. The function of the search engine would be to integrate across the many more or less static references compiled by many people on a given subject. Something like FOAF sharing of resources. Maybe …

Thanks for another interesting article.

– Bill

Great article. It seems Bill Breitmayer was right in his assessment that a large-scale collaborative effort is needed to process the information not visible to the crawler. Without it, it is impossible to figure out what type of information human beings are actually digging into. We can only guess, and the estimate will suffer.

When I started Peer Belt, I did not even consider the deep Web and what it really means to us all. The initial goal for Peer Belt was to help quality content publishers reach their audiences despite somebody else’s aggressive search engine optimization. Along the way, we discovered that Peer Belt’s approach lets us, as individuals, organize the information that matters most. After reading this post, I am convinced that utilizing human-content interaction can solve yet another problem.

As intuitive as it is, users’ implicit actions, not artificial algorithms, indicate relevance! It is amazing that no one has seen it.

Once again, great article, Mike, and comments, Bill!

-Krassi