In response to my review last week of OpenLink’s offerings, Kingsley Idehen posted some really cool examples of what extracted RDF looks like. Kingsley used the content of my review to generate this structure; he also provided some further examples using DBpedia (which I have also recently discussed).

What is really neat about these examples is how amazingly easy it is to create RDF, and the “value added” that results when you do so. Below, I again discuss structure and RDF (only this time more on why it is important), describe how to create it from standard Web content, and link to a couple of other recent efforts to structurize content.

Why is Structure Important?

The first generation of the Web, what some now refer to as Web 1.0, was document-centric. Resources or links almost always referred to the single Web page (or document) that displayed in your browser. Or, stated another way, the basic, most atomic unit of organizing information was the document. Though today’s so-called Web 2.0 has added social collaboration and tagging, search and display still largely occur in this document-centric mode.

Yet, of course, a document or Web page almost always refers to many entities or objects, often thousands, which are the real atomic units of information. If we could extract these entities or objects (that is, the structure within the document, or what one might envision as the individual Lego bricks from which the document is constructed), then we could manipulate things at a more meaningful, more atomic and granular level. Entities would then become the basis of manipulation, not the jumbled hodge-podge of the broader documents. Some have called this more atomic level of object information the “Web of data”; others use “Web 3.0.” Either term is OK, I guess, but neither is sufficiently evocative, in my opinion, to explain why all of this stuff is important.

So, what does this entity structure give us, what is this thing I’ve been calling the structured Web?

First, let’s take simple search. One problem with conventional text indexing, the basis for all major search engines, is the ambiguity of words. For example, the term ‘driver’ could refer to a printer driver, a Big Bertha golf driver, a NASCAR driver, a driver of screws, a family car driver, a force for change, or other meanings. Entity extraction from a document can help disambiguate which “class” (often referred to as a “facet” when applied to search or browsing) is being discussed in the document (that is, its context), so that spurious search results can be removed. Extracted structure thus helps to filter unwanted search results.

Extracted entities may also enable narrowing search requests to, say, only documents published in the last month, or from certain locations, or by certain authors, or from certain blogs, publishers or journals. Such retrieval options are not features of most search engines. Extracted structure thus adds new dimensions to characterize candidate results.

Second, in the structured Web, the basis of information retrieval and manipulation becomes the entity. Assembling all of the relevant information — irrespective of the completeness or location of source content sites — becomes easy. In theory, with a single request, we could collate the entire corpus of information about an entity, say Albert Einstein, from life history to publications to Nobel prizes to who links to these or any other relationship. The six degrees of Kevin Bacon would become child’s play. But sadly today, knowledge workers spend too much of their time assembling such information from disparate sources, with notable incompleteness, imprecision and inefficiency.
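To make the idea of entity-centric collation a little more concrete, here is a minimal Python sketch. It assumes triples have already been gathered from two sources; the `example.org` URIs, the short predicate names and the values are all invented for illustration and are not real extracted data.

```python
from collections import defaultdict

# Hypothetical triples gathered from two different sources; the URIs and
# values are illustrative only, not real extracted data.
SOURCE_A = [
    ("http://example.org/entity/Albert_Einstein", "birthYear", "1879"),
    ("http://example.org/entity/Albert_Einstein", "award", "Nobel Prize in Physics"),
]
SOURCE_B = [
    ("http://example.org/entity/Albert_Einstein", "field", "Physics"),
    ("http://example.org/entity/Kevin_Bacon", "occupation", "Actor"),
]


def collate(*sources):
    """Merge triples from any number of sources into one record per subject."""
    records = defaultdict(lambda: defaultdict(set))
    for triples in sources:
        for subject, predicate, obj in triples:
            records[subject][predicate].add(obj)
    return records


einstein = collate(SOURCE_A, SOURCE_B)["http://example.org/entity/Albert_Einstein"]
for predicate in sorted(einstein):
    print(predicate, sorted(einstein[predicate]))
```

Because every statement is keyed on the same subject URI, it does not matter which site contributed which fact; the record simply accumulates.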

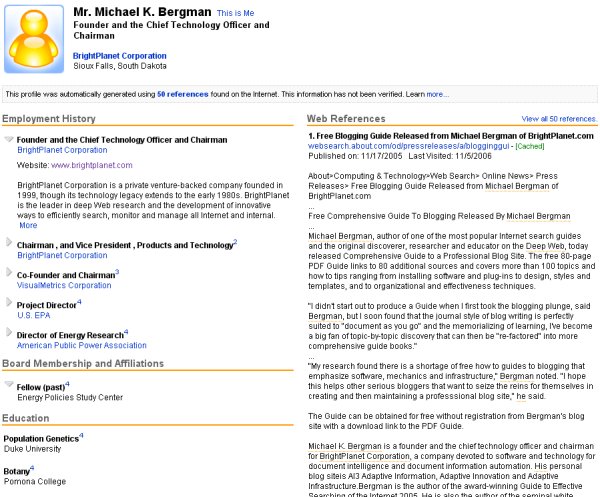

Third, the availability of such structured information makes for meaningful information display and presentation. One of the emerging exemplars of structured presentation (among others) is ZoomInfo. This, for example, is what a search on my name produces from ZoomInfo’s person search:

Granted, the listing is a bit out of date. But it is a structured view of what can be found in bits and pieces elsewhere about me, being built from about 50 contributing Web sources. And, the structure it provides in terms of name, title, employers, education, etc., is also more useful than a long page of results links with (mostly) unusable summary abstracts.

Presentations such as ZoomInfo’s will become common as we move to structured entity information on the Web as opposed to documents. And, we will see it for many more classes of entities beyond the categories of people, companies or jobs used by ZoomInfo. We are, for example, seeing such liftoff occurring in other categories of structured data within sources like DBpedia.

Fourth, we can get new types of mash-ups and data displays when this structure is combined, from calendars to tabular reports to timelines, maps, graphs of relatedness and topic clustering. We can also follow links and “explore” or “skate” this network of inter-relatedness, discovering new meanings and relationships.

And, fifth, where all of this is leading is, of course, the semantic Web. We will be able to apply description logics and draw inferences based on these relationships, resulting in the derivation of new information and connections not directly found in any of the atomic parts. However, note that much value still comes from the first areas of the structured Web alone, achievable immediately, short of this full-blown semantic Web vision.

OK, So What is this RDF Stuff Again?

As my earlier DBpedia review described, RDF — Resource Description Framework — is the data representation model at the heart of these trends. It uses a “triple” of subject-predicate-object, as generally defined by the W3C’s standard RDF model, to represent these informational entities or objects. In such triples, subject denotes the resource, and the predicate denotes traits or aspects of the resource and expresses a relationship between the subject and the object. (You can think of subjects and objects as nouns, predicates as verbs, and even think of the triples themselves as simple Dick-and-Jane sentences from a beginning reader.)

Resources are given a URI (as may also be given to predicates or objects that are not specified with a literal) so that there is a single, unique reference for each item. (OK, so here’s a tip: the length and complexity of the URIs themselves make these simple triple structures appear more complicated than they truly are! ‘Dick’ seems much more complicated when it is expressed as http://www.dick-is-the-subject-of-this-discussion.com/identity/dickResolver/OpenID.xml.)

These URI lookups can themselves be an individual assertion, an entire specification (as is the case, for example, when referencing the RDF or XML standards), or a complete or partial ontology for some domain or world-view. While the RDF data is often stored and displayed using XML syntax, that is not a requirement. Other RDF forms may include N3 or Turtle syntax, and variants or more schematic representations of RDF also exist.

Here are some sample statements (among a few hundred generated, see later) from my reference blog piece on OpenLink that illustrate RDF triples:

| Subject | Predicate | Object |
| --- | --- | --- |
| https://www.mkbergman.com/?p=355 | http://purl.org/dc/terms/created | 2007-04-16T19:33:50Z |
| https://www.mkbergman.com/?p=355 | http://rdfs.org/sioc/ns#link | https://www.mkbergman.com/?p=355 |
| https://www.mkbergman.com/?p=355 | http://purl.org/dc/elements/1.1/title | OpenLink Plugs the Gaps in the Structured Web |
| https://www.mkbergman.com/?p=355 | http://rdfs.org/sioc/ns#topic | https://www.mkbergman.com/?cat=2 |
| http://en.wikipedia.org/wiki/SIOC | http://www.w3.org/2000/01/rdf-schema#label | SIOC |

The first four items have the post itself as the subject. The last statement is an entity referenced within my subject blog post. In all cases, the specific subjects of the triple statements are resources.

In all statements, the predicates point to reference URIs that precisely define the schema or controlled vocabularies used in that triple statement. For readability, such links are sometimes aliased, such as created at (time), links to, has title, is within topic, and has label, respectively, for the five example instances. These predicates form the edges or connecting lines between nodes in the conceptual RDF graph.

Lastly, note that the object, the other node in the triple besides the subject, may be either a URI reference or a literal. Depending on the literal type, the material can be full-text indexed (one triple, for example, may point to the entire text of the blog posting, while others point to each post image) or can be used to mash-up or display information in different display formats (such as calendars or timelines for date/time data or maps where the data refer to geo-coordinates).
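As a rough illustration of the URI-versus-literal distinction, the sketch below writes three of the sample statements in N-Triples form, where URI references go in angle brackets and literals go in double quotes. Deciding what counts as a URI by checking for an `http://` or `https://` prefix is a simplifying assumption of this example; a real extractor knows the type of each object from its source.

```python
def is_uri(value):
    """Treat anything starting with http:// or https:// as a URI reference.

    This prefix check is a simplification made for this sketch.
    """
    return value.startswith(("http://", "https://"))


def to_ntriples(subject, predicate, obj):
    """Format one triple as an N-Triples line; literals are quoted and escaped."""
    if is_uri(obj):
        obj_term = f"<{obj}>"
    else:
        escaped = obj.replace("\\", "\\\\").replace('"', '\\"')
        obj_term = f'"{escaped}"'
    return f"<{subject}> <{predicate}> {obj_term} ."


# Three of the sample statements from the post.
SAMPLE_TRIPLES = [
    ("https://www.mkbergman.com/?p=355", "http://purl.org/dc/terms/created", "2007-04-16T19:33:50Z"),
    ("https://www.mkbergman.com/?p=355", "http://rdfs.org/sioc/ns#topic", "https://www.mkbergman.com/?cat=2"),
    ("http://en.wikipedia.org/wiki/SIOC", "http://www.w3.org/2000/01/rdf-schema#label", "SIOC"),
]

for triple in SAMPLE_TRIPLES:
    print(to_ntriples(*triple))
```

The date and the label come out as quoted literals, while the topic comes out as a bracketed URI that can itself be the subject of further triples.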

[Depending on provenance, source format, use of aliases, or other changes to make the display of triples more readable, it may at times be necessary to “dereference” what is displayed to obtain the URI values to trace or navigate the actual triple linkages. Dereferencing in this case means translating the displayed portion (the “reference”) of a triple to its actual value and storage location, which means providing its linkable URI value. Note that literals are already actual values and thus not “dereferenced”.]
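One common kind of alias is the prefixed name, where a short prefix stands in for a long namespace URI. The sketch below covers only that case: it expands a displayed name such as `dc:title` back to its full URI. The namespace URIs are the ones used in the sample statements; the short prefix names and the `expand` helper are assumptions of this example.

```python
# Prefix-to-namespace map. The namespace URIs appear in the sample
# statements; the short prefix names are a convention assumed here.
PREFIXES = {
    "dcterms": "http://purl.org/dc/terms/",
    "dc": "http://purl.org/dc/elements/1.1/",
    "sioc": "http://rdfs.org/sioc/ns#",
    "rdfs": "http://www.w3.org/2000/01/rdf-schema#",
}


def expand(term):
    """Expand a prefixed name such as 'dc:title' to its full URI.

    Full URIs and names with unknown prefixes are returned unchanged.
    """
    prefix, sep, local = term.partition(":")
    if sep and prefix in PREFIXES:
        return PREFIXES[prefix] + local
    return term


for displayed in ("dcterms:created", "sioc:topic", "rdfs:label"):
    print(displayed, "->", expand(displayed))
```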

The absolutely great thing about RDF is how well it lends itself through subsequent logic (not further discussed here) to map and mediate concepts from different sources into an unambiguous semantic representation [my ‘glad’ == (is the same as) your ‘happy’ OR my ‘glad’ is your ‘glad’]. Further, with additional structure (such as through RDF-S or the various dialects of OWL), drawing inferences and machine reasoning based on the data through more formal ontologies and description logics is also possible.
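The ‘glad’/‘happy’ mapping can be sketched without any reasoning machinery at all. Assuming a list of pairwise equivalence statements between terms (the `example.org` vocabularies below are invented), a small union-find pass assigns every term one canonical representative. This is a toy stand-in for what an ontology-based mediator would do, not a description of any particular tool.

```python
# Hypothetical vocabularies: each party's term URIs are invented for illustration.
SAME_AS = [
    ("http://example.org/mine#glad", "http://example.org/yours#happy"),
    ("http://example.org/yours#happy", "http://example.org/theirs#content"),
]


def build_canonical(pairs):
    """Map every term to one representative of its equivalence group (union-find)."""
    parent = {}

    def find(term):
        parent.setdefault(term, term)
        while parent[term] != term:
            parent[term] = parent[parent[term]]  # path halving
            term = parent[term]
        return term

    for left, right in pairs:
        root_left, root_right = find(left), find(right)
        if root_left != root_right:
            # Keep the lexicographically smaller URI as the representative.
            low, high = sorted((root_left, root_right))
            parent[high] = low

    return {term: find(term) for term in list(parent)}


CANONICAL = build_canonical(SAME_AS)
for term, representative in sorted(CANONICAL.items()):
    print(term, "->", representative)
```

Rewriting each source's triples through such a map before merging means that statements about ‘glad’ and statements about ‘happy’ land on the same node.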

How is This Structure Extracted?

The structure extraction necessary to construct an RDF “triple” is thus pivotal, and may require multiple steps. Depending on the nature of the starting content and the participation or not of the site publisher, there is a range of approaches.

Generally, the highest quality and richest structure occurs when the site publisher provides it. This can be done either through various APIs with a variety of data export formats, in which case various converters or translators to canonical RDF may be required by the consumers of that data, or in the direct provision of RDF itself. That is why the conversion of Wikipedia to RDF (done by DBpedia or System One with Wikipedia3) is so helpful.

I anticipate beyond Freebase that other sources, many public, will also become available as RDF or convertible with straightforward translators. We are at the cusp of a veritable explosion of such large-scale, high-quality RDF sources.

The next level of structure extractors are “RDFizers.” These extractors take other internal formats or metadata and convert them to RDF. Depending on the source, more or less structure may be extractable. For example, publishing a Web site with Dublin Core metadata or providing SIOC characterization for a blog (both of which I do for this blog site with available plugins, especially SIOC Plugin by Uldis Bojars or the Zotero COinS Metadata Exposer by Sean Takats), adds considerable structure automatically. For general listings of RDFizers, see my recent OpenLink review or the MIT Simile RDFizer site.
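To give a feel for what an RDFizer does, here is a minimal sketch that turns Dublin Core `<meta>` tags in a page head into triples about that page. It is not how the plugins or tools named above work internally; the `rdfize` function, the sample markup and the author name are all made up for this example, and only the `DC.`-prefixed `name` convention and the Dublin Core element namespace are taken as given.

```python
from html.parser import HTMLParser

DC_NS = "http://purl.org/dc/elements/1.1/"


class DublinCoreExtractor(HTMLParser):
    """Collect <meta name="DC.xxx" content="..."> tags as (predicate, value) pairs."""

    def __init__(self):
        super().__init__()
        self.pairs = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        name = attributes.get("name") or ""
        content = attributes.get("content")
        if name.lower().startswith("dc.") and content is not None:
            self.pairs.append((DC_NS + name[3:].lower(), content))


def rdfize(page_url, html):
    """Return triples whose subject is the page and whose predicates are DC elements."""
    extractor = DublinCoreExtractor()
    extractor.feed(html)
    return [(page_url, predicate, value) for predicate, value in extractor.pairs]


# Hypothetical page head; the meta tags are made up for this example.
SAMPLE_HTML = """
<html><head>
<meta name="DC.title" content="OpenLink Plugs the Gaps in the Structured Web">
<meta name="DC.creator" content="Example Author">
<meta name="viewport" content="width=device-width">
</head><body></body></html>
"""

DC_TRIPLES = rdfize("https://www.mkbergman.com/?p=355", SAMPLE_HTML)
for triple in DC_TRIPLES:
    print(triple)
```

The non-Dublin-Core `viewport` tag is ignored, which is the general pattern: an RDFizer can only surface the structure the source already carries.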

We next come to the general area of direct structure extraction, the least developed — but very exciting — area of gleaning RDF structure. There is a spectrum of challenges here.

At one end of the spectrum are documents or Web sources that are published much like regular data records. Like the ZoomInfo listing above or category-oriented sites such as Amazon, eBay or the Internet Movie Database (IMDb), information is already presented in a record format with labels and internal HTML structure useful for extraction purposes.

Most of the so-called Web “wrappers” or extractors (such as Zotero’s translators or the Solvent extractor associated with Simile’s Semantic Bank and Piggy Bank) evaluate a Web page’s internal DOM structure or use various regular expression filters and parsers to find and extract info from structure. It is in this manner, for example, that an ISBN or price can be readily extracted from an Amazon book catalog listing. In general, such tools rely heavily on the partial structure within semi-structured documents for such extractions.
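A bare-bones version of such a wrapper might look like the following. The catalog markup is invented (it is not Amazon's actual page structure), and the labels and patterns would need adapting for any real site; the point is only that labeled, record-like HTML makes regular-expression extraction straightforward.

```python
import re

# Hypothetical catalog markup; real sites use their own labels and layout,
# so the patterns below would need to be adapted per site.
LISTING_HTML = """
<div class="product">
  <h1>Example Book Title</h1>
  <ul>
    <li><b>ISBN-13:</b> 978-0-00-000000-2</li>
    <li><b>Price:</b> $24.95</li>
  </ul>
</div>
"""

TAG = re.compile(r"<[^>]+>")
ISBN = re.compile(r"ISBN(?:-1[03])?:\s*([0-9][0-9Xx-]{8,16})")
PRICE = re.compile(r"Price:\s*\$(\d+(?:\.\d{2})?)")


def extract_record(html):
    """Strip tags, then pull labeled fields out of the remaining text."""
    text = TAG.sub("", html)
    record = {}
    isbn = ISBN.search(text)
    if isbn:
        record["isbn"] = isbn.group(1).replace("-", "")
    price = PRICE.search(text)
    if price:
        record["price"] = float(price.group(1))
    return record


RECORD = extract_record(LISTING_HTML)
print(RECORD)
```

Each extracted field then maps naturally to a triple, with the listing's URL as subject and the field name as predicate.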

The most challenging type of direct structure extraction is from unstructured documents. Approaches here use a family of possible information extraction (IE) techniques including named entity extraction (proper names and places, for example), event detection, and other structural patterns such as zip codes, phone numbers or email addresses. These techniques are most often applied to standard text, but newer approaches have emerged for images, audio and video.
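The pattern-based end of this family is easy to sketch; named entity extraction and event detection are much harder and are not attempted here. The example below assumes US-style phone numbers and ZIP codes, uses deliberately simple regular expressions that will miss many real-world variants, and runs on an invented sentence.

```python
import re

# Deliberately simple patterns; they assume US-style formats and will miss
# many real-world variants.
PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "us_phone": re.compile(r"\(?\b\d{3}\)?[-. ]\d{3}[-. ]\d{4}\b"),
    "us_zip": re.compile(r"\b\d{5}(?:-\d{4})?\b"),
}


def extract_entities(text):
    """Return every pattern match as a (kind, matched_text) pair, in text order."""
    found = []
    for kind, pattern in PATTERNS.items():
        for match in pattern.finditer(text):
            found.append((match.start(), kind, match.group()))
    return [(kind, value) for _, kind, value in sorted(found)]


# Invented sample sentence for illustration.
SAMPLE_TEXT = (
    "Contact Jane Doe at jane.doe@example.org or (319) 555-0100. "
    "Mail goes to 123 Main St, Iowa City, IA 52240."
)

ENTITIES = extract_entities(SAMPLE_TEXT)
for kind, value in ENTITIES:
    print(kind, value)
```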

While IE is the least developed of all structural extraction approaches, recent work is showing that it can be done at scale with acceptable precision, and via semi-automated means. This is a key area of development with tremendous potential for payoff, since 80% to 85% of all content falls into this category.

Structure Extraction with OpenLink is a Snap

In the case of my own blog, I have a relatively well-known framework in WordPress with the two resident plugins noted above that do automatic metadata creation in the background. With this minimum of starting material, Kingsley was able to produce two RDF extractions from my blog post using OpenLink’s Virtuoso Sponger (see earlier post). Sponger added to the richness of the baseline RDF extraction by first mapping to the SIOC ontology, followed by mapping all tags via the SKOS (Simple Knowledge Organization System) ontology and all Web documents via the FOAF (Friend of a Friend) ontology.

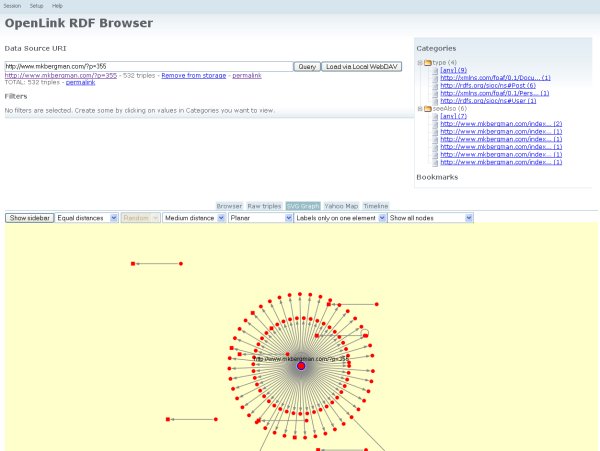

In the case of Kingsley’s first demo using the OpenLink RDF Browser Session (which gives a choice of browser, raw triples, SVG graph, Yahoo map or timeline views), you can do the same yourself for any URL with these steps:

- Go to http://demo.openlinksw.com/DAV/JS/rdfbrowser/index.html

- Enter the URL of your blog post or other page as the ‘Data Source URI’, then

- Go to Session | Save via the menu system or just click on permalink (which produces a bookmark-friendly URL that can then be kept permanently or shared with others).

It is that simple. You really should use the demo directly yourself.

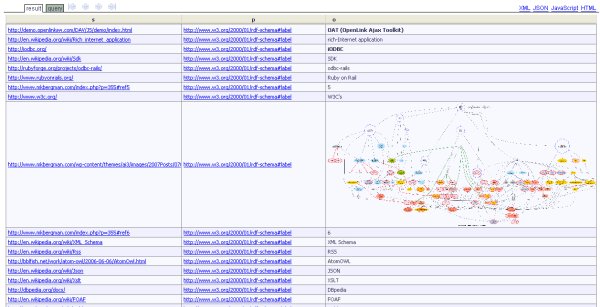

But here is the graph view for my blog post (note we are not really mashing up anything in this example, so the RDF graph structure has few external linkages, resulting in an expected star aspect with the subject resource of my blog post at the center):

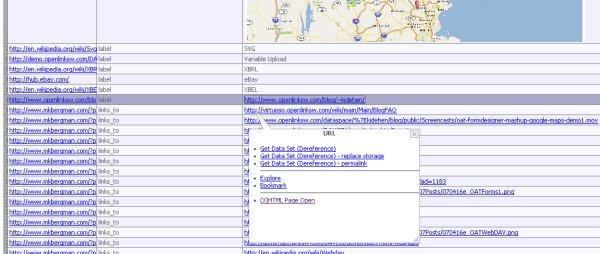

If you then switch to the raw triples view, and are actually working with the live demo, you can click on any URI link within a triple and get a JavaScript popup that gives you these further options:

The ‘Explore’ option on this popup enables you to navigate to that URI, which is often external, and then display its internal RDF triples rather than the normal page. In this manner, you can “skate” across the Web based on any of the linkages within the RDF graph model, navigating based on objects and their relationships and not documents.

The second example Kingsley provided for my write-up was the Dynamic Data Web Page. To create your own, follow these steps:

- Go to http://dbpedia.openlinksw.com:8890/isparql

- Enter your post URL as the Data Source URI

- Execute

- Click on the ‘Post URI’ in the results table, then

- Pick the ‘Dereference’ option (since the data is non-local; otherwise ‘Explore’ would suffice).

Again, it is that simple. Here is an example screenshot from this demo (a poor substitute for working with the live version):

This option also includes the ‘Explore’ popup.

These examples, plus other live demos frequently found on Kingsley’s blog (none of which requires more than your browser), show the power of RDF structuring and what can be done to view data and produce rich interrelationships “on the fly”.

Come, Join in the Fun

With the amount of RDF data now emerging, developments in viewing and posting such structure are coming quickly. Here are some options for you to join in the fun:

- Go ahead and test and view your own URLs using the OpenLink demo sites noted above

- Check out the new DBpedia faceted search browser created by Georgi Kobilarov, one of the founding members of the project. It will soon be announced; keep tabs on Georgi’s Web site

- And, review this post by Patrick Gosetti-Murrayjohn and Jim Groom on ways to use the structure and searching power of RDF to utilize tags on blogs. They combined RSS feeds from about 10 people with some SIOC data using RAP and Dave Beckett’s Triplr. This example shows how RSS feeds are themselves a rich source of structure.

Michael,

Much obliged for the kind mention! And once again you’ve offered up more reasons for excitement about structured web developments. Seeing the demos of how structured web approaches can make navigating data easier–even enjoyable!–will surely help new projects develop.

Thanks,

Patrick