Most of the Effort in Coding is in the Planning

With the environment in place, it is now time to plan the project underlying this Cooking with Python and KBpedia series. This installment formally begins Part II in our CWPK installments.

Recall from the outset that our major objectives of this initiative, besides learning Python and gaining scripts, were to manage and exploit the KBpedia knowledge graph, to expose its build and test procedures so that extensions or modifications to the baseline KBpedia may be possible by others, and to apply KBpedia to contemporary challenges in machine learning, artificial intelligence, and data interoperability. These broad objectives help to provide the organizational backbone to our plan.

We can thus see three main parts to our project. The first part deals with managing, querying, and using KBpedia as distributed. The second part emphasizes the logical build and testing regimes for the graph and how those may be applied to extensions or modifications. The last part covers a variety of advanced applications of KBpedia or its progeny. As we define the tasks in these parts of the plan, we will also identify possible gaps in our current environment that we will need to rectify for progress to continue. Some of these gaps we can identify now, so filling them will be among our most immediate tasks. Other gaps may only arise as we work through subsequent steps, and we will need to fill those as we encounter them. Lastly, in terms of scope, while we can term our last part on advanced applications ‘complete’ at some arbitrary number of applications, the truth is that applications are open-ended. We may continue to add to the roster of advanced applications as time and need allow.

Each of these new CWPK installments is available both as an online interactive file and as *.ipynb files. The MyBinder service we are using for the online interactive version maintains a Docker image for each project. Depending on how long it has been since someone last requested a CWPK interactive page, access may be rapid because the image is in cache, or it may take a bit of time to generate the image anew. We discuss this service more in CWPK #57.

Part I: Using and Managing KBpedia

Two immediate implications of the project plan arise as we begin to think it through. First, because of our learning and tech transfer objectives for the series, we have the opportunity to rely on the electronic notebook aspects of Jupyter to deliver on these objectives. We thus need to better understand how to mix narrative, working code, and interactivity in our Jupyter Notebook pages. Second, since we need to bridge between Python programs and a knowledge graph written in OWL, we will need some form of application programming interface (API) or bridge between these programmatic and semantic worlds. It, too, is a piece that needs to be put in place at the outset.

This additional foundation then enables us to tackle key use and management aspects for the KBpedia knowledge graph. First among these tasks are the so-called CRUD (create-read-update-delete) activities for the structural components of a knowledge graph:

- Add/delete/modify classes (concepts)

- Add/delete/modify individuals (instances)

- Add/delete/modify object properties

- Add/delete/modify data properties and values

- Add/delete/modify annotations.
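To make the CRUD activities listed above concrete, here is a minimal sketch using plain Python data structures. It is not an OWL API and not the approach this series will necessarily adopt; the `TinyGraph` class, its method names, and the sample entities are all hypothetical and serve only to show which references must be kept consistent when a class is added, modified, or deleted.

```python
from dataclasses import dataclass, field


@dataclass
class TinyGraph:
    """Hypothetical in-memory stand-in for a knowledge graph (not an OWL API)."""
    classes: dict = field(default_factory=dict)      # class name -> set of parent names
    individuals: dict = field(default_factory=dict)  # instance name -> class name
    annotations: dict = field(default_factory=dict)  # entity name -> {property: value}

    def add_class(self, name, parents=()):
        self.classes[name] = set(parents)

    def rename_class(self, old, new):
        # Modify: move the entry, then repoint every reference to the old name.
        self.classes[new] = self.classes.pop(old)
        for parents in self.classes.values():
            if old in parents:
                parents.discard(old)
                parents.add(new)
        for inst, cls in self.individuals.items():
            if cls == old:
                self.individuals[inst] = new
        if old in self.annotations:
            self.annotations[new] = self.annotations.pop(old)

    def delete_class(self, name):
        # Delete: refuse if a subclass or an individual still depends on the class.
        in_use = any(name in p for p in self.classes.values())
        if in_use or name in self.individuals.values():
            raise ValueError(f"{name} is still in use")
        del self.classes[name]
        self.annotations.pop(name, None)

    def add_individual(self, name, cls):
        if cls not in self.classes:
            raise KeyError(f"unknown class {cls}")
        self.individuals[name] = cls

    def annotate(self, entity, prop, value):
        self.annotations.setdefault(entity, {})[prop] = value


# Illustrative usage with made-up entities.
g = TinyGraph()
g.add_class("Animal")
g.add_class("Mammal", parents=["Animal"])
g.add_individual("Rex", "Mammal")
g.annotate("Mammal", "prefLabel", "Mammal")
g.rename_class("Mammal", "Mammalia")
```

Even in this toy form, a rename touches three places (the class table, the individuals, and the annotations), which is why a real ontology API is worth putting in place early.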

We also need to expand upon these basic management functions in areas such as:

- Advanced class specifications

- Advanced property specifications

- Multi-lingual annotations

- Load/save of ontologies (knowledge graphs)

- Copy/rename ontologies.

We also need to put in place means for querying KBpedia using the SPARQL query language. We can enhance these basics with a rules language, SWRL. Because our use of the knowledge graph involves feeding inputs to third-party machine learners and natural language processors, we need to add scripts for writing outputs to file in various formats. Finally, we want to cover some best practices and how we can package our scripts into reusable files and libraries.
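As a rough illustration of writing outputs in more than one format, the sketch below serializes the same rows to CSV and to JSON using only the standard library. The sample rows, the column names, and the helper names (`to_csv`, `to_json`, `write_text`) are assumptions made for the example; they do not reflect the formats any particular downstream tool requires.

```python
import csv
import io
import json

COLUMNS = ("subject", "predicate", "object")

# Hypothetical query results: (subject, predicate, object) rows.
rows = [
    ("Mammal", "subClassOf", "Animal"),
    ("Mammal", "prefLabel", "Mammal"),
]


def to_csv(rows):
    """Serialize rows as CSV text with a header line."""
    buf = io.StringIO(newline="")
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(COLUMNS)
    writer.writerows(rows)
    return buf.getvalue()


def to_json(rows):
    """Serialize rows as a JSON array of objects keyed by column name."""
    records = [dict(zip(COLUMNS, row)) for row in rows]
    return json.dumps(records, ensure_ascii=False, indent=2)


def write_text(path, text):
    """Write text to disk as UTF-8 without newline translation."""
    with open(path, "w", encoding="utf-8", newline="") as handle:
        handle.write(text)
```

Keeping serialization separate from file writing means the same functions can feed a file, a test, or another program.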

Part II: Building, Testing, and Extending the Knowledge Graph

Though KBpedia is certainly usable ‘as is’ for many tasks, importantly including as a common reference nexus for interoperating disparate data, maximum advantage arises when the knowledge graph encompasses the domain problem at hand. KBpedia is an excellent starting point for building such domain ontologies. By definition, the scope, breadth, and depth of a domain knowledge graph will differ from what is already in KBpedia. Some existing areas of KBpedia are likely not needed, others are missing, and connections and entity coverage will differ as well. This part of the project deals with building and logically testing the domain knowledge graph that morphs from the KBpedia starting point.

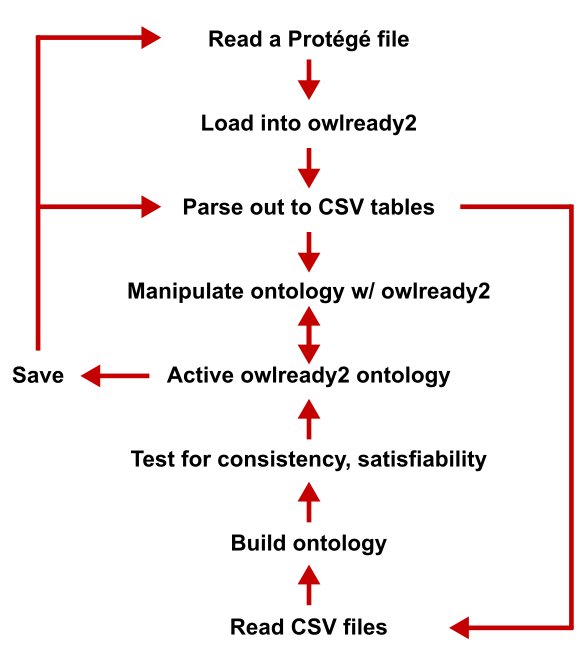

For years now we have built KBpedia from scratch based on a suite of canonically formatted CSV input files. These input files are written in a common UTF-8 encoding and duplicate the kind of tuples found in an N3 (Notation3) RDF/OWL file. As a build progresses through its steps, various consistency and logical tests are applied to ensure the coherence of the built graph. Builds that fail these tests are error flagged, which requires fixes to the input files, before the build can resume and progress to completion. The knowledge graph that passes these logical tests might be used or altered by third-party tools, prominently including Protégé, during the use of and interaction with the graph. We thus also need methods for extracting out the build files from an existing knowledge graph in order to feed the build process anew. These various workflows between graph and build scripts and tools is shown by Figure 1:
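To show what it means for CSV rows to duplicate the tuples of an RDF file, here is a small sketch that turns three-column rows into simple triple statements. The column layout, the `http://example.org/kb#` namespace, and the `PREDICATES` lookup table are assumptions for illustration only and are not the actual KBpedia build file format.

```python
import csv
import io

KB = "http://example.org/kb#"  # hypothetical namespace, not KBpedia's
RDFS = "http://www.w3.org/2000/01/rdf-schema#"
SKOS = "http://www.w3.org/2004/02/skos/core#"

# Short predicate name -> (full IRI, kind of object it takes).
PREDICATES = {
    "subClassOf": (RDFS + "subClassOf", "iri"),
    "prefLabel": (SKOS + "prefLabel", "literal"),
}


def escape_literal(text):
    """Escape backslashes, quotes, and newlines for a quoted literal."""
    return text.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def rows_to_statements(csv_text):
    """Yield one 'subject predicate object .' statement per CSV row."""
    for subject, predicate, obj in csv.reader(io.StringIO(csv_text)):
        iri, kind = PREDICATES[predicate]
        if kind == "iri":
            obj_term = f"<{KB}{obj}>"
        else:
            obj_term = f'"{escape_literal(obj)}"'
        yield f"<{KB}{subject}> <{iri}> {obj_term} ."


sample = 'Mammal,subClassOf,Animal\nMammal,prefLabel,"Mammal, class"\n'
statements = list(rows_to_statements(sample))
```

Each CSV row maps to exactly one statement, which is what makes a flat file workable as the transfer form in both directions.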

This part of the plan will address all steps in this workflow. The use of CSV flat files as the canonical transfer form between the applications also means we need to have syntax and encoding checks in the process. Many of the instructions in this part deal with good practices for debugging and fixing inconsistent or unsatisfied graphs. At least as we have managed KBpedia to date, every new coherent release requires multiple build iterations until the errors are found and corrected. (This area has potential for more automation.)
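The syntax and encoding checks mentioned above might look something like the following sketch, which verifies that a build file decodes as UTF-8 and that every row has the expected number of non-empty fields. The function name `check_build_file` and the three-column default are hypothetical; real build files may well need different or additional checks.

```python
import csv
import io


def check_build_file(raw: bytes, expected_columns: int = 3):
    """Return a list of (line_number, message) problems; empty means the file passed."""
    try:
        text = raw.decode("utf-8")
    except UnicodeDecodeError as err:
        # Line 0 signals a whole-file problem rather than a specific row.
        return [(0, f"not valid UTF-8 at byte {err.start}")]

    problems = []
    reader = csv.reader(io.StringIO(text, newline=""))
    for row in reader:
        if len(row) != expected_columns:
            message = f"expected {expected_columns} fields, found {len(row)}"
            problems.append((reader.line_num, message))
        elif any(not cell.strip() for cell in row):
            problems.append((reader.line_num, "empty field"))
    return problems
```

Running a check like this before the logical tests keeps simple formatting mistakes from being reported as graph inconsistencies.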

We will also spend time on the modular design of the KBpedia knowledge graph and the role of (potentially disjoint) typologies to organize and manage the entities represented by the graph. Here, too, we may want to modify individual typologies or add or delete entire ones in transitioning the baseline KBpedia to a responsive domain graph. We thus provide additional installments focused solely on typology construction, modification, and extension. Use and mapping of external sources is essential in this process, but is never cookie-cutter in nature. Having some general scripts available plus knowledge of creating new relevant Python scripts is most helpful to accommodate the diversity found in the wild. Fortunately, we have existing Clojure code for most of these components so that our planning efforts amount more to a refactoring of an existing code base into another language. Hopefully, we will also be able to improve a bit on these existing scripts.
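One simple test that follows from organizing entities into (potentially disjoint) typologies is checking that no entity sits in two typologies declared disjoint. The sketch below does this with set intersection. The typology names, their members, and the `find_overlaps` helper are invented for illustration and are not drawn from KBpedia itself.

```python
def find_overlaps(typologies, disjoint_pairs):
    """Return {(a, b): shared entities} for each declared-disjoint pair that overlaps.

    typologies: mapping of typology name -> set of member entities
    disjoint_pairs: iterable of (name, name) pairs asserted to be disjoint
    """
    overlaps = {}
    for a, b in disjoint_pairs:
        shared = typologies[a] & typologies[b]
        if shared:
            overlaps[(a, b)] = shared
    return overlaps


# Hypothetical typologies and disjointness assertions.
typologies = {
    "Animals": {"Dog", "Cat", "Salmon"},
    "Plants": {"Oak", "Fern"},
    "FoodDrink": {"Salmon", "Bread"},
}
disjoint_pairs = [("Animals", "Plants"), ("Animals", "FoodDrink")]

violations = find_overlaps(typologies, disjoint_pairs)
```

Whether an overlap like the one found here is an error or a sign that the two typologies should not be declared disjoint is a modeling decision, not something the script can settle.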

Part III: Advanced Applications

Having full control of the knowledge graph, plus a working toolchest of applications and scripts, is a firm basis for using the now-tailored knowledge graph for machine learning and other advanced applications. The plan here is less clear than for the prior two parts, though we have documented existing use cases with code to draw upon. Major installments in this part are likely to cover creating machine learning training sets, creating corpora for unsupervised training, generating various types (word, statement, graph) of embedding models, selecting and generating sub-graphs, mapping external vocabularies, categorization, and natural language processing.

Lastly, we reserve a task in this plan for setting up the knowledge graph on a remote server and creating access endpoints. This task is likely to occur at the transition between Parts II and III, though it may prove opportune to do it at other steps along the way.
