Refining Plans and Directories to Complete the Roundtrip

This installment begins a new major part in our Cooking with Python and KBpedia series. Starting with the format of our extraction files, which can come directly from our prior extraction routines or from other local editing or development efforts, we are now in a position to build a working KBpedia from scratch, and then to test it for consistency and satisfiability. This major part, of all parts in this CWPK series, is the one that most closely reflects our traditional build routines of KBpedia using Clojure.

But this current part is also about more than completing our roundtrip back to KBpedia. For, in bringing new assignments to our knowledge graph, we must also test it to ensure it is encoded properly and that it performs as promised. These additional requirements also mean we will be developing more than a build routine module in this part. The way we are structuring this effort will also add a testing module and a cleaning module (for checking encodings and the like). There are a dozen installments, including this one, in this part to cover this ground.

The addition of more modules, with the plan of still more to come thereafter, also compels us to look at how we are architecting our code and laying out our files. Thus, besides code development, we need to pay attention to organizational matters as well.

Starting a New Major Part

I am pretty pleased with how the cowpoke extraction module turned out, so I will be following something of the same pattern to build KBpedia in this part. Since we made the early call to bootstrap our project from the small, core top-level KBpedia Knowledge Ontology (KKO), we gained a lot of simplification. That is a good trade-off, since KKO itself is a value-neutral top-level ontology built from the semiotic perspective of C.S. Peirce regarding knowledge representation. Our basic design can also be adapted to any other top-level ontology (TLO). If that is your desire, how to bring in a different TLO is up to you to figure out, though I hope that would be pretty easy following the recipes in these CWPK installments.

Fortunately, as we make the turn to build routines in this part of the roundtrip, we are walking ground that we have been traveling for nearly a decade. We understand the build process and we understand the testing and acceptance criteria necessary to produce quality, publicly released knowledge graphs. We try to bring these learnings to our functions in this part.

But as I caution at the bottom of each of these installments, I am learning Python myself through this process. I am by no means a knowledgeable programmer, let alone an expert. I am an interested amateur who has had the good fortune to have worked with some of the best developers imaginable, and I only hope I picked up little bits of some good things here and there about how to approach a coding project. Chances are great you can improve on the code offered. I also, unfortunately, do not have the coding experience to provide commercial-grade code. Errors that should be trapped are likely not, cases that need to be accommodated are likely missed, and generalization and code readability are likely not what they could be. I’ll take that, if the result is to help others walk these paths at a better and brisker pace. Again, I hope you enjoy . . .

Organizing Objectives

I have learned some common-sense lessons through the years about how to approach a software project. One of those lessons, obvious through this series itself, is captured by John Bytheway’s quote, “Inch by inch, life’s a cinch. Yard by yard, life’s hard.” Knowing where you want to go and taking bite-sized chunks to get there almost always leads to some degree of success if you are willing to stick with the journey.

Another lesson is to conform to community practice. In the case of Python (and most modern languages, I assume), applications need to be ‘packaged’ in certain ways such that they can be readily brought into the current computing environment. From the source-code perspective, this means conforming to the ‘package’ standard and the organization of code into importable modules. All of this suggests a code base that is kept separate from any project that uses it, and organized and packaged in a way similar to other applications in that language.

An interesting lesson about knowledge graphs is that they are constantly changing — and need to do so. In this regard, knowledge artifacts are not terribly different than software artifacts. Both need to be updated frequently such that versioning and version control are essential. Versioning tells users the basis for the artifact; version control helps to maintain the versions and track differences between releases. Wherever we decide to store our artifacts, they should be organized and packaged such that different versions may be kept integral. Thus, we organize our project results under version number labels.

Then, in terms of this particular project where roundtripping is central and many outputs are driven from the KBpedia knowledge structure, I also thought it made sense to establish separate tracks between inputs (the ‘build’ side) and outputs (the ‘extraction’ side) and to seek parallelisms between the tracks where it makes sense. This informs much of the modular architecture put forward.

All of this needs to be supplemented with utilities for testing, logging, error trapping and messaging, and statistics. These all occur at the time of build or use, and so belong to this leg of our roundtrip. We will not develop code for all of these aspects in this part of the CWPK series, but we will try to organize in anticipation of these requirements for a complete project. We’ll fill in many of those pieces in major parts to come.

Anticipated Directory Structures

These considerations lead to two different directory structures. The first is where the source code resides, and is in keeping with typical approaches to Python packages. Two modules are in-progress, at least at the design level, to complete our roundtripping per the objectives noted above. Two modules in logging and statistics are likely to get started in this current part. And another three are anticipated for efforts with KBpedia to come before we complete this CWPK series. Here is that updated directory structure, with these new modules flagged in the trailing comments:

|-- PythonProject
    |-- Python
        |-- [Anaconda3 distribution]
        |-- Lib
            |-- site-packages
                |-- [many]
                |-- cowpoke
                    |-- __init__.py
                    |-- __main__.py
                    |-- analytics.py     # anticipated new module
                    |-- build.py         # in-progress module
                    |-- clean.py         # in-progress module
                    |-- config.py
                    |-- embeddings.py    # anticipated new module
                    |-- extract.py
                    |-- graphics.py      # anticipated new module
                    |-- logs.py          # likely new module
                    |-- stats.py         # likely new module
                    |-- utils.py         # likely new module
                |-- More
    |-- More

In contrast, we need a different directory structure to host our KBpedia project. Inputs to building KBpedia (‘build_ins’) are in one main branch, the results of a build (‘targets’) are in another, ‘extractions’ from the current version are in a third, and a fourth (‘outputs’) holds the results of post-build use of the knowledge graph. Further, these four main branches are themselves listed under their respective version number. This design means individual versions may be readily zipped or shared on GitHub in a versioned repository.

(NB: The ‘sandbox’ directory below we have referenced many times in this CWPK series, and is unique to it. It houses some of the example starting files needed for this series. We will continue to use the ‘sandbox’ as one of our main directory options.)

As of this current stage in our work, here then is how the project-related directory structures currently look for KBpedia:

|-- PythonProject
    |-- kbpedia
        |-- sandbox
        |-- v250
            |-- etc.
        |-- v300
            |-- build_ins
                |-- classes
                    |-- classes_struct.csv
                    |-- classes_annot.csv
                |-- fixes
                    |-- TBD
                    |-- TBD
                |-- mappings
                    |-- etc.
                    |-- etc.
                |-- ontologies
                    |-- kbpedia-reference-concepts.owl
                    |-- kko.owl
                |-- properties
                    |-- annotation_properties
                    |-- data_properties
                    |-- object_properties
                |-- stubs
                    |-- kbpedia-reference-concepts.owl
                    |-- kko.owl
                |-- typologies
                    |-- ActionTypes
                    |-- Agents
                    |-- etc.
                |-- working
                    |-- TBD
                    |-- TBD
            |-- extractions
                |-- classes
                    |-- classes_struct.csv
                    |-- classes_annot.csv
                |-- mappings
                    |-- etc.
                    |-- etc.
                |-- properties
                    |-- annotation_properties
                    |-- data_properties
                    |-- object_properties
                |-- typologies
                    |-- ActionTypes
                    |-- Agents
                    |-- etc.
            |-- outputs
                |-- analytics
                    |-- TBD
                    |-- TBD
                |-- embeddings
                    |-- TBD
                    |-- TBD
                |-- training_sets
                    |-- TBD
                    |-- TBD
            |-- targets
                |-- logs
                    |-- TBD
                    |-- TBD
                |-- mappings
                    |-- etc.
                    |-- etc.
                |-- ontologies
                    |-- kbpedia-reference-concepts.owl
                    |-- kko.owl
                |-- stats
                    |-- TBD
                    |-- TBD
                |-- typologies
                    |-- ActionTypes
                    |-- Agents
                    |-- etc.
    |-- Python
        |-- etc.
        |-- etc.
    |-- More

You will notice that nearly all ‘extractions’ categories are in the ‘build’ categories as well, reflecting the roundtrip nature of the design. Some of the output categories remain a bit speculative. This area is likely the one to see further refinement as we proceed.
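For readers who want to stand up this layout on their own machine, here is a minimal sketch of how the version-labeled branches might be created with Python's standard `pathlib`. It is not part of cowpoke: the `LAYOUT` dictionary and the two function names are my own hypothetical helpers, and the category names are simply copied from the listing above. The second function lists which ‘build_ins’ categories have no counterpart under ‘extractions’, which is one quick way to see the roundtrip parallelism.

```python
from pathlib import Path
import tempfile

# Hypothetical constant: the sub-directories under each main branch,
# transcribed from the directory listing in this installment.
LAYOUT = {
    "build_ins": ["classes", "fixes", "mappings", "ontologies",
                  "properties", "stubs", "typologies", "working"],
    "extractions": ["classes", "mappings", "properties", "typologies"],
    "outputs": ["analytics", "embeddings", "training_sets"],
    "targets": ["logs", "mappings", "ontologies", "stats", "typologies"],
}


def make_version_tree(root, version):
    """Create the empty branch/category directories for one version label."""
    version_dir = Path(root) / "kbpedia" / version
    for branch, categories in LAYOUT.items():
        for category in categories:
            # parents=True builds the intermediate directories as needed;
            # exist_ok=True makes the call safe to repeat.
            (version_dir / branch / category).mkdir(parents=True, exist_ok=True)
    return version_dir


def build_only_categories():
    """Return 'build_ins' categories that have no 'extractions' twin."""
    return sorted(set(LAYOUT["build_ins"]) - set(LAYOUT["extractions"]))


# Demonstrate in a throwaway directory so nothing real is touched.
with tempfile.TemporaryDirectory() as tmp:
    created = make_version_tree(tmp, "v300")
    print(sorted(p.name for p in created.iterdir()))

print(build_only_categories())
```

In practice you would pass your own project root (the ‘PythonProject’ directory in the listing) rather than a temporary directory.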

Some of the directories shown, such as ‘analytics’, ‘embeddings’, ‘mappings’, and ‘training_sets’, are placeholders for efforts to come. One directory, ‘working’, is a standard one we have adopted over the years to place all of the background working files (some of an intermediate nature leading to the formal build inputs) in one location. Thus, as we progress version-to-version, we can look to this directory to help remind us of the primary activities and changes that were integral to that particular build. When it comes time for a public release, we may remove some of these working or intermediate directories from what is published at GitHub, but we retain this record locally to help document our prior work.

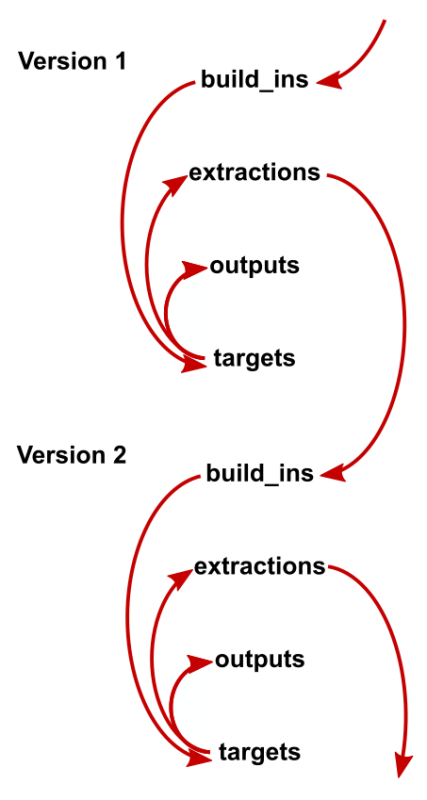

Overall, then, what we have is a build that begins with extractions from a prior build or starting raw files, with those files being modified within the ‘build_ins’ directory during development. Once the build input files are ready, the build processes are initiated to write the new knowledge graph to the ‘targets’ directory. Once the build has met all logic and build tests, it is then the source for new ‘extractions’ to be used in a subsequent build, or to conduct analysis or staging of results for other ‘outputs’. Figure 3 is an illustration of this workflow:

A new version thus starts by copying the directory structure to a new version branch, and copying over the extractions and stubs from the prior version.
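That version hand-off could be scripted along the following lines. This is only a sketch under stated assumptions, not a cowpoke function: the name `start_new_version` is hypothetical, and I am assuming that the prior version's ‘extractions’ are copied into the new version's ‘build_ins’ branch (where they would then be edited), while the stubs move from the prior ‘build_ins/stubs’ to the new one. Adjust the destinations if your own practice differs.

```python
import shutil
from pathlib import Path


def start_new_version(kbpedia_dir, prior, new):
    """Seed a new version branch from a prior one (hypothetical helper)."""
    kbpedia_dir = Path(kbpedia_dir)
    prior_dir = kbpedia_dir / prior
    new_dir = kbpedia_dir / new
    if new_dir.exists():
        raise FileExistsError(f"{new_dir} already exists")

    # Step 1: replicate the directory structure only, leaving files behind.
    new_dir.mkdir(parents=True)
    for path in sorted(prior_dir.rglob("*")):
        if path.is_dir():
            (new_dir / path.relative_to(prior_dir)).mkdir(parents=True,
                                                          exist_ok=True)

    # Step 2 (assumption): prior extractions become the new build inputs.
    prior_extractions = prior_dir / "extractions"
    if prior_extractions.is_dir():
        shutil.copytree(prior_extractions, new_dir / "build_ins",
                        dirs_exist_ok=True)  # requires Python 3.8+

    # Step 3: carry the stub ontologies forward.
    prior_stubs = prior_dir / "build_ins" / "stubs"
    if prior_stubs.is_dir():
        shutil.copytree(prior_stubs, new_dir / "build_ins" / "stubs",
                        dirs_exist_ok=True)
    return new_dir
```

A call such as `start_new_version("PythonProject/kbpedia", "v250", "v300")` would leave the ‘targets’ and ‘outputs’ branches of the new version empty, ready to be filled by the build and by later use of the graph.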

We now have the framework for moving on to the next installment in our CWPK series, wherein we begin the return leg of the roundtrip, what is shown as the ‘build_ins’ → ‘targets’ path in Figure 3.
