Trying to Overcome Some Performance Problems and Extend into Property Structure

Up until the last installment in this Cooking with Python and KBpedia series, everything we did performed quickly in our interactive tests. However, we have encountered some bottlenecks with our full build routines. If we use the single Generals typology (under which all other KBpedia typologies reside, and which includes all class structure definitions), the full build requires 70 minutes! Worse, if we actually loop over all of the constituent typology files (and exclude the Generals typology), the full build requires 620 minutes! Wow, that is unacceptable. Fortunately, we do not need to loop over all typology files, but this poor performance demands some better ways to approach things.

So, as we continue our detour to a full structure build, I wanted to test some pretty quick options. I also thought some of these tests have use in their own right apart from these performance questions. Tests with broader usefulness we will add to a new utils module in cowpoke. Some of the tests we will look at include:

- Adding a memory add-in to Jupyter Notebook

- Using a sqlite3 datastore rather than working entirely in-memory

- Saving and reloading between passes

- Removing duplicates in our input build files

- Creating a unique union of class specifications across typologies, or

- Some other ideas that we are deferring for now.

After we tweak the system based on these tests, we resume our structure building quest, now including KBpedia properties, to complete today’s CWPK installment.

Standard Start-up

We invoke our standard start-up functions. We have updated our ‘full’ switch to ‘start’, and have cleaned out some earlier initializations that were not actually needed:

from owlready2 import *
from cowpoke.config import *
# from cowpoke.__main__ import *
import csv
import types

world = World()

kb_src = every_deck.get('kb_src')  # we get the build setting from config.py
#kb_src = 'standard'               # we can also do quick tests with an override

if kb_src is None:
    kb_src = 'standard'
if kb_src == 'sandbox':
    kbpedia = 'C:/1-PythonProjects/kbpedia/sandbox/kbpedia_reference_concepts.owl'
    kko_file = 'C:/1-PythonProjects/kbpedia/sandbox/kko.owl'
elif kb_src == 'standard':
    kbpedia = 'C:/1-PythonProjects/kbpedia/v300/targets/ontologies/kbpedia_reference_concepts.owl'
    kko_file = 'C:/1-PythonProjects/kbpedia/v300/build_ins/stubs/kko.owl'
elif kb_src == 'start':
    kbpedia = 'C:/1-PythonProjects/kbpedia/v300/build_ins/stubs/kbpedia_rc_stub.owl'
    kko_file = 'C:/1-PythonProjects/kbpedia/v300/build_ins/stubs/kko.owl'
else:
    print('You have entered an inaccurate source parameter for the build.')
skos_file = 'http://www.w3.org/2004/02/skos/core'

(Note: As noted earlier, when we move these kb_src build instructions to a module, we also will add another ‘extract’ option and add back in the cowpoke.__main__ import statement.)

kb = world.get_ontology(kbpedia).load()

rc = kb.get_namespace('http://kbpedia.org/kko/rc/')

#skos = world.get_ontology(skos_file).load()

#kb.imported_ontologies.append(skos)

#core = world.get_namespace('http://www.w3.org/2004/02/skos/core#')

kko = world.get_ontology(kko_file).load()

kb.imported_ontologies.append(kko)

kko = kb.get_namespace('http://kbpedia.org/ontologies/kko#')

Just to make sure we have loaded everything necessary, we can test for whether one of the superclasses for properties is there:

print(kko.eventuals)

kko.eventuals

Some Performance Improvement Tests

To cut to the bottom line, if you want to do a full build of KBpedia (as you may have configured it), the fastest approach is to use the Generals typology, followed by whatever change supplements you may have. (See this and later installments.) As we have noted, a full class build will take about 70 minutes on a conventional desktop using the Generals typology.

However, not all builds are full ones, and in trying to improve on performance we have derived a number of utility functions that may be useful in multiple areas. I detail the performance tests, the routines associated with them, and the code developed for them in this section. Note that most of these tests have been placed into the utils module of cowpoke.

Notebook Memory Monitor

I did some online investigations of memory settings for Python, which, as I previously reported, are apparently not readily settable and not really required. I did not devote huge research time, but I was pleased to see that Python has a reputation for grabbing as much memory as it needs up to local limits, and also apparently for releasing memory and doing fair garbage clean-up. There are some modules to expose more and give more control, but my sense is that altering Python directly was not a productive path for the KBpedia project.

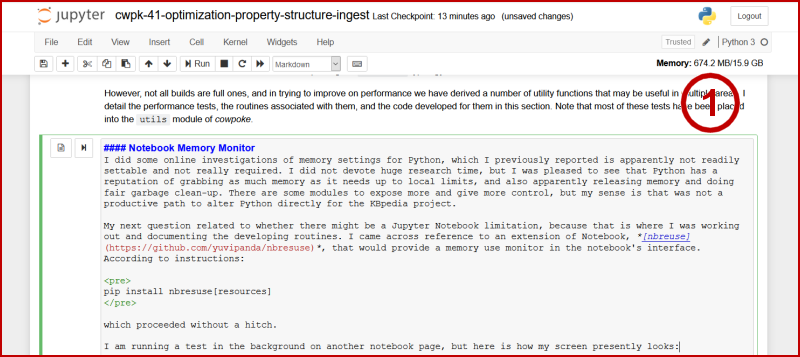

My next question related to whether there might be a Jupyter Notebook limitation, because that is where I was working out and documenting the developing routines. I came across a reference to an extension of Notebook, nbresuse, that provides a memory use monitor in the notebook’s interface. According to instructions:

pip install nbresuse[resources]

which proceeded without a hitch.

I am running a test in the background on another notebook page, but here is how my screen presently looks:

When I first start an interactive session with KBpedia the memory demand is about 150 MB. Most processes demand about 500 MB, and rarely do I see a value over 1 GB, all well within my current limits (16 GB of RAM, with perhaps as much as 8 GB available). So, I have ruled out an internal notebook issue, though I have chosen to keep the extension installed because I like the memory use feedback.
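As a rough in-code complement to the notebook monitor, Python’s standard tracemalloc module can report the peak memory that Python-level allocations reach around a block of work. What follows is only a minimal sketch and not part of cowpoke: the helper name measure_peak and the sample workload build_rows are hypothetical, and tracemalloc only sees allocations made through Python’s own allocator, so its figures will generally be lower than the whole-process totals shown by nbresuse.

```python
import tracemalloc


def measure_peak(func, *args, **kwargs):
    """Run func and return (result, peak_bytes) for Python-level allocations."""
    tracemalloc.start()
    try:
        result = func(*args, **kwargs)
        _, peak = tracemalloc.get_traced_memory()  # (current, peak) in bytes
    finally:
        tracemalloc.stop()
    return result, peak


def build_rows(n):
    # Hypothetical stand-in for a memory-hungry build step.
    return [('rc.Item%d' % i, 'subClassOf', 'kko.Generals') for i in range(n)]


rows, peak = measure_peak(build_rows, 10000)
print('rows:', len(rows), '| peak MB: %.2f' % (peak / 1e6))
```

Wrapping a single pass of a build routine this way gives a number to compare between runs, which is handy when the notebook display is changing too quickly to read.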

Using a Persistent Datastore

One observation from owlready2’s developer, Jean-Baptiste Lamy, is that it sometimes speeds up some operations for larger knowledge graphs when the ontology is made persistent. Normally, owlready2 tries to keep the entire graph in memory. One makes an ontology persistent by calling the native owlready2 datastore, sqlite3, and then relates it (in an unencumbered sense, which is our circumstance) to the global (‘default_world‘) namespace:

default_world.set_backend(filename='C:/1-PythonProjects/kbpedia/v300/build_ins/working/kb_test.sqlite3')

This is a good command to remember and does indeed save the state, but I did not notice any improvements to the specific KBpedia load times.

Saving Files Between Passes

The class_struct_builder function presented in the prior CWPK installment splits nicely into three passes: define the new class; add parents to the class; then remove the redundant rdfs:subClassOf owl:Thing assignment. I decided to open and close files between passes to see if perhaps a poor memory garbage clean-up or other memory issue was at play.

We do see that larger memory models cause a slowdown in performance after some apparent internal limit, as witnessed when the very large typologies cross some performance threshold. Unfortunately, opening and closing files between passes had no notable effect on processing times.

Duplicates Removal

A simple cursory inspection of an extracted ontology file indicates multiple repeat rows (triple statements). If we are seeing longer than desired load times, why not reduce the overall total number of rows that need to be processed? Further, it would be nice, anyway, to have a general utility for reducing duplicate rows.

There are many ways one might approach such a problem with Python, but the method that appealed most to me is a really simple one: read the rows in one at a time. We define a list, newrows = [], to hold each ingested row (taken in its entirety as a complete triple statement), and then check whether the row has been ingested before or not. That’s it!

We embed these simple commands in the looping framework we developed in the last installment:

def dup_remover(**build_deck):
    print('Beginning duplicate remover routine . . .')
    loop_list = build_deck.get('loop_list')
    loop = build_deck.get('loop')
    base = build_deck.get('base')
    base_out = build_deck.get('base_out')
    ext = build_deck.get('ext')
    for loopval in loop_list:
        print(' . . . removing dups in', loopval)
        frag = loopval.replace('kko.','')
        in_file = (base + frag + ext)
        newrows = []  # set list to empty
        with open(in_file, 'r', encoding='utf8') as input:
            is_first_row = True
            reader = csv.DictReader(input, delimiter=',', fieldnames=['id', 'subClassOf', 'parent'])
            for row in reader:
                if row not in newrows:  # if row is new, then:
                    newrows.append(row)  # add it!
        out_file = (base_out + frag + ext)
        with open(out_file, 'w', encoding='utf8', newline='') as output:
            is_first_row = True
            writer = csv.DictWriter(output, delimiter=',', fieldnames=['id', 'subClassOf', 'parent'])
            writer.writerows(newrows)
    print('File dup removals now complete.')

Again, if your environment is set up right (and pay attention to the new settings in config.py!), you can run:

dup_remover(**build_deck)

The dup_remover function takes about 1 hour to run on a conventional desktop cycling across all available typologies. The few largest typologies take the bulk of the time. More than half of the smallest typologies run in under a minute. This profile shows that performance screams below some memory threshold, but that larger sets (as we have seen elsewhere) require much longer periods of time. Two of the largest typologies, Generals and Manifestations, each take about 8 minutes to run.

(For just occasional use, this looks acceptable to me. If it continues to be too lengthy, my next test would be to ingest the rows as set members. Members of a Python set are unique, must be hashable (in practice, immutable) values, and are hashed for faster lookup. You can’t use this approach if maintaining row order is important, but in our case it does not matter in what order our class structure triples are ingested. If I refactor this function, I will first try this approach.)
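To make the set idea concrete, here is a minimal sketch of how such a refactoring might look. It is not the cowpoke routine: the helper name unique_rows and the sample class names are hypothetical, and it reads rows with csv.reader (which yields lists that convert cleanly to hashable tuples) rather than with the DictReader used above.

```python
import csv
import io


def unique_rows(lines):
    """Return the distinct CSV rows from an iterable of lines as a set of tuples."""
    reader = csv.reader(lines, delimiter=',')
    # Tuples are hashable, so the set discards repeats on insertion.
    return {tuple(row) for row in reader if row}


# Hypothetical sample input with one repeated triple.
sample = io.StringIO(
    'rc.Mammal,subClassOf,kko.Animals\n'
    'rc.Bird,subClassOf,kko.Animals\n'
    'rc.Mammal,subClassOf,kko.Animals\n'
)
deduped = unique_rows(sample)

# Sets are unordered, so sort on output if a stable file is wanted.
out = io.StringIO()
csv.writer(out, lineterminator='\n').writerows(sorted(deduped))
print(out.getvalue())
```

The membership test against a list scans every prior row, whereas a set lookup is a hash probe, which is why this form should scale better on the largest typologies. I have not timed it against the KBpedia files.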

These runs across all KBpedia typologies show that nearly 12% of all rows across the files are duplicate ones. Because of the lag in performance at larger sizes, removal of duplicates probably makes best sense for the largest typologies, and ones you expect to use multiple times, in order to justify the upfront time to remove duplicates.

We will place this routine in the utils module.

Unions and Intersections Across Typologies

In the same vein as removing duplicates within a typology, as our example just above did, we can also look to remove duplicates across a group of typologies. By using the set notation just discussed, we can also do intersections or other set operations. These kinds of operations have applications beyond duplicate checking down the road.

It is also the case that I can do a cross-check against the descendants in the Generals typology (see CWPK #28 for a discussion of .descendants()). While I assume this typology contains all of the classes and parental definitions in KBpedia outside of KKO (and it should!), I can do a union across all of the other typologies and check whether it actually does.

So, with these arguments suggesting the worth of a general routine, we again pick up on our looping construct, and do both unions and intersections across an input deck of typologies. Because it is a bit simpler, we begin with unions:

def set_union(**build_deck):
    print('Beginning set union routine . . .')  # Note 1
    loop_list = build_deck.get('loop_list')
    loop = build_deck.get('loop')
    base = build_deck.get('base')
    short_base = build_deck.get('short_base')
    base_out = build_deck.get('base_out')
    ext = build_deck.get('ext')
    f_union = (short_base + 'union' + ext)
    filetemp = open(f_union, 'w+', encoding='utf8')  # Note 2
    filetemp.truncate(0)
    filetemp.close()
    input_rows = []
    union_rows = []
    first_pass = 0  # Note 3
    for loopval in loop_list:
        print(' . . . evaluating', loopval, 'using set union operations . . .')
        frag = loopval.replace('kko.','')
        f_input = (base + frag + ext)
        with open(f_input, 'r', encoding='utf8') as input_f:  # Note 4
            is_first_row = True
            reader = csv.DictReader(input_f, delimiter=',', fieldnames=['id', 'subClassOf', 'parent'])
            for row in reader:
                if row not in input_rows:
                    input_rows.append(row)
            if first_pass == 0:  # Note 3
                union_rows = input_rows
        with open(f_union, 'r', encoding='utf8', newline='') as union_f:  # Note 5
            is_first_row = True
            reader = csv.DictReader(union_f, delimiter=',', fieldnames=['id', 'subClassOf', 'parent'])
            for row in reader:
                if row not in union_rows:
                    if row not in input_rows:
                        union_rows.append(row)
            union_rows = input_rows + union_rows  # Note 6
        with open(f_union, 'w', encoding='utf8', newline='') as union_f:
            is_first_row = True
            u_writer = csv.DictWriter(union_f, delimiter=',', fieldnames=['id', 'subClassOf', 'parent'])
            u_writer.writerows(union_rows)  # Note 5
        first_pass = 1
    print('Set union operation now complete.')

Assuming your system is properly configured and you have run the start-up routines above, you can now run the function (again passing the build settings from config.py):

set_union(**build_deck)

The beginning of this routine (1) is patterned after some of our prior routines. We do need to add creating an empty file or clearing out the prior one (‘union’) as we start the routine (2). We give it the mode of ‘w+’ because we may either be writing (creating) or reading it, depending on prior state. We also need to set a flag (3) so that we populate our first pass with the contents of the first file (since it is a union with itself).

We begin with the first file on our input list (4), and then loop over the next files in our list as new inputs to the routine. Each pass we continue to add to the ‘union’ file that is accumulating from prior passes (5). It is kind of amazing to think that all of this machinery is necessary to get to the simple union operation (6) at the core of the routine.
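For comparison, the same accumulation can be sketched with Python’s built-in set type, which keeps the running union in memory rather than in an interim file. This is an illustration only, not the routine in the utils module: the helper names read_row_set and union_of_files, the temporary directory, and the sample file contents are all hypothetical stand-ins for the typology files.

```python
import csv
import os
import tempfile


def read_row_set(path):
    """Read a CSV file into a set of tuples, skipping blank lines."""
    with open(path, 'r', encoding='utf8', newline='') as f:
        return {tuple(row) for row in csv.reader(f) if row}


def union_of_files(paths):
    """Accumulate the set union of rows across all files in paths."""
    union_rows = set()
    for path in paths:
        union_rows |= read_row_set(path)  # in-place set union
    return union_rows


# Hypothetical sample data standing in for two typology files.
tmp_dir = tempfile.mkdtemp()
samples = {
    'Animals.csv': 'rc.Mammal,subClassOf,kko.Animals\nrc.Bird,subClassOf,kko.Animals\n',
    'Eukaryotes.csv': 'rc.Mammal,subClassOf,kko.Animals\nrc.Fungus,subClassOf,kko.Eukaryotes\n',
}
paths = []
for name, text in samples.items():
    path = os.path.join(tmp_dir, name)
    with open(path, 'w', encoding='utf8', newline='') as f:
        f.write(text)
    paths.append(path)

union_rows = union_of_files(paths)
print(len(union_rows), 'distinct rows across', len(paths), 'files')
```

One side effect worth knowing: if every input file carries the same header row, the set collapses those headers to a single entry, which would then need to be written first (or removed) when the result is saved.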

Here is now the intersection counterpart to that method:

def set_intersection(**build_deck):
    print('Beginning set intersection routine . . .')
    loop_list = build_deck.get('loop_list')
    loop = build_deck.get('loop')
    base = build_deck.get('base')
    short_base = build_deck.get('short_base')
    base_out = build_deck.get('base_out')
    ext = build_deck.get('ext')
    f_intersection = (short_base + 'intersection' + ext)  # Note 1
    filetemp = open(f_intersection, 'w+', encoding='utf8')
    filetemp.truncate(0)
    filetemp.close()
    first_pass = 0
    for loopval in loop_list:
        print(' . . . evaluating', loopval, 'using set intersection operations . . .')
        frag = loopval.replace('kko.','')
        f_input = (base + frag + ext)
        input_rows = set()  # Note 2
        intersection_rows = set()
        with open(f_input, 'r', encoding='utf8') as input_f:
            input_rows = input_f.readlines()[1:]  # Note 3
        with open(f_intersection, 'r', encoding='utf8', newline='') as intersection_f:
            if first_pass == 0:
                intersection_rows = input_rows
            else:
                intersection_rows = intersection_f.readlines()[1:]
            intersection = list(set(intersection_rows) & set(input_rows))  # Note 2
        with open(f_intersection, 'w', encoding='utf8', newline='') as intersection_f:
            intersection_f.write('id,subClassOf,parent\n')
            for row in intersection:
                intersection_f.write('%s' % row)  # Note 4
        first_pass = 1
    print('Set intersection operation now complete.')

We have the same basic tee-up (1) as the prior routine, except we have changed our variable names from ‘union’ to ‘intersection’. I also wanted to use a set notation for dealing with intersections, so we needed to change our iteration basis (2) to sets, and the intersection algorithm also changed form. However, dealing with sets in the csv module reader proved to be too difficult for my skill set, since the row object of the csv module takes the form of a dictionary. So, I reverted to the standard reader and writer in Python (3), which enables us to read the lines of a file into a single list object. By going that route, however, we needed to start our iterator on row 2 to skip the header (of (‘id‘, ‘subClassOf‘, ‘parent‘)). Remember that Python indexes from 0, so the second row has index 1, which is why the additional [1:] slice is added. Using the standard writer also means we need to iterate our write statement (4) over the set, with the older %s format allowing us to insert the row value as a string.

Again, assuming we have everything set up and configured properly, we can Run:

set_intersection(**build_deck)

Of course, the intersection of many datasets often results in empty (null) results. So, you are encouraged to use this utility with care and likely use the custom_dict specification in config.py for your specifications.
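On the difficulty with dictionary rows: a dictionary is not hashable, so it cannot be a set member, but a tuple of its values can. The sketch below shows one way the csv reader might be kept while still using set operations. It is an assumption-laden illustration rather than the cowpoke code: the helper name row_set, the FIELDS constant, and the sample data are hypothetical.

```python
import csv
import io
from functools import reduce

FIELDS = ['id', 'subClassOf', 'parent']


def row_set(text):
    """Parse CSV text with DictReader and return the rows as a set of tuples."""
    reader = csv.DictReader(io.StringIO(text), fieldnames=FIELDS)
    # Convert each (unhashable) dict row to a hashable tuple of its values.
    rows = {tuple(row[name] for name in FIELDS) for row in reader}
    rows.discard(tuple(FIELDS))  # drop the header row if the file has one
    return rows


# Hypothetical sample data standing in for two typology files.
header = 'id,subClassOf,parent\n'
file_a = header + 'rc.Mammal,subClassOf,kko.Animals\nrc.Bird,subClassOf,kko.Animals\n'
file_b = header + 'rc.Bird,subClassOf,kko.Animals\nrc.Fungus,subClassOf,kko.Eukaryotes\n'

# Fold the intersection across however many row sets are supplied.
common = reduce(set.intersection, [row_set(file_a), row_set(file_b)])
print(common)
```

Because the header is removed by value rather than by position, this form does not depend on the header being the first line of the file.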

Transducers

One of the innovations in Clojure, our primary KBpedia development language, is the transducer. The term is a portmanteau of ‘transform reducers’ and is a way to generalize and reduce the number of arguments in a tuple or iterated object. Transducers produce fast, processible data streams in a simple functional form, and can also be used to create a kind of domain-specific language (DSL) for functions. Either input streams or data vectors can be transformed in real time or offline to an internal representation. We believe transducers are a key source of the speed of our Clojure KBpedia build implementation.

Quick research suggests there are two leading options for transducers in Python. One was developed by Rich Hickey and Cognitect, Rich’s firm to support and promote Clojure, which he originated. Here are some references:

- The transducers-python GitHub project

- ReadTheDocs documentation

- Documentation

- Transducers are Coming, by Rich Hickey.

The second option embraces the transducer concept, but tries to develop it in a more ‘pythonic’ way:

- The python-transducers GitHub project

- The PyPi project description

- ReadTheDocs documentation

- The developer, Sixty North AS, has put forward a multiple part series of blog posts on Understanding Transducers through Python. I really encourage those interested in both the transducer concept and Python programming constructs to read this series.

I suspect I will return to this topic at a later point, possibly when some of the heavy lifting analytic tasks are underway. For now, I will skip doing anything immediately, even though there are likely noticeable performance benefits. I would rather continue the development of cowpoke without its influence, since transducers are in no way mainstream in the Python community. I will be better positioned to return to this topic after learning more.
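For readers who have not met the concept, the core idea can be shown in a few lines of plain Python. This is a toy illustration under my own naming (mapping, filtering, compose, and append are hypothetical helpers), and it is not the API of either of the libraries listed above. A transducer here is a function that takes a reducing function and returns a new reducing function, so that transformation steps compose without building intermediate lists.

```python
from functools import reduce


def mapping(transform):
    """Transducer: apply transform to each item before passing it on."""
    def transducer(reducer):
        def new_reducer(acc, item):
            return reducer(acc, transform(item))
        return new_reducer
    return transducer


def filtering(predicate):
    """Transducer: pass on only the items that satisfy predicate."""
    def transducer(reducer):
        def new_reducer(acc, item):
            return reducer(acc, item) if predicate(item) else acc
        return new_reducer
    return transducer


def compose(*transducers):
    """Compose transducers so they apply left to right to each item."""
    def composed(reducer):
        for t in reversed(transducers):
            reducer = t(reducer)
        return reducer
    return composed


def append(acc, item):
    """Final reducing step: collect items into a list."""
    acc.append(item)
    return acc


ids = ['rc.Mammal', 'owl.Thing', 'rc.Bird']
xform = compose(filtering(lambda s: s.startswith('rc.')),
                mapping(lambda s: s.replace('rc.', '')))
result = reduce(xform(append), ids, [])
print(result)
```

The same xform could be handed a different final step (a counter, a file writer) without change, which is the generality that makes the pattern attractive for stream processing.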

Others

We could get fancier still with performance tests and optimizations, especially if we move to something like pandas or the vaex modules. Indeed, as we move forward with our installments, we will have occasion to pull in and document additional modules. For now, though, we have taken some steps to marginally improve our performance sufficient to continue on our KBpedia processing quest.

The Transition to Properties

I blithely assumed that once I got some of the memory and structure tests above behind us, I could readily return to my prior class_struct_builder routine, make some minor setting changes, and apply it to properties. My assumption was not accurate.

I ran into another perplexing morass of ontology namespaces, prefixes, file names, and ontology IRI names, all of which needed to be in sync. What I was doing to create the initial stub form worked, and new things could be brought in while the full ontology was in memory. However, if the build routine needed to stop, as we have just needed to do between loading classes and loading properties, the build would fail when started up again. The interim ontology version we were saving to file was not writing all of the information available to it in memory. Hahaha! Isn’t that how it sometimes works? Just when you are assuming smooth sailing, you hit a brick wall.

Needless to say, I got the issue worked out, with a summary of some of my findings on the owlready2 mailing list. Jean-Baptiste Lamy, the developer, is very responsive and I assume some of the specific steps I needed to take in our use case may be generalized better in the software in later versions. Nonetheless, I needed to make those internal modifications and re-do the initial build steps in order to have the environment properly set to accept new property or class inputs. (In my years of experience with open-source software, one should expect occasional dead ends or key parameters needing to be exposed, which will require workarounds. A responsive lead developer with an active project is therefore an important criterion in selecting ‘keystone‘ open-source software.)

After much experimentation, we were finally able to find the right combination of things in the morass. There are a couple of other places on the owlready2 mailing list where these issues are discussed. For now, the logjam has been broken and we can proceed with the property structure build routine.

Property Structure Ingest

Another blithe assumption that did not prove true was that I could clone the class or typology build routines for properties. Much differs in the circumstances, which leads to a different (and simpler) code approach.

First, our extraction routines for properties only resulted in one structural file, not the many files that are characteristic of the classes and typologies. Second, all of our added properties tied directly into kko placeholders. The earlier steps of creating a new class, adding it to a parent, and then deleting the temporary class assignment could be simplified to a direct assignment to a property already in kko. This tremendously simplifies all property structure build steps.

We still need to be attentive to whether a given processing step uses strings or actual types, but nonetheless, our property build routines have considerable resemblance to what we have done before.

Still, the shift from classes to properties, different sources for an interim build, and other specific changes suggested it was time to initiate a new practice of listing essential configuration settings as a header to certain key code blocks. We are now getting to the point where there are enough moving parts that proper settings before running a code block are essential. Running some code with wrong settings risks overwriting existing data without warning or backup. Again, always back up your current versions before running major routines.

This is the property_struct_builder routine, with code notes explained after the code block:

### KEY CONFIG SETTINGS (see build_deck in config.py) ###  # Note 1
# 'kb_src' : 'standard'
# 'loop_list' : custom_dict.values(),
# 'base' : 'C:/1-PythonProjects/kbpedia/v300/build_ins/properties/'
# 'ext' : '.csv',
# 'frag' for starting 'in_file' is specified in routine

def property_struct_builder(**build_deck):
    print('Beginning KBpedia property structure build . . .')
    kko_list = typol_dict.values()
    loop_list = build_deck.get('loop_list')
    loop = build_deck.get('loop')
    class_loop = build_deck.get('property_loop')
    base = build_deck.get('base')
    ext = build_deck.get('ext')
    if loop != 'property_loop':  # compare string values, not object identity
        print("Needs to be a 'property_loop'; returning program.")
        return
    for loopval in loop_list:
        print(' . . . processing', loopval)
        frag = 'struct_properties'  # Note 2
        in_file = (base + frag + ext)
        with open(in_file, 'r', encoding='utf8') as input:
            is_first_row = True
            reader = csv.DictReader(input, delimiter=',', fieldnames=['id', 'subPropertyOf', 'parent'])
            for row in reader:
                if is_first_row:
                    is_first_row = False
                    continue
                r_id = row['id']
                r_parent = row['parent']
                value = r_parent.find('owl.')
                if value == 0:  # Note 3
                    continue
                value = r_id.find('rc.')
                if value == 0:
                    id_frag = r_id.replace('rc.', '')
                    parent_frag = r_parent.replace('kko.', '')
                    var2 = getattr(kko, parent_frag)  # Note 4
                    with rc:
                        r_id = types.new_class(id_frag, (var2,))
    print('KBpedia property structure build is complete.')
    input.close()

IMPORTANT NOTE: To reiterate, I will preface some of the code examples in these CWPK installments with the operating configuration settings (1) shown at the top of the code listing. This is because simply running some routines based on prior settings in config.py may cause existing files in other directories to be corrupted or overwritten. Putting such notices up will, I hope, be a better alerting technique than burying the settings within the general narrative. Plus, it makes it easier to get a routine up and running sooner.

Unlike for the class or typology builds, our earlier extractions of properties resulted in a single file, which makes our ingest process easier. We are able to set our file input variable of ‘frag’ to a single file variable (2). We also use a different string function, .find (3), to discover if the object assignment is an existing property; it returns a location index number if the substring is found, but a ‘-1’ if not. (A boolean option to achieve a similar end is the in operator.) And, like we have seen so many times to this point, we also need to invoke a method to evaluate a string value to its underlying type in the system (4).
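A short sketch may help show how these string tests differ, and how getattr turns a string into the object it names. Everything here is a stand-in for illustration: the identifiers are hypothetical, and kko_stub is a plain SimpleNamespace standing in for the real kko namespace so the snippet runs without owlready2.

```python
from types import SimpleNamespace

r_id = 'rc.hasColor'          # hypothetical property id
r_parent = 'kko.attributes'   # hypothetical parent in kko

# .find returns the index of the first match, or -1 when there is none,
# so "== 0" is really a test that the string begins with the prefix.
at_start = r_id.find('rc.')
missing = r_id.find('owl.')
embedded = 'kko.rc.thing'.find('rc.')

# "in" answers only whether the substring occurs anywhere in the string.
anywhere = 'rc.' in 'kko.rc.thing'

# .startswith states the prefix test directly.
is_prefix = r_id.startswith('rc.')
not_prefix = 'kko.rc.thing'.startswith('rc.')

# getattr resolves a name held in a string to the attribute it names.
kko_stub = SimpleNamespace(attributes='<stand-in for kko.attributes>')
parent_frag = r_parent.replace('kko.', '')
parent_obj = getattr(kko_stub, parent_frag)

print(at_start, missing, embedded, anywhere, is_prefix, not_prefix)
print(parent_obj)
```

The practical point is that a bare in test would also accept identifiers where ‘rc.’ appears mid-string, so it is only equivalent to the .find(...) == 0 check when the input is known to carry the prefix at the front.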

This new routine allows us to now add properties to our baseline ‘rc’ ontology:

property_struct_builder(**build_deck)

And, if we like what we see, we can save it:

kb.save(file=r'C:/1-PythonProjects/kbpedia/v300/targets/ontologies/kbpedia_reference_concepts.owl', format="rdfxml")

Well, our detour to deal with performance and other issues proved to be more difficult than when we first started that drive. As I look over the ground covered so far in this CWPK series, these last three installments have taken, on average, three times more time per installment than have all of the prior installments. Things have indeed felt stuck, but I also knew going in that closing the circle on the ‘roundtrip’ was going to be one of the more demanding portions. And, so, it has proven. Still: Hooray! Making it fully around the bend is pretty sweet.

We lastly need to clean up a few loose ends on the structural side before we move on to adding annotations. Let’s finish up these structural specs in our next installment.
