Dealing with the Four Ps to Broaden Actual Use

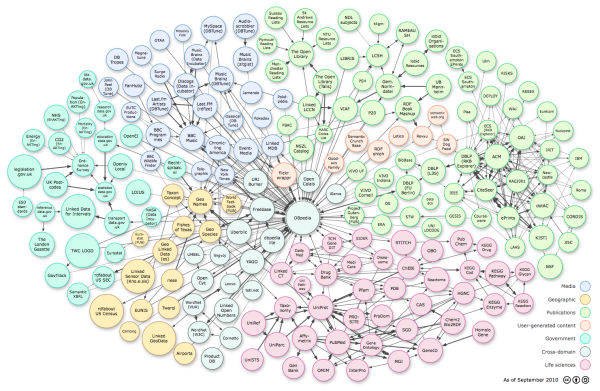

We have to again thank Richard Cyganiak and Anja Jentzsch — as well as all of the authors and publishers of linked open datasets — for the recent update to the linked data cloud diagram [1]. Not only have we seen admirable growth since the last update of the diagram one year ago, but the datasets themselves are now being registered and updated with standard metadata on the CKAN service. Our own UMBEL dataset of reference subject concepts is one of those listed.

Growth and the Linked Data Cloud

The linked open data (LOD) “cloud” diagram and its supporting statistics and archived versions are also being maintained on the http://lod-cloud.net site [1]. Together with the CKAN site and the linked data site maintained by Tom Heath, this resource provides excellent starting points for those interested in learning more about linked open data. (Structured Dynamics also provides its own FAQ sheet with specific reference to linked data in the enterprise, including both open and proprietary data.)

As an approach deserving its own name, the practice of linked data is about three years old. The datasets now registered as contributing to this cloud are shown by this diagram, last updated about a week ago [1]:

LOD was initially catalyzed by DBpedia and the formation of the Linked Open Data project by the W3C. In the LOD’s first listing in February 2007, four datasets were included with about 40 million total triples. The first LOD cloud diagram was published three years ago (upper left figure below), with 25 datasets consisting of over two billion RDF triples and two million RDF links. By the time of last week’s update, those figures had grown to 203 data sets (qualified from the 215 submitted) consisting of over 25 billion RDF triples and 395 million RDF links [2].

This growth in the LOD cloud over the past three years is shown by these archived diagrams from the LOD cloud site [1]:

[Figure: archived LOD cloud diagrams dated 2007-10-08, 2007-11-07, 2007-11-10, 2008-02-28, 2008-03-31, 2008-09-18, 2009-03-05, 2009-03-27, 2009-07-14 and 2010-09-22]

With growth has come more systematization and standard metadata. CKAN (the Comprehensive Knowledge Archive Network) is especially noteworthy for providing a central registry and descriptive metadata for the contributing datasets, under the lodcloud group name.

Still, Some Hard Questions

This growth and increased visibility are also being backed by a growing advocacy community, initially made up of academics but since broadened to include open government advocates and publishers such as the NY Times and the BBC. But, with the exception of some notable sites, which I think also help us understand key success factors, there is a gnawing sense that linked data is not yet living up to its promise and advocacy. Let’s look at this from two perspectives: growth and usage.

Growth

While I find the visible growth in the LOD cloud heartening, I do have some questions:

- Is the LOD cloud growing as quickly as its claimed potential would suggest? I suspect not. Though there has been about a tenfold growth in datasets and triples in three years, this is really from a small base. Upside potential remains absolutely huge.

- Is linked data growing faster or slower than other forms of structured data? Notable comparatives here would include structured data within Google results, XML, JSON, Facebook’s Open Graph Protocol, and others.

- What is the growth in the use of linked data? Growth in publishing is one thing, but use is the ultimate measure. I suspect that, aside from specific curated communities, uptake has been quite slow (see next sub-section).

Perhaps one of these days I will spend some time researching these questions myself. If others have benchmarks or statistics, I’d love to see them.

Such data would be helpful to put linked data and its uptake in context. My general sense is that while linked data is gaining visible traction, it is still not anywhere close to living up to its promise.

Usage

I am much more troubled by the lack of actual use of linked data. To my knowledge, despite the publication of endpoints and the availability of central access points like OpenLink Software’s lod.openlinksw.com, there is no notable service with any traction that is using broad connections across the LOD cloud.

Rather, for anything beyond a single dataset (such as DBpedia), the services that do have usefulness and traction are those that are limited and curated, often with a community focus. Examples of these notable services include:

- The life sciences and biomedical community, which has a history of curation and consensual semantics and vocabularies

- FactForge from Ontotext, which is manually cleaned and uses hand-picked datasets and relationships, all under central control

- Freebase, which is a go-to source for much instance data, but is notorious for its lack of organization or structure

- Limited, focused services such as Paul Houle’s Ookaboo (and, of course, many others), where there is much curation but still many issues with data quality (see below).

These observations lead to some questions:

- Other than a few publishers promoting their own data, are there any enterprises or businesses consuming linked data from multiple datasets?

- Why are there comparatively few links between datasets in the current LOD cloud?

- What factors are hindering the growth and use of linked data?

We’re certainly not the first to note these questions about linked data. Some point to a need for more tools. Recently others have looked to more widespread use of RDFa (RDF embedded in Web pages) as possible enablers. While these may be helpful, I personally do not see either of these factors as the root cause of the problems.

The Four Ps

Readers of this blog well know that I have been beating the tom-toms for some time regarding what I see as key gaps in linked data practice [3]. The update of the LOD cloud diagram and my upcoming keynote at the Dublin Core (DCMI) DC-2010 conference in Pittsburgh have caused me to try to better organize my thoughts.

I see four challenges facing the linked data practice. These four problems — the four Ps — are predicates, proximity, provision and provenance. Let me explain each of these in turn.

Problem #1: Predicates

For some time, the quality and use of linking predicates with linked data has been simplistic and naïve. This problem is a classic expression of Maslow’s hammer: “if all you have is a hammer, everything looks like a nail.” The most abused linking property (predicate) in this regard is owl:sameAs.

In order to make links or connections with other data, it is essential to understand the nature of the subject “thing” at hand. There is much confusion about actual “things”, the references to “things”, and the nature of a “thing” within linked data [4]. Quite frequently, the use, reference or characterization of “things” across different datasets should not be asserted as exact, but only as approximate to some degree.

So, we might be referring to something that is about, similar to, or approximately the same as another thing, or to some other qualified linkage. Yet the semantics of the owl:sameAs predicate are quite exact and carry some of the strongest entailments (that is, what the assertion logically implies) of any predicate defined. For sameAs to be applied correctly, every assertion made about the object in one dataset must be believed to be true of the linked object in the other dataset, and vice versa; in other words, the two instances are being asserted as identical resources.
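To make the strength of that entailment concrete, here is a minimal Python sketch, assuming triples are held as plain (subject, predicate, object) tuples; the ex: URIs and geo:lat values are hypothetical. Honoring owl:sameAs as symmetric and transitive means every other assertion is copied to every alias, so a misplaced coordinate in one dataset becomes a claim about the other dataset's object as well.

```python
from itertools import product

SAME_AS = "owl:sameAs"


def equivalence_classes(triples):
    """Group resources connected by owl:sameAs, treated as symmetric and transitive (union-find)."""
    parent = {}

    def find(x):
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for s, p, o in triples:
        if p == SAME_AS:
            parent[find(s)] = find(o)

    groups = {}
    for x in list(parent):
        groups.setdefault(find(x), set()).add(x)
    return {x: groups[find(x)] for x in list(parent)}


def sameas_entailments(triples):
    """Return every non-sameAs triple entailed once owl:sameAs links are honored."""
    members = equivalence_classes(triples)
    entailed = set()
    for s, p, o in triples:
        if p == SAME_AS:
            continue
        # Substitute every alias in both the subject and the object position.
        for s2, o2 in product(members.get(s, {s}), members.get(o, {o})):
            entailed.add((s2, p, o2))
    return entailed


data = {
    ("ex:placeA", "geo:lat", "48.85"),
    ("ex:placeB", "geo:lat", "47.00"),  # an erroneous value in the second dataset
    ("ex:placeA", SAME_AS, "ex:placeB"),
}
lats = sorted(o for s, p, o in sameas_entailments(data) if s == "ex:placeA" and p == "geo:lat")
print(lats)  # ['47.00', '48.85']: placeA now carries both latitudes
```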

One of the most vocal advocates of linked data is Kingsley Idehen, and he perpetuates the misuse of this predicate in a recent mailing list thread. The question had been raised about a geographical location in one dataset that mistakenly put the target object into the middle of a lake. To address this problem, Kingsley recommended:

You have two data spaces: [AAA] and [BBB], you should make a third — yours, which I think you have via [CCC].

The point here is not to pick on Kingsley, nor even to solely single out owl:sameAs as a source of this problem of linking predicates. After all, it is reasonable to want to relate two objects to one another that are mostly (and putatively) about the same thing. So we grab the best known predicate at hand.

The real and broader issue with linked data at present is twofold: first, actual linking predicates are often not used; and second, when they are used, their semantics are too often wrong or misleading.

We do not, for example, have sufficient and authoritative linking predicates to deal with these “sort of” conditions. It is a key semantic gap in the linked data vocabulary at present. Just as SKOS was developed as a generalized vocabulary for modeling taxonomies and simple knowledge structures, a similar vocabulary is needed for predicates that reflect real-world usage for linking data objects and datasets with one another [5].
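As a minimal sketch of what such a vocabulary would let a consumer do, assume a hypothetical ex: namespace offering graded predicates alongside owl:sameAs (ex:isLike and ex:isAbout are illustrative names, not terms from any published vocabulary). A consumer can then import another dataset's facts only across identity links, and merely record the weaker relations.

```python
# Only owl:sameAs is a real term here; ex:isLike and ex:isAbout are hypothetical.
LINK_STRENGTH = {
    "owl:sameAs": "identical",  # every assertion transfers
    "ex:isLike": "similar",     # mostly the same thing, but not identical
    "ex:isAbout": "topical",    # shares subject matter only
}


def facts_for(resource, triples):
    """Collect facts about `resource`, importing linked facts only across identity links."""
    own = [(p, o) for s, p, o in triples if s == resource and p not in LINK_STRENGTH]
    imported, related = [], []
    for s, p, target in triples:
        if s != resource or p not in LINK_STRENGTH:
            continue
        if LINK_STRENGTH[p] == "identical":
            imported += [(p2, o2) for s2, p2, o2 in triples
                         if s2 == target and p2 not in LINK_STRENGTH]
        else:
            related.append((LINK_STRENGTH[p], target))  # keep the pointer, not the facts
    return own + imported, related


TRIPLES = [
    ("ex:myParis", "geo:lat", "48.8566"),
    ("ex:myParis", "ex:isLike", "ex:theirParis"),
    ("ex:theirParis", "geo:lat", "47.0"),
]
facts, related = facts_for("ex:myParis", TRIPLES)
print(facts)    # [('geo:lat', '48.8566')]
print(related)  # [('similar', 'ex:theirParis')]
```

The design choice is that link strength, not the mere presence of a link, decides whether facts flow between datasets.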

The idea, of course, with linked data resides in the term linked. And linkage means how we represent the relation between objects in different datasets. Done right, this is the beauty and power of linked data and offers us the prospect of federating information across disparate sources on the Web.

For this vision, then, to actually work, links need to be asserted and they need to be asserted correctly. If they are not, then all we are doing is shoveling triples over the fence.

Problem #2: Proximity (or, “is About”)

Going back to our first efforts with UMBEL, a vocabulary of about 20,000 subject concepts based on the Cyc knowledge base [6], we have argued for the importance of using well-defined reference concepts as a way to provide “aboutness” and reference hooks for related information on the Web. These reference points become like stars in constellations, helping to guide our navigation across the sea of human knowledge.

While we have put forward UMBEL as one means to provide these fixed references, the real point has been to have accepted references of any manner. These may use UMBEL, alternatives to UMBEL, or multiples thereof. Without some fixity, preferably of a coherent nature, it is difficult to know if we are sailing east or west. And, frankly, there can and should be multiple such reference structures, including specific ones for specific domains. Mappings can allow multiple such structures to be used in an overlapping manner depending on preference.

When one now looks at the LOD cloud and its constituent datasets, it should be clear that there are many more potential cross-dataset linkages resident in the data than the diagram shows. Reference concepts with appropriate linking predicates are the means by which the relationships and richness of these potential connections can be drawn out of the constituent data.
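A small Python sketch of this idea, assuming each record has already been tagged as being “about” one or more reference concepts (the dataset names, record IDs and ref: concepts are hypothetical): records in different datasets that share a reference concept surface as candidate connections. These are topical links, not identity claims.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical "about" annotations: (dataset, record) -> reference concepts.
ABOUT = {
    ("datasetA", "a:rec1"): {"ref:Jazz"},
    ("datasetA", "a:rec2"): {"ref:Bridge"},
    ("datasetB", "b:item7"): {"ref:Jazz", "ref:NewOrleans"},
    ("datasetC", "c:doc3"): {"ref:Bridge"},
}


def candidate_links(about):
    """Pair records from different datasets that are about the same reference concept."""
    by_concept = defaultdict(list)
    for (dataset, record), concepts in about.items():
        for concept in concepts:
            by_concept[concept].append((dataset, record))
    links = set()
    for concept, records in by_concept.items():
        for (ds1, r1), (ds2, r2) in combinations(sorted(records), 2):
            if ds1 != ds2:
                links.add((r1, concept, r2))  # "both are about <concept>", not identity
    return links


print(sorted(candidate_links(ABOUT)))
```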

The use of reference vocabularies is rejected by many in the linked data community on what we believe to be misplaced ideological or philosophical grounds. Saying that something is “about” Topic A (or even Topics B and C in different reference vocabularies) does not limit freedom nor make some sort of “ontological commitment”. There is also no reason why free-form tagging systems (folksonomies) cannot be mapped over time to one or many reference structures to help promote interoperability. Like any language, our data languages can benefit from one or more dictionaries of nouns upon which we can agree.
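A minimal Python sketch of mapping free-form tags onto more than one reference structure at once; the refA: and refB: vocabularies and their mapping tables are hypothetical. Tags that have no mapping simply remain unmapped, so the original tagging freedom is not lost.

```python
# Hypothetical mappings from normalized free-form tags to two reference vocabularies.
TAG_MAP = {
    "refA": {"nyc": "refA:NewYorkCity", "big apple": "refA:NewYorkCity"},
    "refB": {"nyc": "refB:NYC"},
}


def normalize(tag):
    """Lower-case and collapse whitespace so trivially different tags match."""
    return " ".join(tag.lower().split())


def map_tags(tags, vocabularies=("refA", "refB")):
    """Map each tag to zero or more reference concepts; unmapped tags get an empty mapping."""
    return {
        tag: {v: TAG_MAP[v][normalize(tag)] for v in vocabularies if normalize(tag) in TAG_MAP[v]}
        for tag in tags
    }


mapped = map_tags(["NYC", "Big  Apple", "pizza"])
print(mapped)
```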

Linked data practitioners need to decide whether their end goal is actual data interoperability and use, or simply publishing triples to run up the score.

Problem #3: Provision of Useful Information

We somewhat controversially questioned the basis of how some linked data was being published in an article late last year, When Linked Data Rules Fail [4]. Amongst other issues raised in the article, one involved publishing large numbers of government datasets without any schema, definitions or even data labels for numerically IDed attributes. We stated in part:

Some of these problems have now been fixed in the subject datasets, but in this circumstance and others we still see way too many instances within the linked data community of no definitions of terms, no human readable labels and the lack of other information by which a user of the data may gauge its meaning, interpretation or semantics. Shame on these publishers.
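Publishers can check for this gap before releasing data. A minimal Python sketch, assuming triples as tuples and using rdfs:label and rdfs:comment as the label and definition properties (the gov: terms are hypothetical): it lists every predicate in use that lacks a human-readable label or a definition.

```python
RDFS_LABEL = "rdfs:label"
RDFS_COMMENT = "rdfs:comment"


def undocumented_terms(triples):
    """Report predicates used in the data that lack a human-readable label or a definition."""
    used = {p for _, p, _ in triples} - {RDFS_LABEL, RDFS_COMMENT}
    labeled = {s for s, p, _ in triples if p == RDFS_LABEL}
    defined = {s for s, p, _ in triples if p == RDFS_COMMENT}
    report = {}
    for term in sorted(used):
        checks = (("label", term in labeled), ("definition", term in defined))
        missing = [name for name, present in checks if not present]
        if missing:
            report[term] = missing
    return report


# Hypothetical government-style data with one numerically IDed attribute.
TRIPLES = [
    ("gov:row1", "gov:attr_0042", "17.3"),
    ("gov:row1", "gov:population", "5120"),
    ("gov:population", RDFS_LABEL, "Population"),
    ("gov:population", RDFS_COMMENT, "Resident count at the last census."),
]
print(undocumented_terms(TRIPLES))  # {'gov:attr_0042': ['label', 'definition']}
```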

Really, in the end, the provision of useful information comes down to the need to answer a simple question: Link what?

The what is an essential component to staging linked data for actual use and interoperability. Without it, there is no link in linked data.

Problem #4: Provenance

There are two common threads in the earlier problems. First, semantics matter, because that is, after all, the arena in which linked data operates. Second, some entities need to exert the quality control, completeness and consistency that actually enable this information to be dependable.

Both of these threads intersect in the idea of provenance.

This assertion should not be surprising — the standard Web needed some consistent attention with respect to directories and search engines. That linked data or the Web of data is no different, perhaps even more demanding, should be expected.

When we look to those efforts that are presently getting traction in the linked data arena (with some examples above), we note that all of them have quality control and provenance at their core. I think we can also say that only individual datasets that themselves adhere to quality and consistency will even be considered for inclusion in these curated efforts.
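One common way to keep provenance usable is to hold each source's triples in its own named graph and record who published and reviewed that graph. A minimal Python sketch of a consumer that trusts only reviewed graphs; the quads, graph names and the `reviewed` flag are hypothetical, not a published provenance vocabulary.

```python
# Hypothetical quads: (subject, predicate, object, named graph).
QUADS = [
    ("ex:Paris", "geo:lat", "48.8566", "graph:curated"),
    ("ex:Paris", "geo:lat", "47.0", "graph:bulk-import"),
]

# Hypothetical provenance records kept per named graph.
PROVENANCE = {
    "graph:curated": {"publisher": "ex:CuratorOrg", "reviewed": True},
    "graph:bulk-import": {"publisher": "ex:Crawler", "reviewed": False},
}


def trusted_values(subject, predicate, quads=QUADS, provenance=PROVENANCE):
    """Return object values whose source graph is recorded as quality-reviewed."""
    return sorted(
        o
        for s, p, o, g in quads
        if s == subject and p == predicate and provenance.get(g, {}).get("reviewed", False)
    )


print(trusted_values("ex:Paris", "geo:lat"))  # ['48.8566']
```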

Where Will the Semantics Leadership Emerge?

The current circumstance of the semantic Web is that adequate languages and standards are now in place. We also see with linked data that techniques are now being worked out and understood for exposing usable data.

But what appears to be lacking are the semantics and reference metadata under which real use and interoperability take place. The W3C and its various projects have done an admirable job of putting the languages and standards in place and raising the awareness of the potential of linked data. We can now fortunately ask the question: What organizations have the authority to establish the actual vocabularies and semantics by which these standards can be used effectively?

When we look at the emerging and growing LOD cloud we see potential written with a capital P. If the problem areas discussed in this article — the contrasting four Ps — are not addressed, there is a real risk that the hard-earned momentum of linked data to date will dissipate. We need to see real consumption and real use of linked data for real problems in order for the momentum to be sustained.

Of the four Ps, I believe three of them require some authoritative leadership. The community of linked data needs to:

- Find responsive predicates

- Publish reference concepts as proximate aids to orient and align data, and

- Do so with the provenance of an authoritative voice.

When we boil down all of the commentary above, a single question remains: Where will the semantic leadership emerge?

Mike,

First off, I don’t think you are picking on me. You picked a good example, but you misinterpreted the following:

1. My intent – which is 100% of the time about stimulating discussion (so I was deliberately succinct)

2. My views about “owl:sameAs” – I’ve never been (nor will I be) an advocate for “owl:sameAs” misuse; I raise concerns about misuse each time I encounter it.

Clarifications about my LOD mailing list post follow.

Paul has (or had) a problem with regards to obtaining accurate data from DBpedia or Freebase for his Ookaboo data space. I believe this was specifically about geo points etc.

My suggestions to Paul:

1. make a new data space (could be Ookaboo or another);

2. in the new data space create accurate data (as he sees it);

3. since you know the Names of the problem Entities in Both DBpedia and Freebase, use “owl:sameAs” in the more accurate data space for coreference which enables the use of “inference context” to conditionally deliver “union expansion” in situations where accuracy requirements are lower;

4. share your newly curated data with the burgeoning Web of Linked Data by publishing from a data space such that each of these newer and more accurate Entities are endowed with Resolvable Names (typically HTTP re. RDF based Linked Data) that basically carry your insignia and also form the underlying basis for Attribution and (if you so choose) monetization re. Linked Data value chain contribution.
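Steps 2 to 4 can be sketched in a few lines of Python, assuming a hypothetical base namespace (http://example.org/data/) and plain N-Triples output; a Freebase link would be one more entry in `sources`. This sketch uses owl:sameAs exactly as suggested in step 3, which is the very choice debated in this exchange.

```python
OWL_SAME_AS = "http://www.w3.org/2002/07/owl#sameAs"
GEO_LAT = "http://www.w3.org/2003/01/geo/wgs84_pos#lat"
GEO_LONG = "http://www.w3.org/2003/01/geo/wgs84_pos#long"


def curated_entity(base, local_name, lat, lon, sources):
    """Emit N-Triples for a corrected entity minted under the publisher's own namespace."""
    uri = f"{base}{local_name}"  # a resolvable name that carries the publisher's imprint
    lines = [
        f'<{uri}> <{GEO_LAT}> "{lat}" .',
        f'<{uri}> <{GEO_LONG}> "{lon}" .',
    ]
    # Step 3: coreference links back to the source entities.
    lines += [f"<{uri}> <{OWL_SAME_AS}> <{src}> ." for src in sources]
    return "\n".join(lines)


ntriples = curated_entity(
    "http://example.org/data/", "Paris", "48.8566", "2.3522",
    ["http://dbpedia.org/resource/Paris"],
)
print(ntriples)
```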

Important point re. “owl:sameAs”, coreference, and inference contexts:

Virtuoso has always been about conditional entailment of “owl:sameAs” assertions within the context of SPARQL queries. I have never given an “owl:sameAs” demo or made a comment about said subject without “conditional entailments” at the back of my mind 🙂

I hope I’ve cleared up this matter. Naturally, the lod.openlinksw.com instance of the LOD cloud cache is a nice place to experiment and explore “conditional owl:sameAs entailments” against a massive Linked Data Space. It also amplifies what the real issue is, i.e., conditional entailments, using backward chained reasoning, at massive scale (e.g., 17 Billion Triples).

Your concerns are well founded, but my recent LOD mailing list comments are not your exemplar; especially as I am advocating the same thing as you which boils down to this: use “owl:sameAs” with caution.

Also, and more importantly, we still share common agreement about the following: Linked Data isn’t about the ABox solely; there is a much more powerful TBox dimension that most ignore, due to the capability shortfalls of many platforms in the Linked Data Deployment realm.

Kingsley

Hi Kingsley,

Thanks for the clarification, and I am glad you took my comments in your normal spirit of constructive dialog and discussion.

However, I am still perplexed about what appears to me to be a new construct: “conditional entailment”. I’m not familiar with that in any of the specs. And, if Virtuoso is conditioning its entailments, where is that documented? What are the conditions? Does Virtuoso apply different conditions to different predicates? different named graphs?

I know that various vendors (including Openlink) have created their own extensions to SPARQL, that also tend to somewhat track one another, and which are (hopefully) informing the next spec. Is a similar thing going on with inferencing and entailments?

Any pointers on this would be helpful.

Thanks, again, Kingsley.

Mike

My current thinking is that you should be consistent in the use of sameAs within a single dataset.

Whether a third party chooses to honor your sameAs declarations depends on whether their current application of the data is similar to yours.

For example, if you create a database of which movies have comic book characters in them, then all Batman URIs are the same. If you are building a database of what tools and weapons comic book characters use, then the Batmen of different movies and writers are distinct.

There is not one truth for all.
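A minimal sketch of that idea, assuming a consumer keeps the sameAs mappings separate from its data and decides per application whether to honor them (all URIs and gear values are hypothetical):

```python
# Hypothetical URIs: each film dataset mints its own URI for its Batman.
SAME_AS = {"film:BatmanBegins/batman": "film:Batman1989/batman"}  # alias -> canonical URI

APPEARANCES = [
    ("film:Batman1989", "film:Batman1989/batman"),
    ("film:BatmanBegins", "film:BatmanBegins/batman"),
]
GEAR = {  # hypothetical gear data, kept per depiction
    "film:Batman1989/batman": {"gear:batarang"},
    "film:BatmanBegins/batman": {"gear:grapnel"},
}


def resolve(uri, honor_same_as):
    """Map a URI to its canonical form only when the application honors sameAs."""
    return SAME_AS.get(uri, uri) if honor_same_as else uri


# Application 1: which comic book characters appear in movies? One Batman.
characters = {resolve(c, honor_same_as=True) for _, c in APPEARANCES}

# Application 2: what gear does each depiction use? The Batmen stay distinct.
gear = {resolve(c, honor_same_as=False): GEAR[c] for _, c in APPEARANCES}

print(len(characters), len(gear))  # 1 2
```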

Mike,

We use pragmas in our SPARQL extensions to make entailments conditional. Here are the steps:

1. Put rules in a graph (typically this is inherent in an ontology so an ontology can suffice e.g. umbel)

2. Associate this ontology graph with a named rule

3. When issuing a SPARQL query, simply add a pragma that tells Virtuoso to use a named rule.

When the above is done, you end up with a SPARQL query applied to a working set that’s the product of backward chained reasoning. Thus, the entailments from the backward chained reasoning context become the basis of the eventual SPARQL solution (query result set). Take away the inference rules pragma and there is no reasoning whatsoever. This has been the case since 2007.
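The three steps can be sketched as query-text construction in Python. The `DEFINE input:inference` and `DEFINE input:same-as` pragmas and the `rdfs_rule_set` SQL function follow Virtuoso's documentation as we understand it and should be checked against the deployed version; the rule set name and graph IRI are hypothetical, and the sketch only builds the query string rather than sending it to a server.

```python
def with_virtuoso_pragmas(query, rule_set=None, same_as=False):
    """Prefix a SPARQL query with Virtuoso-style DEFINE pragmas (step 3).

    Without any pragma the query text is returned unchanged, so no reasoning is requested.
    """
    pragmas = []
    if rule_set:
        # Names a rule set previously built from an ontology graph (steps 1 and 2), e.g. in
        # Virtuoso SQL: rdfs_rule_set('urn:example:rules', 'http://example.org/ontology-graph');
        pragmas.append(f'DEFINE input:inference "{rule_set}"')
    if same_as:
        # Requests owl:sameAs expansion for this query only.
        pragmas.append('DEFINE input:same-as "yes"')
    return "\n".join(pragmas + [query])


q = with_virtuoso_pragmas(
    "DESCRIBE <http://kingsley.idehen.net/dataspace/person/kidehen#this>",
    rule_set="urn:example:rules",
    same_as=True,
)
print(q)
```

Keeping the pragmas per query is what makes the entailment conditional: the same stored triples answer differently depending on what the query asks for.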

I have never commented about “owl:sameAs” without SPARQL pragmas factoring into my world view.

Some examples:

1. http://lists.w3.org/Archives/Public/public-lod/2009May/0067.html — mailing list post about “owl:sameAs” and how we make the entailments conditional via Virtuoso SPARQL pragmas

2. http://www.mail-archive.com/public-lod@w3.org/msg00870.html — example using UMBEL

3. http://www.mail-archive.com/dbpedia-discussion@lists.sourceforge.net/msg01210.html — not “owl:sameAs” specific but showing conditional entailments use re. fixing DBpedia ABox data .

Kingsley


Hi Kingsley,

I’m really going to be dense here, because I’m still not sure we are communicating.

First, of your three examples, only the first one is explicitly about owl:sameAs, and that one merely does a string lookup to assert a sameAs relationship. (Probably itself a questionable practice.) I don’t believe my question about the “conditional entailments” that you raised in relation to sameAs is addressed by any of these three examples.

Second, in my original post, what I was criticizing was the use of asserting owl:sameAs to two external datasets from the cleaned one (what I called [CCC] and was Paul’s Ookaboo in your original response). The problem I see in this case is the entailment that the third dataset [CCC] is making to *both* [AAA] (DBpedia) and [BBB] (Freebase) via the owl:sameAs assertion. Via OWL semantics, I believe this to be wrong and a misuse of the predicate as defined in the specifications.

I think actually that the idea of a “conditional entailment”, properly defined and published so the semantics are clear, is one approach, though again I still have open questions about what you mean by this and where it is defined for this circumstance. Also, maybe some SWRL stuff could accomplish a similar intent. But, what I was really advocating in my article was to define new predicates that actually capture the intended relationship.

Unless I am really dense here (always a distinct possibility 😉 ), I still believe that your advocacy for owl:sameAs in Paul’s circumstance is wrong.

Best, Mike

Mike,


I don’t know how you arrived at the string lookup conclusion. Why would we be doing that? Pragmas instruct the underlying Virtuoso compiler.

When the inference context pragma is enabled, we create a working set that includes union expansion of the owl:sameAs triples, i.e., we de-reference the local objects of owl:sameAs triples and then (if a crawl pragma is used) actually de-reference over HTTP (e.g., the Web of Linked Data, if the URIs lead us in that direction). After all of that, we also use transitivity to set the direction of the union that’s used to make the working set.

What do I mean by all of this?

Virtuoso will not effect an owl:sameAs claim just because it’s asserted in a triple. You have to explicitly tell it to do so via a Pragma.

Simple example:

<http://kingsley.idehen.net/dataspace/person/kidehen#this> owl:sameAs <acct:kidehen@id.myopenlink.net> .

If pragmas for inference context and crawling are enabled, my working set will include a union of the graphs associated with the “S” and the “O” re. the above triple.

If I disable the crawler pragma, and “S” is local to my data space while “O” is remote, I still get the benefit of coreference such that the solutions for the following queries are identical:

DESCRIBE <acct:kidehen@id.myopenlink.net> .

DESCRIBE <http://kingsley.idehen.net/dataspace/person/kidehen#this> .

If I turn off all pragmas, and “S” is local, the solutions for the statements above will not be identical, since coreference is not discerned when inference context is off.

The good, bad, and ugly re. “owl:sameAs” has been and will always be conditional re. Virtuoso. I can’t speak for other RDF stores.

Back to my suggestion to Paul:

You are basically saying that the following is wrong:

Go make your own data collection that deals with inaccuracies, if you so choose, publish it to the Web so that others can get high quality data from your data space while using your URIs as a mechanism for conveying your imprint which keeps you hooked into the data consumption value chain. Also, as you do in real life, reference your sources (so link your URIs to the relevant DBpedia and Freebase URIs).

Basically you are saying that he can’t have: FixedParisURI owl:sameAs NotSoBadParisURI, BadParisURI. Of course he can, and that’s his call to make.

My specific response to Paul was a journey towards discovering a platform that performs “owl:sameAs” reasoning conditionally (which he isn’t using, otherwise he would have resolved his dilemma). And therein lies the point of contention (as I see it), since this behavior isn’t the norm. That said, I haven’t seen full union expansion of owl:sameAs as the norm either, since following your nose via a browser through “S” or “O” URIs isn’t what I am talking about, far from it. This is about producing a solution for a SPARQL query with or without union expansion (that includes full or partial transitive closure).

Key principle in play here is this re. Virtuoso and its SPARQL pragmas for inference rules: give people rope, even enough for them to hang themselves with, but don’t forget to make this reality optional 🙂

Kingsley

Mike,

The two incomplete DESCRIBEs were supposed to show you two SPARQL queries that may or may not do a variety of things based on Virtuoso SPARQL pragmas. Here are the examples.

DESCRIBE <acct:kidehen@id.myopenlink.net> .

DESCRIBE <http://kingsley.idehen.net/dataspace/person/kidehen#this> .

Hopefully, post readers can insert into my comments above.

Kingsley

Mike,

A cleaned-up response without some typos and escaping problems, etc.

“First, of your three examples, only the first one is explicitly about owl:sameAs, and that one merely does a string lookup to assert a sameAs relationship. (Probably itself a questionable practice.) I don’t believe my question about ‘conditional entailments’ that you raised in relation to sameAs is addressed by any of these three examples.”

I don’t know how you arrived at the string lookup conclusion. Why would we be doing that? Pragmas instruct the underlying Virtuoso compiler.

When the inference context pragma is enabled, we create a working set that includes union expansion of the owl:sameAs triples, i.e., we de-reference the local objects of owl:sameAs triples and then (if a crawl pragma is used) actually de-reference over HTTP (e.g., the Web of Linked Data, if the URIs lead us in that direction). After all of that, we also use transitivity to set the direction of the union that’s used to make the working set.

What do I mean by all of this?

Virtuoso will not effect an owl:sameAs claim just because it’s asserted in a triple. You have to explicitly tell it to do so via a Pragma.

Example using a Triple pattern:

<http://kingsley.idehen.net/dataspace/person/kidehen#this> owl:sameAs <acct:kidehen@id.myopenlink.net> .

If pragmas for inference context and crawling are enabled, my working set will include a union of the graphs associated with the “S” and the “O” re. the above triple.

If I disable the crawler pragma, and “S” is local to my data space while “O” is remote, I still get the benefit of coreference such that the solutions for the following queries are identical:

DESCRIBE <http://kingsley.idehen.net/dataspace/person/kidehen#this> .

DESCRIBE <acct:kidehen@id.myopenlink.net> .

If I turn off all pragmas, and “S” is local, the statements above will not produce identical solutions (result sets). Why? Because coreference is not discerned due to inference context being disabled, i.e., the pragma is “off” and Virtuoso will do nothing.
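A toy, in-memory simulation of the behavior described here (not Virtuoso's implementation), assuming a `same_as` flag stands in for the inference-context pragma and that a DESCRIBE returns the (predicate, object) pairs whose subject is the resource or, when the flag is on, any of its owl:sameAs aliases. The two descriptive predicates are hypothetical.

```python
KIDEHEN_HTTP = "http://kingsley.idehen.net/dataspace/person/kidehen#this"
KIDEHEN_ACCT = "acct:kidehen@id.myopenlink.net"

# The coreference triple from the example, plus two hypothetical descriptive triples.
STORE = {
    (KIDEHEN_HTTP, "owl:sameAs", KIDEHEN_ACCT),
    (KIDEHEN_HTTP, "ex:propertyA", "value A"),
    (KIDEHEN_ACCT, "ex:propertyB", "value B"),
}


def aliases(uri, store):
    """Follow owl:sameAs in both directions, transitively, starting from `uri`."""
    seen, todo = {uri}, [uri]
    while todo:
        current = todo.pop()
        for s, p, o in store:
            if p == "owl:sameAs" and current in (s, o):
                for other in (s, o):
                    if other not in seen:
                        seen.add(other)
                        todo.append(other)
    return seen


def describe(uri, store, same_as=False):
    """Toy DESCRIBE: expand over aliases only when the same_as flag is on."""
    names = aliases(uri, store) if same_as else {uri}
    return {(p, o) for s, p, o in store if s in names}


print(describe(KIDEHEN_HTTP, STORE, same_as=True) == describe(KIDEHEN_ACCT, STORE, same_as=True))  # True
print(describe(KIDEHEN_HTTP, STORE) == describe(KIDEHEN_ACCT, STORE))  # False
```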

The good, bad, and ugly re. “owl:sameAs” has been and will always be conditional re. Virtuoso. I can’t speak for other RDF stores.

Back to my suggestion to Paul:

You are basically saying that the following instruction is wrong:

Go make your own data collection that deals with inaccuracies, if you so choose, publish it to the Web so that others can get high quality data from your data space while using your URIs as a mechanism for conveying your imprint which keeps you hooked into the data consumption value chain. Also, as you do in real life, reference your sources (so link your URIs to the relevant DBpedia and Freebase URIs).

Basically you are saying that he can’t have: FixedParisURI owl:sameAs NotSoBadParisURI, BadParisURI. Of course he can, and that’s his call to make.
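The disagreement turns partly on transitivity. Under OWL semantics owl:sameAs is symmetric and transitive, so a minimal Python sketch using the placeholder names above shows that asserting the fixed URI as the same as both external URIs also entails that the two external URIs are the same as each other, a claim neither external dataset made.

```python
def same_as_closure(pairs):
    """All ordered (a, b) pairs entailed by owl:sameAs being symmetric and transitive."""
    groups = []  # disjoint sets of mutually identical resources
    for a, b in pairs:
        merged = {a, b}
        rest = []
        for group in groups:
            if group & merged:
                merged |= group
            else:
                rest.append(group)
        groups = rest + [merged]
    return {(x, y) for group in groups for x in group for y in group if x != y}


asserted = [("FixedParisURI", "NotSoBadParisURI"), ("FixedParisURI", "BadParisURI")]
entailed = same_as_closure(asserted)
print(("NotSoBadParisURI", "BadParisURI") in entailed)  # True
```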

My specific response to Paul was *me* setting him on a journey towards discovering a platform that performs “owl:sameAs” reasoning conditionally (which he isn’t using, otherwise he would have resolved his dilemma). Basically, therein lies the point of contention (as I see it), since this behavior isn’t the norm. That said, I haven’t seen full union expansion of owl:sameAs as the norm either, since following your nose via a browser through “S” or “O” URIs isn’t what I am talking about, far from it. This is about producing a solution for a SPARQL query with or without union expansion (that includes full or partial transitive closure).

Key principle in play here re. Virtuoso and its SPARQL pragmas for inference context: give people rope, even enough for them to hang themselves with, but don’t forget to make this *virtuoso delivered* reality optional.

Kingsley

Hi Kingsley,

Great; thanks so much for being patient and thorough in your responses. This was most helpful.

Mike

Mike,

Here are some links to excerpts from one of our SPARQL tutorials that deals with Virtuoso’s inference functionality (which is driven by our backward-chaining reasoner):

1. http://virtuoso.openlinksw.com/presentations/SPARQL_Tutorials/SPARQL_Tutorials_Part_2/SPARQL_Tutorials_Part_2.html#(60) — owl:sameAs pragma example; results will vary based on the existence of the pragma

2. http://virtuoso.openlinksw.com/presentations/SPARQL_Tutorials/SPARQL_Tutorials_Part_2/SPARQL_Tutorials_Part_2.html#(42) — other examples that go beyond owl:sameAs .

Kingsley